Defining Virtual Cloud Servers

Virtual cloud servers, also known as virtual machines (VMs), let businesses and individuals run workloads on cloud infrastructure that scales with demand. Each one provides a virtualized environment that behaves like a physical server, but its resources can be allocated, resized, and billed far more flexibly. Understanding their core components and how they differ from physical servers is crucial for using them effectively.

Virtual cloud servers consist of several key components working in harmony. These include the virtualized hardware (CPU, RAM, storage, network interfaces), the hypervisor (the software that manages the virtualized resources), the operating system installed within the VM, and the applications and data residing on the OS. Each VM is isolated from others, providing security and preventing interference.

Virtual Cloud Servers versus Physical Servers

The primary difference between a virtual cloud server and a physical server lies in their underlying architecture. A physical server is a self-contained unit of hardware; without virtualization, a single physical machine runs one operating system and its associated applications. In contrast, a virtual cloud server is a software-defined machine running on a physical host that is typically shared with many other VMs. This virtualization allows for greater resource utilization and scalability. A single physical server can host dozens, even hundreds, of virtual cloud servers, significantly reducing hardware costs and energy consumption. Furthermore, managing and maintaining virtual cloud servers is often simpler, as hardware maintenance and similar tasks are handled by the cloud provider. Finally, the flexibility to scale resources up or down on demand is a key advantage of virtual cloud servers, because costs can track actual needs.

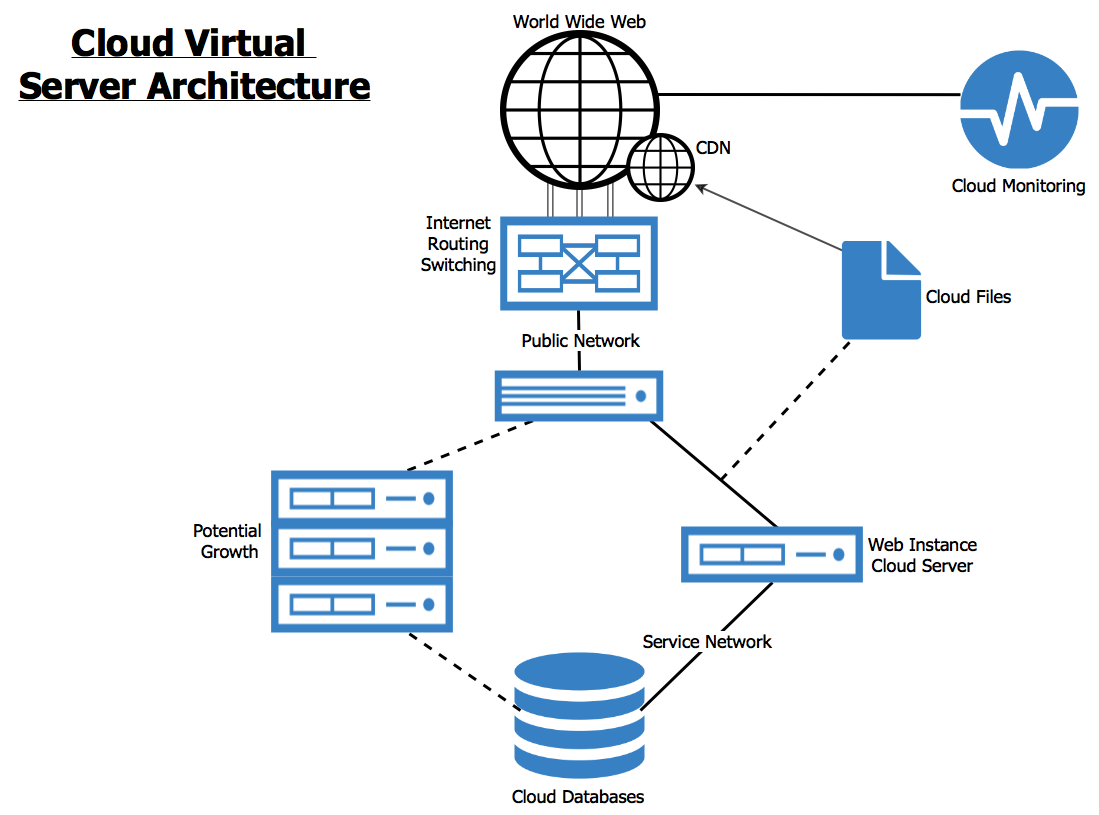

Comparison of Virtual Cloud Server Architectures

Several architectures exist for deploying virtual cloud servers, each offering different trade-offs in terms of performance, scalability, and cost. A common architecture is the IaaS (Infrastructure as a Service) model, where the cloud provider manages the underlying hardware, while the user manages the operating system and applications. This offers significant flexibility and control. Another popular model is PaaS (Platform as a Service), where the provider manages both the hardware and the operating system, leaving the user to focus solely on application development and deployment. This simplifies the management process but can limit customization options. Finally, some cloud providers offer serverless computing, where the provider manages everything except the code itself. The code is executed only when needed, eliminating the need to manage servers entirely. The choice of architecture depends heavily on the specific needs and resources of the user. For example, a large enterprise might choose IaaS for maximum control, while a startup might prefer PaaS or serverless for ease of use and reduced operational overhead. Each architecture presents a unique balance between control, management complexity, and cost.

Virtual Cloud Server Deployment Models

Choosing the right deployment model is crucial for optimizing your virtual cloud server’s performance, scalability, and cost-effectiveness. Different models offer varying levels of control and responsibility, impacting both the initial setup and ongoing management. Understanding these differences is key to making an informed decision.

Deployment models for virtual cloud servers primarily fall under Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and, less commonly in the context of individual servers, Software as a Service (SaaS). Each model represents a different level of abstraction and management responsibility.

Infrastructure as a Service (IaaS)

IaaS provides the most control. Users manage the operating system, applications, middleware, and data. The cloud provider only manages the underlying physical infrastructure, including the hardware, networking, and virtualization. This gives users maximum flexibility but also requires significant technical expertise.

- Advantages: High customization, granular control, cost optimization potential (pay only for what you use), and portability.

- Disadvantages: Higher management overhead, requires significant technical expertise, and potential for increased security responsibilities.

Platform as a Service (PaaS)

PaaS offers a middle ground, abstracting away much of the underlying infrastructure management. Users manage applications and data, while the provider handles the operating system, middleware, and server infrastructure. This simplifies deployment and management, reducing the technical expertise required.

- Advantages: Reduced management overhead, faster deployment times, simplified scaling, and improved developer productivity.

- Disadvantages: Less control over the underlying infrastructure, potential vendor lock-in, and potentially higher costs compared to IaaS for highly customized applications.

Deploying a Virtual Cloud Server using a Popular Provider’s API

Many cloud providers, such as Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure, offer robust APIs for programmatic server deployment. This allows for automation and integration with existing infrastructure management tools. For example, using Boto3, the AWS SDK for Python, one can create an EC2 instance (a virtual server) with a few lines of code. This approach makes deployments consistent and repeatable, which is crucial for large-scale operations. A typical process involves authenticating, specifying the instance type, operating system image, storage, and network configuration, then creating the instance and monitoring its status until it is running.
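The process described above can be sketched with Boto3 roughly as follows. This is a minimal illustration, not a production deployment script: the helper names, the default instance type, the volume size, and the root device name are assumptions chosen for the example, and the AMI ID must be supplied by the caller. Credentials are expected to come from the environment (for example, an AWS profile).

```python
from typing import Any, Optional


def build_run_instances_params(
    image_id: str,
    instance_type: str = "t3.micro",          # assumption: any valid instance type
    volume_size_gib: int = 20,                 # assumption: illustrative root volume size
    subnet_id: Optional[str] = None,
    security_group_ids: Optional[list] = None,
    name: str = "example-server",              # hypothetical Name tag
) -> dict:
    """Assemble keyword arguments for EC2 run_instances. No AWS call is made here."""
    params: dict = {
        "ImageId": image_id,                   # operating system image (AMI)
        "InstanceType": instance_type,         # CPU and RAM size
        "MinCount": 1,
        "MaxCount": 1,
        "BlockDeviceMappings": [{
            "DeviceName": "/dev/xvda",         # assumption: the root device name depends on the AMI
            "Ebs": {"VolumeSize": volume_size_gib, "VolumeType": "gp3"},
        }],
        "TagSpecifications": [{
            "ResourceType": "instance",
            "Tags": [{"Key": "Name", "Value": name}],
        }],
    }
    if subnet_id:
        params["SubnetId"] = subnet_id         # network placement
    if security_group_ids:
        params["SecurityGroupIds"] = security_group_ids
    return params


def launch_instance(params: dict, region: str = "us-east-1") -> str:
    """Create the instance and wait until it reports the 'running' state."""
    import boto3  # imported lazily so the builder above works without boto3 installed

    ec2 = boto3.client("ec2", region_name=region)
    response: Any = ec2.run_instances(**params)
    instance_id = response["Instances"][0]["InstanceId"]
    ec2.get_waiter("instance_running").wait(InstanceIds=[instance_id])
    return instance_id
```

Separating parameter construction from the API call keeps the configuration easy to review and unit test before anything is actually provisioned.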

Deploying a Virtual Cloud Server using a Command-Line Interface

Deploying a virtual cloud server via a command-line interface (CLI) offers a flexible and powerful alternative to using a graphical user interface (GUI). This approach is especially useful for scripting and automation. The specific commands will vary depending on the cloud provider, but the general steps remain consistent.

- Authentication: Establish a secure connection to the cloud provider’s CLI using appropriate credentials.

- Resource Specification: Define the required resources, such as instance type, operating system image, storage size, and network configuration.

- Instance Creation: Execute the appropriate CLI command to create the virtual server instance based on the specified parameters.

- Security Group Configuration: Configure security groups to control inbound and outbound network traffic to the instance, ensuring security best practices are followed.

- Instance Monitoring: Monitor the instance’s status and ensure it’s running correctly.

For example, using the Google Cloud CLI (gcloud), one might create a Compute Engine instance with a command similar to: gcloud compute instances create my-instance --zone us-central1-a --machine-type n1-standard-1 --image-family ubuntu-2204-lts --image-project ubuntu-os-cloud. This command creates an instance named “my-instance” in the specified zone, using a standard machine type and an Ubuntu image selected by its image family and image project. Remember to replace the zone, machine type, and image values with your desired specifications.
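For scripting, it can help to assemble the CLI call as an argument list rather than a hand-typed string. The sketch below builds a gcloud command in Python; the helper name is hypothetical, and the image is selected with the --image-family and --image-project flags, which is how gcloud picks a public image. The specific values are placeholders.

```python
import shlex


def build_gcloud_create_command(name, zone, machine_type, image_family, image_project):
    """Return the argument list for `gcloud compute instances create`."""
    return [
        "gcloud", "compute", "instances", "create", name,
        f"--zone={zone}",
        f"--machine-type={machine_type}",
        f"--image-family={image_family}",
        f"--image-project={image_project}",
    ]


command = build_gcloud_create_command(
    name="my-instance",
    zone="us-central1-a",
    machine_type="n1-standard-1",
    image_family="ubuntu-2204-lts",    # assumption: substitute the image family you need
    image_project="ubuntu-os-cloud",
)

# Print the command in a shell-safe form for review or logging.
print(shlex.join(command))

# To actually run it (requires an installed and authenticated gcloud CLI):
#   import subprocess
#   subprocess.run(command, check=True)
```

Passing a list to subprocess avoids shell quoting problems when instance names or other values come from user input.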

Security Considerations for Virtual Cloud Servers

Securing virtual cloud servers is paramount due to their inherent vulnerabilities and the sensitive data they often hold. Understanding common threats and implementing robust security practices is crucial for maintaining data integrity, ensuring business continuity, and complying with relevant regulations. This section details common threats, best practices, and a comprehensive checklist to bolster your virtual cloud server security posture.

Common Security Threats Associated with Virtual Cloud Servers

Virtual cloud servers, while offering scalability and flexibility, are susceptible to various security threats. These threats can be broadly categorized into those targeting the infrastructure itself, the operating system and applications running on the server, and the data stored and processed. Examples include unauthorized access through compromised credentials or vulnerabilities in the underlying infrastructure, malware infections leading to data breaches or system disruptions, denial-of-service attacks overwhelming server resources, and insider threats from malicious or negligent employees. The distributed nature of cloud environments also introduces complexities in managing security across multiple layers and locations.

Best Practices for Securing a Virtual Cloud Server Environment

Implementing a multi-layered security approach is essential for protecting virtual cloud servers. This involves a combination of preventative, detective, and corrective measures. Strong password policies and multi-factor authentication are fundamental, minimizing the risk of unauthorized access. Regularly patching operating systems and applications closes known vulnerabilities exploited by attackers. Network security measures, such as firewalls and intrusion detection/prevention systems, monitor and control network traffic, preventing malicious activity. Data encryption, both in transit and at rest, protects sensitive data from unauthorized access, even if a breach occurs. Regular security audits and penetration testing identify vulnerabilities and weaknesses before attackers can exploit them. Finally, robust incident response planning ensures a swift and effective response in the event of a security incident.

Security Measures Checklist for Virtual Cloud Servers

Implementing a comprehensive set of security measures is vital for optimal protection. The following table outlines key measures, their descriptions, and implementation steps:

| Measure | Description | Implementation Steps |
|---|---|---|
| Strong Passwords and Multi-Factor Authentication (MFA) | Enforces strong, unique passwords and adds an extra layer of security using MFA. | Enforce password complexity requirements (length, character types). Implement MFA using methods like TOTP, U2F, or hardware tokens. Regularly review and update password policies. |
| Regular Patching and Updates | Applies security patches and updates to operating systems, applications, and firmware to address known vulnerabilities. | Establish a regular patching schedule. Utilize automated patching tools where possible. Thoroughly test patches in a non-production environment before deploying to production. |
| Network Security (Firewall, IDS/IPS) | Implements firewalls to control network traffic and intrusion detection/prevention systems to monitor for malicious activity. | Configure firewalls to allow only necessary traffic. Deploy and configure IDS/IPS systems to detect and respond to suspicious network activity. Regularly review and update firewall rules and IDS/IPS signatures. |
| Data Encryption (In Transit and At Rest) | Protects data from unauthorized access using encryption both while it is being transmitted and while it is stored. | Use HTTPS for data in transit. Encrypt data at rest using tools like disk encryption or database encryption. Regularly review and update encryption keys. |
| Regular Security Audits and Penetration Testing | Identifies vulnerabilities and weaknesses in the system through regular security assessments and simulated attacks. | Schedule regular security audits conducted by internal or external security professionals. Conduct penetration testing to identify exploitable vulnerabilities. Address identified vulnerabilities promptly. |
| Access Control and Least Privilege | Limits user access to only the resources and functionalities they need to perform their job. | Implement role-based access control (RBAC). Grant users only the minimum necessary privileges. Regularly review and update access control lists. |
| Security Information and Event Management (SIEM) | Collects and analyzes security logs from various sources to detect and respond to security threats. | Implement a SIEM system to collect and analyze security logs. Configure alerts for critical events. Regularly review and analyze SIEM data. |
| Incident Response Plan | Outlines procedures to follow in the event of a security incident. | Develop a comprehensive incident response plan. Regularly test and update the plan. Train personnel on incident response procedures. |
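As one illustration of the "allow only necessary traffic" item, the sketch below scans a list of inbound firewall rules for sensitive ports that are open to the entire internet. The rule layout mirrors the shape EC2 uses for security group permissions (FromPort, ToPort, IpRanges with CidrIp), but the function name and the list of sensitive ports are assumptions made for this example.

```python
# Assumption: an illustrative set of ports that should rarely be open to everyone.
SENSITIVE_PORTS = {22: "SSH", 3389: "RDP", 3306: "MySQL", 5432: "PostgreSQL"}


def find_open_sensitive_rules(ingress_rules):
    """Return sorted (port, service) pairs reachable from any IPv4 address."""
    findings = []
    for rule in ingress_rules:
        open_to_world = any(
            ip_range.get("CidrIp") == "0.0.0.0/0"
            for ip_range in rule.get("IpRanges", [])
        )
        if not open_to_world:
            continue
        # Rules that allow all protocols carry no port range; treat them as all ports.
        from_port = rule.get("FromPort", 0)
        to_port = rule.get("ToPort", 65535)
        for port, service in SENSITIVE_PORTS.items():
            if from_port <= port <= to_port:
                findings.append((port, service))
    return sorted(findings)


example_rules = [
    {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
     "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},       # public HTTPS: expected
    {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
     "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},       # SSH open to the world: flagged
    {"IpProtocol": "tcp", "FromPort": 5432, "ToPort": 5432,
     "IpRanges": [{"CidrIp": "10.0.0.0/16"}]},     # database limited to a private range
]
print(find_open_sensitive_rules(example_rules))
```

A check like this could run on a schedule as part of the regular firewall rule review listed in the table.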

Virtual Cloud Server Management and Monitoring

Effective management and monitoring are crucial for ensuring the optimal performance and reliability of your virtual cloud server. Proactive monitoring allows for early detection of potential problems, minimizing downtime and maximizing resource utilization. This section details various methods for monitoring performance, managing resources, and troubleshooting common issues.

Monitoring Virtual Cloud Server Performance

Several methods exist for monitoring the performance of a virtual cloud server. These range from built-in tools provided by cloud providers to third-party monitoring solutions. Effective monitoring involves tracking key metrics to identify bottlenecks and areas for improvement.

- Cloud Provider’s Monitoring Tools: Most cloud providers (e.g., AWS CloudWatch, Azure Monitor, Google Cloud Monitoring) offer comprehensive monitoring dashboards that provide real-time insights into CPU utilization, memory usage, network traffic, disk I/O, and other crucial metrics. These tools often include alerting capabilities, notifying administrators of potential issues.

- Third-Party Monitoring Tools: Solutions like Datadog, Nagios, and Prometheus offer advanced monitoring and alerting features, often integrating with multiple cloud platforms and providing more granular control over monitoring parameters and reporting. They can provide more sophisticated visualizations and analysis capabilities than built-in tools.

- Custom Scripts and Tools: For highly specialized monitoring needs, administrators can develop custom scripts using tools like PowerShell, Python, or Bash to collect and analyze specific performance metrics relevant to their applications.
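A custom monitoring script of the kind mentioned in the last item might look like the following sketch. It uses only the Python standard library; the function names and alert thresholds are assumptions for illustration, and a real deployment would typically forward these values to a monitoring service rather than evaluate them locally.

```python
import os
import shutil


def collect_metrics(path=None):
    """Collect a few host-level metrics using only the standard library."""
    path = path or os.path.abspath(os.sep)      # root of the current filesystem
    disk = shutil.disk_usage(path)
    used_percent = round(100 * disk.used / disk.total, 1) if disk.total else 0.0
    metrics = {
        "cpu_count": os.cpu_count() or 1,
        "disk_used_percent": used_percent,
    }
    if hasattr(os, "getloadavg"):               # not available on Windows
        try:
            metrics["load_1m"] = os.getloadavg()[0]
        except OSError:
            pass                                # load average could not be obtained
    return metrics


def find_alerts(metrics, disk_threshold=90.0, load_per_cpu_threshold=1.5):
    """Compare metrics against thresholds (assumed values) and list any alerts."""
    alerts = []
    if metrics["disk_used_percent"] >= disk_threshold:
        alerts.append("disk usage high")
    load = metrics.get("load_1m")
    if load is not None and load / metrics["cpu_count"] >= load_per_cpu_threshold:
        alerts.append("cpu load high")
    return alerts


current = collect_metrics()
print(current, find_alerts(current))
```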

Managing Virtual Cloud Server Resources

Efficient resource management is key to optimizing cost and performance. This involves dynamically adjusting CPU, memory, and storage allocations based on application demands and usage patterns.

- CPU Management: Cloud providers allow scaling CPU resources up or down as needed. For example, during peak usage times, you might increase the number of vCPUs allocated to your server. Conversely, during periods of low activity, you can reduce the vCPU allocation to save costs. Monitoring CPU utilization is essential for making informed decisions about scaling.

- Memory Management: Similar to CPU management, memory allocation can be adjusted based on application requirements. Monitoring memory usage helps identify memory leaks or applications consuming excessive memory, allowing for optimization or resource scaling.

- Storage Management: Cloud storage solutions offer various options for managing storage capacity and performance. This includes increasing or decreasing storage volume size, choosing different storage tiers (e.g., SSD vs. HDD) based on performance needs and cost considerations, and implementing strategies for data backup and archiving.

Troubleshooting Common Virtual Cloud Server Issues

A systematic approach to troubleshooting is crucial for resolving issues quickly and effectively. This involves identifying the problem, isolating the cause, and implementing a solution.

- Connectivity Problems: Network connectivity issues can stem from various sources, including network configuration errors, firewall rules, or DNS problems. Troubleshooting involves checking network settings, reviewing firewall logs, and testing network connectivity using tools like ping and traceroute.

- Application Errors: Application errors can be due to code bugs, misconfigurations, or resource limitations. Troubleshooting involves reviewing application logs, checking resource usage, and debugging the application code.

- Server Performance Issues: Slow performance can result from high CPU or memory utilization, insufficient storage I/O, or network bottlenecks. Troubleshooting involves monitoring resource usage, identifying bottlenecks, and adjusting resource allocations or optimizing the application.
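For connectivity problems, ping only shows that a host answers ICMP; it does not show whether a specific service port is reachable. The sketch below attempts a TCP connection and maps the common failure modes to likely causes. The function name and messages are illustrative, and the interpretations are rules of thumb rather than definitive diagnoses.

```python
import socket


def check_tcp(host, port, timeout=3.0):
    """Return (ok, detail) for a TCP connection attempt to host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True, "connected"
    except socket.gaierror as exc:
        # The host name could not be resolved: check DNS settings.
        return False, f"DNS resolution failed: {exc}"
    except socket.timeout:
        # No reply at all: often a firewall or security group silently dropping packets.
        return False, "timed out"
    except ConnectionRefusedError:
        # The host replied, but nothing is listening on that port.
        return False, "connection refused"
    except OSError as exc:
        return False, f"network error: {exc}"
```

For example, `check_tcp("example.com", 443)` tests whether an HTTPS endpoint is reachable from the machine running the script.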

Cost Optimization Strategies for Virtual Cloud Servers

Managing the cost of virtual cloud servers is crucial for maintaining a healthy budget and maximizing return on investment. Effective cost optimization involves a proactive approach to resource allocation, usage monitoring, and the selection of appropriate pricing models. This section details various strategies to reduce cloud spending without compromising performance or reliability.

Resource Optimization Techniques

Reducing unnecessary resource consumption is a cornerstone of cost optimization. This involves right-sizing instances, leveraging reserved instances or committed use discounts, and effectively managing storage. Right-sizing involves selecting the instance type that precisely meets your application’s needs, avoiding over-provisioning which leads to wasted compute power and increased costs. For example, if your application consistently uses only 20% of a large instance’s capacity, downsizing to a smaller instance can significantly reduce costs. Reserved instances and committed use discounts offer significant price reductions in exchange for a long-term commitment to a specific instance type and quantity. Similarly, efficient storage management, including regular data cleanup, archiving of less frequently accessed data to cheaper storage tiers, and utilizing cost-effective storage solutions like object storage, can substantially lower storage costs.
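The right-sizing reasoning can be made concrete with a small calculation. The sketch below assumes a hypothetical ladder of instance sizes and a target peak CPU utilization of 60%; both are placeholders, and real decisions should also consider memory, I/O, and burst behavior.

```python
# Hypothetical size names and vCPU counts, smallest first.
SIZES = [("small", 2), ("medium", 4), ("large", 8), ("xlarge", 16)]


def recommend_size(current_vcpus, peak_cpu_percent, target_percent=60.0):
    """Pick the smallest size whose projected peak CPU stays at or below the target."""
    busy_vcpus = current_vcpus * peak_cpu_percent / 100.0   # vCPUs actually in use at peak
    for name, vcpus in SIZES:
        projected_percent = busy_vcpus / vcpus * 100.0
        if projected_percent <= target_percent:
            return name, vcpus
    return SIZES[-1]                                        # nothing fits: keep the largest


# An 8-vCPU instance peaking at 20% CPU uses about 1.6 vCPUs.
print(recommend_size(current_vcpus=8, peak_cpu_percent=20))
```

In this example the 2-vCPU size would run at about 80% at peak, above the assumed target, so the 4-vCPU size (about 40% at peak) is the recommendation.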

Choosing the Right Pricing Model

Cloud providers offer a variety of pricing models, each with its own advantages and disadvantages. Understanding these models is critical for selecting the most cost-effective option for your specific needs. The choice depends heavily on your workload characteristics, predicted usage patterns, and budget constraints. Careful consideration of factors like predicted usage, consistency of demand, and tolerance for interruptions can significantly influence the optimal pricing model.

Comparison of Cloud Pricing Models

| Provider | Pricing Model | Pros | Cons |
|---|---|---|---|
| Amazon Web Services (AWS) | On-Demand | Pay-as-you-go flexibility, ideal for unpredictable workloads. | Can be more expensive for consistent, high-usage applications. |
| AWS | Reserved Instances | Significant discounts for long-term commitments. | Requires accurate workload forecasting; purchases generally cannot be cancelled, so unused capacity is still billed. |
| Microsoft Azure | Pay-As-You-Go | Flexible pricing, suitable for varying workloads. | Higher costs for sustained high usage compared to committed use discounts. |
| Azure | Reserved Virtual Machine Instances | Cost savings for consistent usage; various reservation terms available. | Requires commitment; potential for wasted resources if usage patterns change. |
| Google Cloud Platform (GCP) | Sustained Use Discounts | Automatic discounts for resources that run for a large share of the billing month. | Requires consistent usage to achieve maximum discounts. |
| GCP | Committed Use Discounts | Significant discounts for long-term commitments. | Requires accurate forecasting; commitments generally cannot be cancelled once purchased. |
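Whether a commitment pays off depends on how many hours per month the server actually runs. The sketch below computes the break-even point between pay-as-you-go and committed pricing. The hourly rates are made-up placeholders, not real provider prices, and the model assumes the commitment is billed for every hour of the month whether or not the server is used.

```python
HOURS_PER_MONTH = 730  # approximate average number of hours in a month


def monthly_cost_on_demand(hourly_rate, hours_used):
    """Pay-as-you-go: billed only for hours actually used."""
    return hourly_rate * hours_used


def monthly_cost_committed(committed_hourly_rate):
    """Commitment: billed for the whole month regardless of usage (assumed model)."""
    return committed_hourly_rate * HOURS_PER_MONTH


def break_even_utilization(on_demand_rate, committed_rate):
    """Fraction of the month above which the commitment becomes cheaper."""
    return committed_rate / on_demand_rate


# Hypothetical rates: 0.10 per hour on demand, 0.06 per hour committed.
share = break_even_utilization(0.10, 0.06)
print(f"Commitment pays off above {share:.0%} utilization")
```

With these placeholder rates, a server that runs less than about 60% of the month is cheaper on demand, which is why steady workloads are the usual candidates for commitments.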

Auto-Scaling and Load Balancing

Auto-scaling dynamically adjusts the number of virtual cloud servers based on demand, ensuring optimal performance while minimizing wasted resources. This is particularly beneficial for applications with fluctuating workloads. By automatically scaling up during peak demand and scaling down during low demand periods, organizations can significantly reduce costs compared to maintaining a consistently high number of servers. Load balancing distributes traffic across multiple servers, preventing any single server from becoming overloaded and ensuring consistent application performance. This prevents the need for over-provisioning individual servers to handle peak loads. Effective implementation of auto-scaling and load balancing can lead to substantial cost savings while maintaining high availability.

Scalability and Elasticity of Virtual Cloud Servers

Virtual cloud servers offer a significant advantage over traditional physical servers through their inherent scalability and elasticity. This means that resources allocated to a virtual server can be dynamically adjusted to meet fluctuating demands, ensuring optimal performance and cost-efficiency. This adaptability is crucial in today’s dynamic IT landscape, where workloads can vary dramatically over short periods.

The ability to scale resources up or down allows businesses to respond effectively to peak demands without over-provisioning resources during periods of low activity. This flexibility translates to significant cost savings and improved resource utilization. Furthermore, elasticity enables applications to automatically adjust their resource consumption based on real-time needs, ensuring consistent performance even under unpredictable load.

Vertical Scaling (Scaling Up/Down)

Vertical scaling involves modifying the resources allocated to a single virtual server. This could involve increasing the CPU power, RAM, or storage capacity of the server to handle increased workloads (scaling up). Conversely, when demand decreases, these resources can be reduced (scaling down) to optimize costs. The change itself is usually a simple modification through the cloud provider’s control panel or API, although on many platforms resizing CPU or memory requires stopping and restarting the server, so a brief interruption should be planned for. For example, a web server expecting a surge in traffic might have its CPU cores doubled for the duration of the peak, then be returned to the original configuration once demand subsides. This lets the server handle the load without performance degradation while avoiding unnecessary expenses during periods of low usage.

Horizontal Scaling (Scaling Out/In)

Horizontal scaling involves adding or removing entire virtual servers from a pool of servers. If demand increases significantly, additional servers can be added to distribute the workload (scaling out). This approach is particularly beneficial for applications that can be easily distributed across multiple servers, such as web applications or databases. When demand decreases, servers can be removed (scaling in) to reduce costs. This process is often automated using load balancers and auto-scaling features. For instance, a large e-commerce website anticipates a significant increase in traffic during holiday sales. To handle this, they could automatically provision additional web servers to distribute the load and ensure the site remains responsive. After the holiday season, the extra servers are decommissioned, reducing the operational costs.

Auto-Scaling Features

Auto-scaling features automate the process of scaling resources based on predefined metrics. These metrics can include CPU utilization, memory usage, network traffic, or custom application-specific metrics. When a metric exceeds a defined threshold, the auto-scaling system automatically adds or removes resources to maintain optimal performance and meet the current demand. This eliminates the need for manual intervention and ensures that resources are always optimally allocated. The benefits of auto-scaling include improved application availability, reduced operational overhead, and optimized cost management.
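The core decision an auto-scaler makes can be sketched in a few lines. The function below implements a simple threshold policy: add one server when CPU is above an upper threshold, remove one when it is below a lower threshold, and always stay within the configured minimum and maximum. The function name and the default thresholds of 80% and 20% are assumptions for illustration; real services add cooldown periods and averaging windows.

```python
def desired_capacity(current, cpu_percent, minimum=1, maximum=10,
                     scale_out_above=80.0, scale_in_below=20.0):
    """Return the new server count after one evaluation of a threshold policy."""
    if cpu_percent > scale_out_above:
        current += 1            # scale out: add a server
    elif cpu_percent < scale_in_below:
        current -= 1            # scale in: remove a server
    # Clamp to the configured bounds.
    return max(minimum, min(maximum, current))


# Simulate a traffic spike followed by a quiet period.
servers = 2
for cpu in [85, 90, 75, 15, 10, 5]:
    servers = desired_capacity(servers, cpu)
    print(f"cpu={cpu}% -> servers={servers}")
```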

Configuring Auto-Scaling on Amazon Web Services (AWS)

AWS offers a robust auto-scaling service called Amazon EC2 Auto Scaling. To configure auto-scaling for a group of EC2 instances, one would first create a launch template specifying the desired instance type, AMI (Amazon Machine Image), and other settings (AWS now recommends launch templates over the older launch configurations). Next, an Auto Scaling group is created, defining the capacity (minimum, maximum, and desired number of instances), health checks, and scaling policies. Scaling policies are crucial, defining the conditions under which the Auto Scaling group will scale out or in. These policies can be based on metrics like CPU utilization, custom metrics from CloudWatch, or even scheduled events. For example, a policy might be set to automatically add instances when CPU utilization exceeds 80% for a sustained period, and remove instances when utilization falls below 20%. The service continuously monitors the specified metrics and adjusts the number of instances accordingly.
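A rough Boto3 sketch of this configuration follows. It assumes a launch template already exists, and it uses a target tracking policy (keep average CPU near a target value) instead of the separate 80% and 20% thresholds described above, since that needs only a single call. The helper names, group sizes, and target value are illustrative assumptions.

```python
def build_auto_scaling_requests(group_name, launch_template_name, subnet_ids,
                                min_size=1, max_size=4, desired=2, target_cpu=50.0):
    """Build request parameters for an Auto Scaling group and a CPU target tracking policy."""
    group = {
        "AutoScalingGroupName": group_name,
        "LaunchTemplate": {"LaunchTemplateName": launch_template_name, "Version": "$Latest"},
        "MinSize": min_size,
        "MaxSize": max_size,
        "DesiredCapacity": desired,
        "VPCZoneIdentifier": ",".join(subnet_ids),   # comma-separated subnet IDs
        "HealthCheckType": "EC2",
        "HealthCheckGracePeriod": 300,               # seconds before health checks count
    }
    policy = {
        "AutoScalingGroupName": group_name,
        "PolicyName": f"{group_name}-cpu-target",    # hypothetical naming scheme
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ASGAverageCPUUtilization",
            },
            "TargetValue": target_cpu,
        },
    }
    return group, policy


def apply_auto_scaling(group, policy, region="us-east-1"):
    """Send both requests to AWS (requires boto3 and valid credentials)."""
    import boto3  # imported lazily so the builder above works without boto3 installed

    client = boto3.client("autoscaling", region_name=region)
    client.create_auto_scaling_group(**group)
    client.put_scaling_policy(**policy)
```

Spreading the subnets across several availability zones lets the group replace capacity in another zone if one becomes unavailable.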

Virtual Cloud Server Use Cases

Virtual cloud servers offer a highly flexible and scalable solution for a wide range of applications across diverse industries. Their on-demand nature and pay-as-you-go pricing models make them an attractive option for businesses of all sizes, from startups to large enterprises. The versatility of virtual cloud servers allows them to be tailored to specific needs, maximizing efficiency and minimizing costs.

Virtual cloud servers are deployed across numerous sectors, leveraging their inherent advantages to enhance operational efficiency and support business growth. Their scalability and adaptability make them ideal for handling fluctuating workloads and supporting rapid expansion.

Web Hosting

Virtual cloud servers are extensively used for web hosting, providing the necessary resources to manage websites and web applications. Their scalability allows hosting providers to easily accommodate fluctuating traffic demands, ensuring consistent website performance even during peak hours. The ability to quickly provision additional resources prevents service disruptions and ensures a positive user experience. For example, a rapidly growing e-commerce business can easily scale its web hosting infrastructure on a virtual cloud server to handle increased traffic during promotional periods or holiday sales, avoiding service outages that would negatively impact revenue.

Application Development and Deployment

Virtual cloud servers provide a robust and efficient environment for application development and deployment. Developers can create and test applications in a controlled environment, replicating production conditions with ease. The scalability of virtual cloud servers allows for easy scaling of applications based on user demand, ensuring optimal performance and reliability. For instance, a software company developing a new mobile game can utilize virtual cloud servers to run extensive load tests before launch, identifying and resolving potential bottlenecks to ensure a smooth and enjoyable user experience upon release.

Big Data Analytics

The processing power and storage capacity of virtual cloud servers are invaluable for big data analytics. These servers can handle large datasets and complex algorithms, enabling businesses to extract valuable insights from their data. The ability to easily scale resources allows analysts to handle growing datasets without compromising performance. A financial institution, for example, could use virtual cloud servers to process vast amounts of transactional data to detect fraudulent activities in real-time, leveraging the scalability to handle peak processing demands during specific periods.

High-Performance Computing (HPC)

Virtual cloud servers are increasingly used for high-performance computing tasks, such as scientific simulations, financial modeling, and video rendering. The ability to provision powerful virtual machines with specialized hardware configurations allows researchers and engineers to complete complex computations quickly and efficiently. A pharmaceutical company, for instance, might leverage virtual cloud servers to perform complex molecular simulations to accelerate drug discovery and development, utilizing the high processing power for quicker results and reduced research time.

Database Management

Virtual cloud servers provide a reliable and scalable platform for managing databases. The ability to easily scale resources ensures that databases can handle increasing amounts of data and user traffic. Furthermore, features like automated backups and disaster recovery ensure data protection and business continuity. A large retail company, for example, could use virtual cloud servers to manage its customer database, ensuring high availability and data integrity while scaling resources to accommodate seasonal increases in sales and transaction volumes.

Common Applications Best Suited for a Virtual Cloud Server Environment

Virtual cloud servers are well-suited for applications requiring scalability, flexibility, and high availability. This includes applications such as:

- Web applications

- Mobile applications

- Databases

- E-commerce platforms

- Big data analytics platforms

- High-performance computing applications

- DevOps environments

- Testing and development environments

The choice of a virtual cloud server deployment is often driven by the need for on-demand scalability, cost-effectiveness, and the ability to quickly adapt to changing business requirements. The inherent flexibility and robust features make virtual cloud servers a compelling solution for a diverse range of applications.

Comparing Different Virtual Cloud Server Providers

Choosing the right virtual cloud server provider is crucial for the success of any cloud-based project. The optimal provider depends heavily on individual needs, considering factors like budget, required resources, and specific application demands. This section compares three major providers – Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) – highlighting their strengths and weaknesses.

Provider Feature Comparison

The following table summarizes key features of AWS, Azure, and GCP, focusing on compute, storage, and networking capabilities. Direct comparisons are challenging due to the extensive and constantly evolving nature of each provider’s offerings. This table represents a snapshot based on generally available services at the time of writing. Specific pricing will vary based on region, instance type, and usage.

| Feature | Amazon Web Services (AWS) | Microsoft Azure | Google Cloud Platform (GCP) |
|---|---|---|---|
| Compute (VM Instances) | Wide range of instance types, optimized for various workloads (e.g., EC2). Strong emphasis on scalability and customization. | Diverse VM options with similar scalability to AWS. Good integration with other Microsoft services. | Competitive range of VM types, known for its strong performance in specific areas like machine learning. |
| Storage | Extensive storage options including S3 (object storage), EBS (block storage), and Glacier (archive storage). Highly scalable and reliable. | Azure Blob Storage (object), Azure Disks (block), and Azure Archive Storage offer comparable functionality to AWS. | Cloud Storage (object), Persistent Disk (block), and Archive Storage provide a similar range of storage solutions. |
| Networking | Robust networking infrastructure with features like VPC (Virtual Private Cloud) for enhanced security and control. | Azure Virtual Network provides similar capabilities to AWS VPC, enabling secure and isolated network environments. | Virtual Private Cloud (VPC) offers comparable features to AWS and Azure, enabling secure network segmentation. |
| Pricing | Pay-as-you-go model with various pricing options and discounts available. Can become complex to manage costs effectively without careful planning. | Similar pay-as-you-go model with competitive pricing. Offers various discounts and reserved instance options. | Competitive pricing structure with a pay-as-you-go model and opportunities for cost optimization. |

AWS Strengths and Weaknesses

AWS, the market leader, boasts extensive services, a vast global infrastructure, and a mature ecosystem. However, its sheer scale can lead to complexity, potentially increasing management overhead and costs if not properly managed. The pricing model, while flexible, can be intricate, requiring careful monitoring to avoid unexpected expenses.

Microsoft Azure Strengths and Weaknesses

Azure offers strong integration with Microsoft’s ecosystem, making it a compelling choice for organizations heavily invested in Microsoft technologies. Its global reach is comparable to AWS, providing reliable infrastructure. However, some might find its user interface less intuitive than AWS, and its market share in certain niche areas lags behind AWS.

Google Cloud Platform (GCP) Strengths and Weaknesses

GCP is known for its strong performance in specific areas, particularly machine learning and big data processing. It offers competitive pricing and a robust infrastructure. However, its market share is smaller than AWS and Azure, meaning a potentially smaller community and fewer readily available third-party tools.

Disaster Recovery and High Availability with Virtual Cloud Servers

Ensuring the continuous operation and data protection of virtual cloud servers is paramount for business continuity. High availability (HA) and disaster recovery (DR) strategies are crucial for mitigating the impact of outages, whether caused by hardware failure, natural disasters, or cyberattacks. These strategies work in tandem to minimize downtime and data loss, maintaining business operations and customer trust.

High availability focuses on preventing outages through redundancy and failover mechanisms, while disaster recovery focuses on restoring services and data in the event of a catastrophic event impacting the primary infrastructure. Effective implementation requires a well-defined plan, incorporating robust backup and replication methods, and regular testing to validate its effectiveness.

High Availability Strategies

High availability for virtual cloud servers typically relies on redundant components and automated failover mechanisms. This might involve deploying multiple virtual servers across different availability zones within a cloud provider’s infrastructure. If one server fails, the system automatically switches to a standby server, minimizing disruption. Load balancing distributes traffic across multiple servers, preventing any single server from becoming overloaded and failing. Regular health checks monitor the status of servers, triggering failover if a problem is detected. For example, a web application might use multiple instances distributed across different availability zones. If one zone experiences an outage, the load balancer redirects traffic to instances in other zones, maintaining service availability.
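The failover behavior described above can be sketched in a few lines of Python. This is a minimal illustration, not any provider's load balancer: the `Instance` and `LoadBalancer` classes, the instance names, and the zone names are all hypothetical, and a real health check would probe the server over the network rather than read a flag.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    name: str
    zone: str
    healthy: bool = True  # stand-in for the result of a real health check


class LoadBalancer:
    """Round-robin balancer that skips instances failing their health check."""

    def __init__(self, instances):
        self.instances = list(instances)
        self._next = 0  # index of the next instance to try

    def mark_zone_down(self, zone):
        """Simulate a zone outage: every instance in that zone becomes unhealthy."""
        for instance in self.instances:
            if instance.zone == zone:
                instance.healthy = False

    def route(self):
        """Return the next healthy instance, or raise if none remain."""
        for _ in range(len(self.instances)):
            candidate = self.instances[self._next]
            self._next = (self._next + 1) % len(self.instances)
            if candidate.healthy:
                return candidate
        raise RuntimeError("no healthy instances available")


# Hypothetical deployment: one web server in each of three zones.
balancer = LoadBalancer([
    Instance("web-1", "zone-a"),
    Instance("web-2", "zone-b"),
    Instance("web-3", "zone-c"),
])
balancer.mark_zone_down("zone-a")
served_by = [balancer.route().name for _ in range(4)]
# served_by == ["web-2", "web-3", "web-2", "web-3"]
```

After the simulated outage in `zone-a`, requests continue to be served by the instances in the two remaining zones, which is the outcome the multi-zone design is meant to guarantee.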

Data Protection through Backups and Replication

Regular backups are essential for data protection and disaster recovery. These backups should be stored in a geographically separate location to protect against data loss from regional disasters. Different backup strategies exist, including full backups, incremental backups, and differential backups. Full backups create a complete copy of the data. Incremental backups capture only the changes made since the most recent backup of any type, while differential backups capture all changes made since the last full backup. Incremental backups are therefore smaller and faster to take, but a restore needs the last full backup plus every incremental backup taken after it; a differential restore needs only the last full backup and the latest differential. Replication creates copies of data in real time or near real time, ensuring that a recent copy is always available in a different location. This allows for faster recovery times compared to relying solely on backups. For instance, a company might use a combination of on-site backups and off-site cloud-based replication to protect its critical data. This ensures data protection even in the event of a local disaster affecting the primary data center.
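To make the three backup strategies concrete, here is a small Python sketch that decides which files a backup run would copy. It assumes the standard definitions: a differential backup takes everything changed since the last full backup, and an incremental backup takes everything changed since the most recent backup of any kind. The function name, file names, and integer timestamps are hypothetical; real backup tools track changes in more robust ways than modification times.

```python
def files_to_back_up(mtimes, strategy, last_full, last_backup):
    """Return the sorted file names a backup run would copy.

    mtimes      -- mapping of file name to last-modified timestamp
    strategy    -- "full", "differential", or "incremental"
    last_full   -- timestamp of the most recent full backup
    last_backup -- timestamp of the most recent backup of any kind
    """
    if strategy == "full":
        return sorted(mtimes)  # copy everything
    if strategy == "differential":
        cutoff = last_full     # changes since the last full backup
    elif strategy == "incremental":
        cutoff = last_backup   # changes since the last backup of any kind
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return sorted(name for name, mtime in mtimes.items() if mtime > cutoff)


# Hypothetical timeline: full backup at t=10, incremental backup at t=15.
mtimes = {"a.db": 5, "b.log": 12, "c.cfg": 17}

full = files_to_back_up(mtimes, "full", last_full=10, last_backup=15)
differential = files_to_back_up(mtimes, "differential", last_full=10, last_backup=15)
incremental = files_to_back_up(mtimes, "incremental", last_full=10, last_backup=15)
# full         == ["a.db", "b.log", "c.cfg"]
# differential == ["b.log", "c.cfg"]
# incremental  == ["c.cfg"]
```

The differential run re-copies `b.log` even though the earlier incremental run already captured it, which is why differential backups grow over time but are simpler to restore from.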

Disaster Recovery Plan for Virtual Cloud Servers

A comprehensive disaster recovery plan is vital. This plan should outline procedures for responding to various disaster scenarios, including hardware failures, natural disasters, and cyberattacks. It should define roles and responsibilities, communication protocols, recovery time objectives (RTOs), and recovery point objectives (RPOs). RTO specifies the maximum acceptable downtime after a disaster, while RPO defines the maximum acceptable data loss, measured as the time between the last recoverable copy of the data and the disaster. The plan should detail steps for restoring virtual servers from backups or replicated data, along with the testing procedures used to verify the plan’s effectiveness. For example, a company might define an RTO of 4 hours and an RPO of 2 hours for its critical applications. This means the systems should be restored within 4 hours of a disaster, and no more than 2 hours of data loss is acceptable. The plan would include detailed steps for restoring the application from backups and replicated data, along with a schedule for regular testing and updates. The plan should also address communication with stakeholders and clients during the recovery process.
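A recovery test can be checked against these objectives with simple date arithmetic. The Python sketch below uses the 4-hour RTO and 2-hour RPO from the example above; the function name and the timestamps of the simulated incident are hypothetical and chosen only for illustration.

```python
from datetime import datetime, timedelta

RTO = timedelta(hours=4)  # maximum acceptable downtime
RPO = timedelta(hours=2)  # maximum acceptable window of lost data


def meets_objectives(last_recovery_point, disaster_at, restored_at, rto=RTO, rpo=RPO):
    """Compare one incident (or recovery drill) against the RTO and RPO."""
    data_loss = disaster_at - last_recovery_point  # work done since the last good copy
    downtime = restored_at - disaster_at           # time the service was unavailable
    return {
        "data_loss": data_loss,
        "downtime": downtime,
        "rpo_met": data_loss <= rpo,
        "rto_met": downtime <= rto,
    }


# Hypothetical drill: last replica at 09:30, failure at 10:45, service back at 14:15.
result = meets_objectives(
    last_recovery_point=datetime(2024, 1, 1, 9, 30),
    disaster_at=datetime(2024, 1, 1, 10, 45),
    restored_at=datetime(2024, 1, 1, 14, 15),
)
# Data loss is 1 h 15 min and downtime is 3 h 30 min, so both objectives are met.
```

Running a check like this after every scheduled recovery test gives a simple record of whether the plan still meets its targets as the environment changes.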

Frequently Asked Questions

What is the difference between a virtual and a physical server?

A physical server is a standalone computer, while a virtual server is a software-based emulation of a physical server running on a physical host. Multiple virtual servers can share the resources of a single physical server.

How do I choose the right virtual cloud server provider?

Consider factors like pricing, features (compute, storage, networking), security, customer support, and scalability when selecting a provider. Evaluate your specific needs and compare offerings from different providers.

What are the common security threats associated with virtual cloud servers?

Common threats include unauthorized access, data breaches, denial-of-service attacks, and malware infections. Implementing strong security measures is crucial to mitigate these risks.

Can I migrate my existing applications to a virtual cloud server?

Yes, many applications can be migrated to a virtual cloud server. The complexity of migration depends on the application’s architecture and dependencies.