Defining Cloud Server Infrastructure

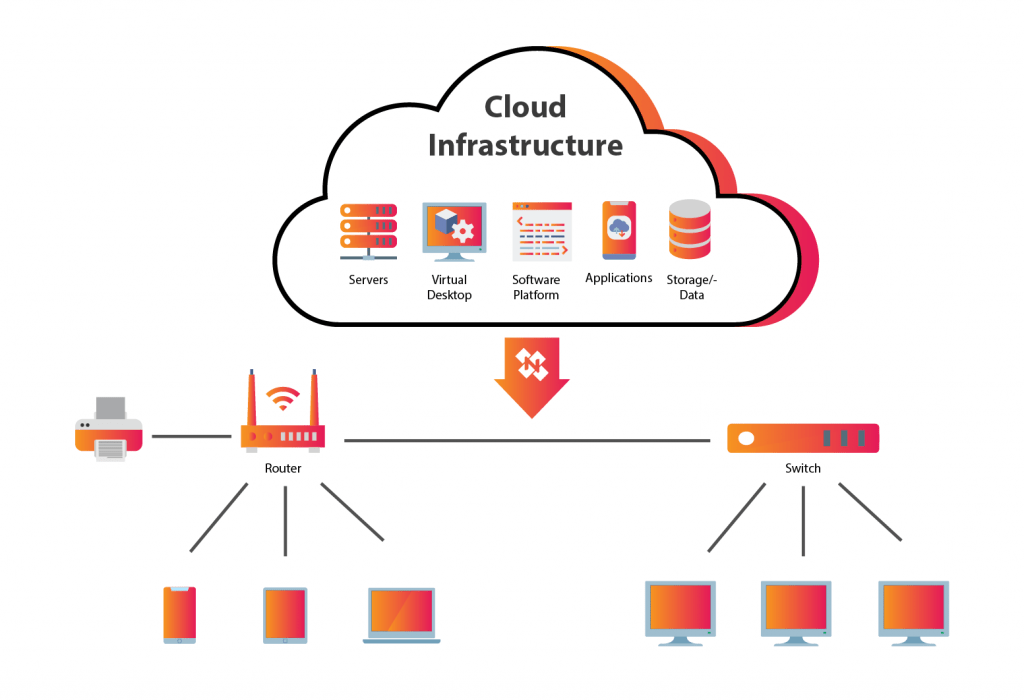

Cloud server infrastructure represents the underlying hardware and software resources that enable the delivery of computing services over the internet. It encompasses a vast network of interconnected servers, storage devices, and networking components, all managed and provisioned to provide scalable and on-demand access to IT resources. This eliminates the need for organizations to invest heavily in and maintain their own physical data centers.

Cloud server infrastructure is built upon several core components working in concert. These components ensure the reliable and efficient delivery of services.

Core Components of Cloud Server Infrastructure

The core components of cloud server infrastructure are intricately linked to provide a seamless and scalable service. These include the physical servers themselves, the virtualization technology that allows for efficient resource allocation, the networking infrastructure connecting everything, and the management software that orchestrates the entire system. Crucially, robust security measures are integrated throughout to protect data and resources. Without these interconnected elements, the efficient delivery of cloud services would be impossible. For example, virtualization allows multiple virtual servers to run on a single physical server, maximizing resource utilization and reducing costs. The network infrastructure provides low-latency communication between servers and users. Sophisticated management software allows for automation, monitoring, and efficient scaling of resources to meet changing demands.

Cloud Deployment Models: Public, Private, and Hybrid

The choice of cloud deployment model significantly impacts an organization’s infrastructure strategy, security needs, and cost considerations. Public clouds, such as Amazon Web Services (AWS) or Microsoft Azure, provide shared resources accessible over the internet, offering scalability and cost-effectiveness. Private clouds, on the other hand, are dedicated resources hosted either on-premises or by a third-party provider, offering greater control and security but potentially higher costs. Hybrid clouds combine the two, letting each workload run in whichever environment suits it best. For example, a company might use a public cloud for less sensitive data and applications while keeping critical data and systems within a private cloud for enhanced security.

Cloud Service Models: IaaS, PaaS, and SaaS

Different cloud service models cater to various needs and levels of technical expertise. Infrastructure as a Service (IaaS) provides access to fundamental computing resources such as virtual machines, storage, and networking. Users manage operating systems and applications. Platform as a Service (PaaS) offers a platform for application development and deployment, abstracting away much of the underlying infrastructure management. Software as a Service (SaaS) provides fully managed applications accessible over the internet, requiring minimal user management. For instance, IaaS is akin to renting a server, PaaS is like renting a pre-configured development environment, and SaaS is like renting a fully functional application such as email or CRM software. Each model offers a different level of control and responsibility for the user.

Security Considerations in Cloud Server Infrastructure

Migrating to a cloud environment offers numerous benefits, but it also introduces a new set of security challenges. Understanding and mitigating these risks is crucial for maintaining the confidentiality, integrity, and availability of your data and applications. This section will explore common threats, best practices, and a sample security architecture for a cloud-based application.

Common Security Threats and Vulnerabilities in Cloud Environments

Cloud environments, while offering scalability and flexibility, are susceptible to various security threats. These threats often exploit vulnerabilities inherent in the shared responsibility model of cloud computing, where security responsibilities are divided between the cloud provider and the customer. Misconfigurations, insufficient access control, and inadequate data protection measures are among the most prevalent issues. For example, leaving default passwords unchanged on virtual machines or failing to properly configure firewalls can leave systems vulnerable to unauthorized access. Furthermore, the distributed nature of cloud environments makes it more challenging to detect and respond to security incidents promptly. Data breaches, denial-of-service attacks, and insider threats are all potential concerns.
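To make the misconfiguration risk concrete, here is a minimal sketch of the kind of check a posture-management tool automates: flagging administrative or database ports that are reachable from any address. The `IngressRule` type and the port list are hypothetical and do not correspond to any provider's API; a real scan would read the rules from the provider.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    """Hypothetical, provider-agnostic inbound firewall rule."""
    port: int
    source_cidr: str


# Illustrative list of ports that should rarely face the open internet.
SENSITIVE_PORTS = {22: "SSH", 3389: "RDP", 5432: "PostgreSQL"}


def find_exposed_ports(rules):
    """Return a description of every sensitive port open to all addresses."""
    findings = []
    for rule in rules:
        # A /0 prefix (0.0.0.0/0 or ::/0) matches every possible source address.
        open_to_world = ipaddress.ip_network(rule.source_cidr).prefixlen == 0
        if open_to_world and rule.port in SENSITIVE_PORTS:
            findings.append(
                f"{SENSITIVE_PORTS[rule.port]} (port {rule.port}) open to {rule.source_cidr}"
            )
    return findings


rules = [
    IngressRule(port=443, source_cidr="0.0.0.0/0"),     # public HTTPS: expected
    IngressRule(port=22, source_cidr="0.0.0.0/0"),      # SSH from anywhere: risky
    IngressRule(port=5432, source_cidr="10.0.0.0/16"),  # database, internal only
]
print(find_exposed_ports(rules))  # ['SSH (port 22) open to 0.0.0.0/0']
```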

Best Practices for Securing Cloud Server Infrastructure

Implementing robust security measures is paramount for protecting cloud-based systems. A layered security approach, combining multiple security controls, is highly recommended. This includes strong authentication and authorization mechanisms, such as multi-factor authentication (MFA) and role-based access control (RBAC). Regular security audits and penetration testing can identify vulnerabilities before they are exploited. Data encryption, both in transit and at rest, is crucial for protecting sensitive information. Utilizing cloud-native security tools, such as cloud security posture management (CSPM) and cloud workload protection platforms (CWPP), can provide enhanced visibility and control over cloud resources. Maintaining up-to-date software and patching systems promptly is also essential to mitigate known vulnerabilities. Finally, a well-defined incident response plan is crucial for effectively handling security incidents and minimizing their impact.
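Role-based access control combined with MFA can be reduced to a deny-by-default decision function. This is a minimal sketch, not any provider's IAM model; the role names and action strings are hypothetical.

```python
# Hypothetical role definitions: each role grants a set of actions.
ROLE_PERMISSIONS = {
    "viewer": {"instance:describe"},
    "operator": {"instance:describe", "instance:start", "instance:stop"},
    "admin": {"instance:describe", "instance:start", "instance:stop", "instance:terminate"},
}


def is_allowed(user_roles, action, mfa_verified):
    """Deny by default: require a completed MFA challenge and at least
    one assigned role that explicitly grants the requested action."""
    if not mfa_verified:
        return False
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


print(is_allowed(["operator"], "instance:stop", mfa_verified=True))       # True
print(is_allowed(["operator"], "instance:terminate", mfa_verified=True))  # False
print(is_allowed(["admin"], "instance:terminate", mfa_verified=False))    # False
```

Unknown roles simply grant nothing, so a typo in a role assignment fails closed rather than open.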

Example Security Architecture for a Hypothetical Cloud-Based Application

Consider a hypothetical e-commerce application deployed on a cloud platform like AWS. The architecture would incorporate several security layers. The application itself would be containerized and deployed using Kubernetes, with network policies restricting communication between containers. A web application firewall (WAF) would protect against common web attacks. Data at rest would be encrypted using server-side encryption, and data in transit would be secured using TLS. User authentication would leverage MFA and RBAC, limiting access based on user roles. Centralized logging and monitoring would provide visibility into system activity, enabling the detection of suspicious behavior. Regular vulnerability scans and penetration testing would be conducted to identify and address potential weaknesses. Finally, an incident response plan would outline procedures for handling security incidents, including communication protocols and escalation paths. This layered approach provides a robust security posture for the application, minimizing the risk of breaches and data loss.
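The network-policy layer can be sketched as a Kubernetes `NetworkPolicy` manifest. To stay in one example language, this sketch builds the manifest in Python and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The `app` labels and port are hypothetical, and policies only take effect if the cluster's network plugin enforces them.

```python
import json


def allow_from_frontend_only(app_label, frontend_label, port):
    """Build a NetworkPolicy selecting pods labelled app=<app_label> and
    admitting ingress only from app=<frontend_label> pods on one TCP port.
    Once a pod is selected by an Ingress policy, unmatched ingress is denied."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"{app_label}-allow-{frontend_label}"},
        "spec": {
            "podSelector": {"matchLabels": {"app": app_label}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": {"app": frontend_label}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


# Hypothetical services: only the storefront may call the orders API.
policy = allow_from_frontend_only("orders-api", "storefront", 8080)
print(json.dumps(policy, indent=2))
```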

Scalability and Elasticity in Cloud Server Infrastructure

Cloud infrastructure offers significant advantages over traditional on-premises solutions, primarily due to its inherent scalability and elasticity. These characteristics allow businesses to adapt their computing resources to fluctuating demands, optimizing cost and performance. This section will explore how cloud platforms achieve scalability and elasticity, provide examples of scenarios where they are crucial, and discuss methods for efficient resource allocation.

Cloud infrastructure enables scalability and elasticity through its dynamic resource provisioning capabilities. Unlike traditional systems with fixed hardware limitations, cloud environments allow users to easily increase or decrease computing resources (such as CPU, memory, storage, and network bandwidth) on demand. This flexibility is achieved through virtualization and automation, allowing cloud providers to rapidly allocate and deallocate resources based on user needs. This dynamic allocation ensures that applications always have the necessary resources to function optimally, while simultaneously preventing over-provisioning and associated costs.

Scalability and Elasticity Scenarios

Scalability and elasticity are critical in various scenarios. For example, e-commerce businesses experience significant traffic spikes during peak shopping seasons like Black Friday or Cyber Monday. Without scalable infrastructure, their websites might crash under the load, resulting in lost sales and reputational damage. Similarly, media streaming services need to scale their infrastructure to handle simultaneous access from a large number of users during popular events or new content releases. A cloud-based architecture allows these services to add capacity automatically as demand rises and scale back down once the peak subsides, balancing performance and cost. Another example is a SaaS company experiencing rapid user growth: cloud infrastructure lets it expand server capacity to accommodate new users without the lengthy procurement and setup processes associated with traditional on-premises solutions.

Optimizing Resource Allocation for Enhanced Scalability

Efficient resource allocation is crucial for maximizing the benefits of cloud scalability and elasticity. Several methods contribute to this optimization. Auto-scaling features, offered by most major cloud providers, automatically adjust resources based on predefined metrics such as CPU utilization, memory usage, or network traffic. This removes the need for manual intervention and keeps provisioned capacity close to actual demand. Right-sizing instances involves selecting the appropriate size of virtual machines (VMs) for specific workloads. Over-provisioning leads to unnecessary costs, while under-provisioning can result in performance bottlenecks. Cloud monitoring tools provide real-time insights into resource usage, enabling proactive adjustments to prevent performance issues. Finally, containerization technologies such as Docker and Kubernetes improve resource utilization by packaging applications and their dependencies into isolated units, enabling efficient resource sharing and deployment across multiple environments. Careful planning and design, combined with these tools and techniques, keep resource allocation efficient and maximize the benefits of cloud scalability and elasticity.
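Metric-based auto-scaling often uses a proportional rule: scale the instance count by the ratio of the observed metric to its target. The Kubernetes Horizontal Pod Autoscaler documents this as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below applies that rule with minimum and maximum bounds; it omits the tolerance band and cooldown or stabilization windows that real autoscalers add, and the numbers are illustrative.

```python
import math


def desired_replicas(current, metric, target, min_replicas, max_replicas):
    """Proportional scaling: resize so the average per-instance metric
    (for example CPU %) moves toward `target`, clamped to the allowed range."""
    if current <= 0:
        return min_replicas
    desired = math.ceil(current * metric / target)
    return max(min_replicas, min(max_replicas, desired))


# 4 instances averaging 90% CPU against a 60% target: scale out to 6.
print(desired_replicas(4, metric=90, target=60, min_replicas=2, max_replicas=12))    # 6
# Load drops to 20% average: 4 * 20 / 60 rounds up to 2 instances.
print(desired_replicas(4, metric=20, target=60, min_replicas=2, max_replicas=12))    # 2
# A spike that would need 20 instances is capped at the configured maximum.
print(desired_replicas(10, metric=100, target=50, min_replicas=2, max_replicas=12))  # 12
```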

Cost Optimization in Cloud Server Infrastructure

Minimizing cloud computing costs is crucial for maintaining profitability and maximizing return on investment. Effective cost optimization strategies involve a combination of proactive planning, continuous monitoring, and the adoption of cost-effective practices throughout the lifecycle of your cloud infrastructure. This section explores key strategies and provides a sample cost optimization plan.

Strategies for Minimizing Cloud Computing Costs

Several key strategies contribute to significant cost reductions in cloud environments. These strategies encompass various aspects of cloud resource management, from efficient resource utilization to leveraging cost-effective pricing models.

- Rightsizing Instances: Choosing the appropriate instance size for your workload is paramount. Over-provisioning leads to wasted resources and unnecessary expense. Regularly review instance utilization metrics and adjust sizes as needed to match actual demand. For example, downsizing from a large instance to a medium instance during periods of low activity can yield considerable savings.

- Reserved Instances and Savings Plans: Committing to using specific resources for a defined period (typically one or three years) allows cloud providers to offer discounted rates. Reserved Instances (RIs) and Savings Plans are excellent options for predictable workloads and can yield significant savings compared to on-demand pricing, provided the committed capacity is actually used.

- Spot Instances: Spot instances offer significant cost reductions by utilizing spare compute capacity. While there’s a risk of interruption, they are ideal for fault-tolerant applications or batch processing tasks that can tolerate occasional downtime. Using Spot Instances for non-critical tasks can result in savings of up to 90% compared to on-demand pricing.

- Automated Scaling: Auto-scaling dynamically adjusts the number of instances based on demand, preventing over-provisioning during periods of low activity and ensuring sufficient capacity during peak times. This eliminates the need for manual intervention and ensures optimal resource utilization, leading to cost savings.

- Resource Tagging and Cost Allocation: Implementing a comprehensive tagging strategy allows for granular tracking of resource costs. This facilitates cost allocation across different teams or projects, enabling better budget management and identifying areas for optimization. For example, tagging resources by department or project helps determine which team is consuming the most resources and pinpoint areas for improvement.
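Once resources carry tags, cost allocation is a grouping exercise over billing data. The sketch below assumes billing line items have already been exported as `(resource_id, tags, monthly_cost)` tuples; the resource names, tag key, and dollar amounts are hypothetical. Untagged spend is reported separately, since it is usually the first thing a tagging policy needs to fix.

```python
from collections import defaultdict

# Hypothetical billing export: (resource_id, tags, monthly cost in USD).
line_items = [
    ("vm-web-1", {"project": "storefront"}, 70.0),
    ("vm-web-2", {"project": "storefront"}, 70.0),
    ("db-main", {"project": "orders"}, 310.0),
    ("vm-test", {}, 45.0),  # no tags: cost cannot be attributed
]


def cost_by_tag(items, tag_key):
    """Sum costs per value of `tag_key`, bucketing untagged items together."""
    totals = defaultdict(float)
    for _resource_id, tags, cost in items:
        totals[tags.get(tag_key, "untagged")] += cost
    return dict(totals)


print(cost_by_tag(line_items, "project"))
# {'storefront': 140.0, 'orders': 310.0, 'untagged': 45.0}
```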

Cost Optimization Plan: Sample Deployment

Consider a hypothetical e-commerce website requiring a cloud infrastructure. The initial deployment includes:

* Web servers: 3 x medium instances (on-demand)

* Database server: 1 x large instance (on-demand)

* Load balancer: 1 x small instance (on-demand)

A cost optimization plan would involve:

1. Rightsizing: Analyze resource utilization. If the web servers consistently operate below 50% utilization, downsize to small instances. If the database server shows consistently high utilization, consider upgrading to a larger instance type to remove the performance bottleneck, accepting a higher instance cost in exchange.

2. Reserved Instances/Savings Plans: After assessing consistent usage patterns, transition to Reserved Instances or Savings Plans for the web servers and database server. This will provide substantial cost reductions.

3. Auto-scaling: Implement auto-scaling for the web servers to handle fluctuating traffic demands. This ensures optimal resource utilization during peak hours and minimizes wasted resources during low-traffic periods.

4. Monitoring and Optimization: Regularly review cost and usage reports. Identify underutilized resources and adjust accordingly. Leverage cloud provider tools to gain insights into cost drivers and optimize resource allocation.
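The rightsizing rule in step 1 can be expressed as a small function over utilization samples. The 50% lower bound comes from the plan; the 80% upper bound and the sample values are hypothetical, and a real review would also consider memory, I/O, and longer time windows.

```python
def rightsizing_advice(cpu_samples, low=50.0, high=80.0):
    """Classify an instance from CPU utilization samples (percent).

    "Consistently" is read strictly: every sample must fall below `low`
    to suggest downsizing, or above `high` to suggest upsizing.
    """
    if not cpu_samples:
        raise ValueError("need at least one utilization sample")
    if max(cpu_samples) < low:
        return "downsize"
    if min(cpu_samples) > high:
        return "upsize"
    return "keep"


web_server_cpu = [31, 42, 38, 45, 29]  # hypothetical hourly averages
db_server_cpu = [86, 91, 88, 93, 84]
print(rightsizing_advice(web_server_cpu))  # downsize
print(rightsizing_advice(db_server_cpu))   # upsize
```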

Comparison of Cloud Provider Pricing Models

Different cloud providers offer various pricing models. The following table compares pricing models across AWS, Azure, and GCP, highlighting key differences.

| Feature | AWS | Azure | GCP |
|---|---|---|---|
| On-Demand Instances | Pay-as-you-go for compute time. | Pay-as-you-go for compute time. | Pay-as-you-go for compute time. |
| Reserved Instances/Savings Plans | Reserved Instances and Savings Plans offer discounted rates for committed usage. | Azure Reservations and savings plans offer discounted rates for committed usage. | Offers sustained use discounts and committed use discounts. |
| Spot Instances | Significant discounts for unused capacity, but with risk of interruption. | Spot Virtual Machines offer similar discounts with a similar risk of eviction. | Spot VMs (the successor to preemptible VMs) offer significantly reduced prices with a risk of preemption. |
| Pricing Transparency | Detailed pricing calculators and cost management tools available. | Provides pricing calculators and cost management tools. | Offers detailed pricing calculators and cost management tools. |
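Whether a commitment discount pays off depends on how much of the committed capacity is actually used. The sketch below compares a single instance's monthly cost under both models; the hourly rates are hypothetical placeholders rather than real prices, and it ignores upfront-payment options and automatic discounts such as GCP's sustained use discounts.

```python
HOURS_PER_MONTH = 730  # common approximation used for monthly cloud estimates


def monthly_cost(on_demand_rate, committed_rate, utilization):
    """Return (on_demand_cost, committed_cost) for one instance per month.

    utilization is the fraction of hours the instance actually runs (0 to 1).
    On-demand bills only the hours used; a commitment bills every hour.
    """
    on_demand = on_demand_rate * HOURS_PER_MONTH * utilization
    committed = committed_rate * HOURS_PER_MONTH
    return on_demand, committed


def break_even_utilization(on_demand_rate, committed_rate):
    """Utilization above which the commitment becomes the cheaper option."""
    return committed_rate / on_demand_rate


# Hypothetical rates in USD per hour, not real prices for any provider.
od_rate, commit_rate = 0.10, 0.06
print(f"break-even at {break_even_utilization(od_rate, commit_rate):.0%} utilization")
for u in (0.4, 1.0):
    od, committed = monthly_cost(od_rate, commit_rate, u)
    print(f"utilization {u:.0%}: on-demand ${od:.2f} vs committed ${committed:.2f}")
```

With these placeholder rates the commitment wins above 60% utilization: at 40% the on-demand instance costs $29.20 a month against $43.80 committed, while running around the clock it costs $73.00.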

Cloud Server Infrastructure Management Tools

Effective management of cloud server infrastructure is crucial for maintaining performance, security, and cost-efficiency. This requires a robust suite of tools capable of monitoring, automating, and optimizing various aspects of the infrastructure. The right tools can significantly reduce manual effort, improve response times to issues, and enhance overall operational efficiency.

A wide range of tools and platforms are available, catering to different needs and scales of operation. These tools can be broadly categorized into those focused on monitoring and logging, and those designed for automation and orchestration. The choice of tools often depends on the specific cloud provider being used (AWS, Azure, GCP, etc.), the complexity of the infrastructure, and the organization’s specific requirements.

Popular Cloud Management Tools and Platforms

Several prominent platforms offer comprehensive management capabilities for cloud infrastructure. These platforms often integrate various functionalities, including monitoring, automation, and security management, into a single interface. This consolidated approach streamlines operations and provides a unified view of the entire infrastructure.

- AWS Management Console: Amazon’s web-based interface provides access to manage all AWS services. It offers features for provisioning, configuring, and monitoring resources across various AWS services like EC2, S3, and RDS.

- Azure Portal: Microsoft’s cloud management portal allows users to manage their Azure resources, including virtual machines, storage accounts, and networking components. It offers similar functionalities to the AWS Management Console, including resource provisioning, configuration, and monitoring.

- Google Cloud Console: Google’s web-based console offers a similar set of tools for managing Google Cloud Platform (GCP) resources, providing a centralized interface for managing compute engines, storage buckets, and other GCP services.

- Terraform: This open-source Infrastructure as Code (IaC) tool allows for the definition and management of infrastructure across multiple cloud providers using a declarative configuration language. It enables automation of infrastructure provisioning and updates.

- Ansible: An open-source automation tool that describes IT infrastructure tasks, including cloud server management, in human-readable YAML playbooks. It excels at configuration management and application deployment.

Monitoring and Logging Tools for Cloud Infrastructure

Real-time monitoring and comprehensive logging are essential for maintaining the health and security of cloud server infrastructure. These tools provide valuable insights into system performance, resource utilization, and potential security threats, allowing for proactive identification and resolution of issues.

- CloudWatch (AWS): Provides monitoring and logging services for AWS resources. It collects metrics, logs, and events from various AWS services and allows for setting alarms based on defined thresholds.

- Azure Monitor (Azure): Microsoft’s monitoring service collects metrics and logs from Azure resources, providing insights into performance and availability. It also offers functionalities for log analytics and alerting.

- Cloud Monitoring and Cloud Logging (GCP, formerly Stackdriver): Google Cloud’s monitoring and logging services offer similar functionalities to CloudWatch and Azure Monitor, providing comprehensive monitoring and logging capabilities for GCP resources.

- Prometheus: An open-source monitoring and alerting toolkit that can be used to monitor a wide range of applications and infrastructure components. It’s highly scalable and flexible.

- Grafana: A popular open-source data visualization and analytics platform. It can be used to visualize metrics collected by tools like Prometheus, providing dashboards for monitoring various aspects of the infrastructure.
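Threshold alarms such as those in CloudWatch can be configured to fire only when M of the last N datapoints breach a threshold, which filters out single spikes. The class below is a simplified sketch of that rule; it ignores missing-data handling and notification actions, and the threshold and readings are hypothetical.

```python
from collections import deque


class ThresholdAlarm:
    """Fire when at least `datapoints_to_alarm` of the last
    `evaluation_periods` datapoints exceed `threshold`."""

    def __init__(self, threshold, evaluation_periods, datapoints_to_alarm):
        self.threshold = threshold
        self.datapoints_to_alarm = datapoints_to_alarm
        # Only the most recent `evaluation_periods` breach flags are kept.
        self.window = deque(maxlen=evaluation_periods)

    def observe(self, value):
        self.window.append(value > self.threshold)
        breaches = sum(self.window)  # True counts as 1
        return "ALARM" if breaches >= self.datapoints_to_alarm else "OK"


cpu_alarm = ThresholdAlarm(threshold=80.0, evaluation_periods=3, datapoints_to_alarm=2)
states = [cpu_alarm.observe(v) for v in [70, 85, 75, 90, 60, 50]]
print(states)  # ['OK', 'OK', 'OK', 'ALARM', 'OK', 'OK']
```

The lone reading of 85 does not trigger the alarm; the second breach within three periods does.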

Automating Tasks Related to Cloud Server Infrastructure Management

Automation is key to efficient cloud infrastructure management. Automating repetitive tasks reduces human error, increases speed and consistency, and frees up IT staff to focus on more strategic initiatives. This can involve scripting, using configuration management tools, or leveraging the automation capabilities built into cloud platforms.

For example, automating the provisioning of new servers, deploying applications, scaling resources based on demand, and backing up data are all common use cases. Effective automation typically involves the use of Infrastructure as Code (IaC) tools and scripting languages such as Python or Bash.

- Using IaC tools like Terraform or CloudFormation: These tools allow you to define your infrastructure as code, enabling automated provisioning and updates. Changes to the infrastructure are made by modifying the code, and the tools automatically apply those changes.

- Employing configuration management tools like Ansible or Chef: These tools manage the configuration of servers and applications, ensuring consistency across multiple environments. They automate tasks such as software installation, configuration file updates, and security patching.

- Leveraging cloud provider APIs: Cloud providers offer APIs that allow programmatic interaction with their services. This enables automation of tasks such as creating and deleting resources, managing security groups, and scaling instances.

- Implementing CI/CD pipelines: Continuous Integration/Continuous Delivery (CI/CD) pipelines automate the process of building, testing, and deploying applications. This ensures that code changes are quickly and reliably deployed to the cloud infrastructure.
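Declarative IaC tools work by comparing the configuration you declare with what actually exists and computing the changes needed. The sketch below shows only that diffing idea over plain dictionaries; it is not how Terraform or CloudFormation are implemented internally, and the resource names are hypothetical. Running it against an already-converged state yields no actions, which is what makes the approach safe to repeat.

```python
def plan_changes(desired, actual):
    """Diff desired vs actual resources (name -> config dict) and return the
    actions needed to converge, in the spirit of an IaC 'plan' step."""
    actions = []
    for name in sorted(desired.keys() - actual.keys()):
        actions.append(("create", name))
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(("delete", name))
    for name in sorted(desired.keys() & actual.keys()):
        if desired[name] != actual[name]:
            actions.append(("update", name))
    return actions


# Hypothetical resources and sizes.
desired = {"web-1": {"size": "small"}, "web-2": {"size": "small"}, "db": {"size": "large"}}
actual = {"web-1": {"size": "medium"}, "db": {"size": "large"}, "old-batch": {"size": "small"}}
print(plan_changes(desired, actual))
# [('create', 'web-2'), ('delete', 'old-batch'), ('update', 'web-1')]
print(plan_changes(desired, desired))  # [] (already converged)
```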

Disaster Recovery and Business Continuity in the Cloud

Maintaining business operations during and after disruptive events is critical for any organization. Cloud-based infrastructure offers several advantages in achieving this goal, providing resilience and scalability that traditional on-premises solutions often lack. A robust disaster recovery (DR) and business continuity (BC) plan is essential for leveraging these advantages and minimizing downtime in the face of unforeseen circumstances. This section explores strategies and best practices for ensuring business continuity in a cloud environment.

Strategies for Ensuring Business Continuity in a Cloud Environment

Effective business continuity in the cloud relies on a multi-faceted approach. This includes proactive measures like regular backups, redundancy across multiple availability zones, and well-defined recovery procedures. A crucial element is the selection of appropriate cloud services that offer inherent resilience features. For example, deploying across geographically dispersed regions means that if one region experiences an outage, the application can fail over to another region with minimal disruption. Furthermore, implementing robust monitoring and alerting systems enables proactive identification and mitigation of potential issues before they escalate into major disruptions. Regular testing of the DR plan is also vital to ensure its effectiveness and identify any weaknesses. This testing should simulate various scenarios, including hardware failures, natural disasters, and cyberattacks.
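At its core, cross-region failover is a priority-ordered choice among healthy regions. This minimal sketch assumes health-check results are already available as booleans; the region names are hypothetical, and a production setup would typically delegate this decision to DNS-based or load-balancer health checks.

```python
def choose_active_region(priority_order, health):
    """Return the highest-priority region whose latest health check passed.

    priority_order: regions, most preferred first (names are hypothetical).
    health: region -> bool result of the latest health check; a region with
    no recorded result is treated as unhealthy.
    """
    for region in priority_order:
        if health.get(region, False):
            return region
    raise RuntimeError("no healthy region available; escalate per the DR plan")


regions = ["region-a", "region-b", "region-c"]
print(choose_active_region(regions, {"region-a": True, "region-b": True}))   # region-a
print(choose_active_region(regions, {"region-a": False, "region-b": True}))  # region-b
```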

Designing a Disaster Recovery Plan for a Cloud-Based Application

A comprehensive disaster recovery plan for a cloud-based application should detail procedures for restoring services in the event of an outage. This plan should clearly define roles and responsibilities, recovery time objectives (RTOs), and recovery point objectives (RPOs). The RTO specifies the maximum acceptable downtime after an outage, while the RPO defines the maximum acceptable data loss. For example, a financial institution might have a very low RTO and RPO, while a less critical application might tolerate a longer recovery time and some data loss. The plan should also outline specific steps for recovering data, applications, and infrastructure, including detailed instructions for accessing backup systems and restoring services. This should include the use of automated tools where possible to expedite the recovery process. Regular reviews and updates of the plan are essential to ensure its continued relevance and effectiveness.
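The RTO and RPO definitions can be turned into a simple check on a proposed design. This sketch assumes point-in-time backups, so the worst-case data loss equals one backup interval, and estimates downtime as the sum of the runbook steps; the step names and durations are hypothetical.

```python
from datetime import timedelta


def check_objectives(backup_interval, recovery_steps, rpo, rto):
    """Compare a recovery design against its RPO and RTO.

    Assumes point-in-time backups, so the worst-case data loss is one full
    backup interval; estimated downtime is the sum of the recovery steps.
    """
    worst_case_loss = backup_interval
    estimated_downtime = sum(recovery_steps.values(), timedelta())
    return {
        "rpo_met": worst_case_loss <= rpo,
        "rto_met": estimated_downtime <= rto,
        "estimated_downtime": estimated_downtime,
    }


# Hypothetical recovery runbook timings.
steps = {
    "detect and declare": timedelta(minutes=10),
    "restore from backup": timedelta(minutes=30),
    "validate and switch traffic": timedelta(minutes=5),
}
print(check_objectives(timedelta(hours=1), steps, rpo=timedelta(hours=4), rto=timedelta(hours=1)))
```

With hourly backups and a 45-minute runbook, both objectives are met; tightening the RPO to five minutes, as a financial institution might, fails the check and points toward continuous replication rather than periodic backups.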

The Role of Backups and Redundancy in Cloud Disaster Recovery

Backups and redundancy are cornerstones of effective cloud disaster recovery. Regular backups provide a means of restoring data and applications to a known good state in the event of data loss or corruption. Cloud providers offer various backup services, ranging from simple snapshots to more comprehensive solutions that include replication and archiving. Redundancy, achieved through techniques like replication across multiple availability zones or regions, ensures that services remain available even if one part of the infrastructure fails. This could involve deploying multiple instances of an application across different geographical locations or using redundant storage systems. The choice of backup and redundancy strategies will depend on factors such as the criticality of the application, the RTO and RPO requirements, and the budget constraints. For instance, a highly critical application might require multiple geographically dispersed backups and synchronous replication, while a less critical application may be well served by asynchronous replication and less frequent backups.

Networking Aspects of Cloud Server Infrastructure

Networking forms the backbone of any cloud server infrastructure, enabling communication and data transfer between virtual machines (VMs), applications, and external resources. A robust and well-designed network architecture is crucial for ensuring high availability, performance, and security within a cloud environment. Without efficient networking, the benefits of scalability, elasticity, and cost optimization offered by cloud computing are significantly diminished.

Effective networking in cloud environments involves selecting appropriate network topologies, configuring virtual networks, implementing security measures, and managing network traffic efficiently. These aspects directly impact application performance, user experience, and overall operational efficiency.

Cloud Network Topologies

Cloud providers offer various network topologies to suit different needs and scales. The choice of topology influences factors like latency, bandwidth, and security. Common topologies include: star (hub-and-spoke) topology, where all VMs connect to a central hub or switch; mesh topology, characterized by multiple interconnected paths between VMs, offering redundancy and fault tolerance; and bus topology, a simpler, less redundant design in which all VMs share a single network segment. The selection depends on the specific application requirements and the desired level of redundancy and performance. For instance, a high-availability application might benefit from a mesh topology, while a less critical application may be well served by a star topology.
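The redundancy of a full mesh comes at a cost that grows quadratically with the number of nodes, while a star grows linearly. A quick calculation makes the difference concrete; this sketch counts only direct links and treats the hub of a star as a separate device.

```python
def links_needed(topology, nodes):
    """Direct links required to connect `nodes` endpoints."""
    if topology == "star":
        return nodes                      # one link from each node to the hub
    if topology == "mesh":
        return nodes * (nodes - 1) // 2   # one link per pair of nodes
    raise ValueError(f"unsupported topology: {topology}")


for n in (5, 20):
    print(f"{n} nodes: star {links_needed('star', n)} links, mesh {links_needed('mesh', n)} links")
# 5 nodes: star 5 links, mesh 10 links
# 20 nodes: star 20 links, mesh 190 links
```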

Virtual Network Configuration and Management

Virtual networks (VNs) are logically isolated sections of a cloud provider’s physical network. They allow users to create their own private networks within the cloud, providing enhanced security and control. VN configuration involves defining subnets, assigning IP addresses, configuring routing tables, and implementing security groups or network access control lists (ACLs). Management involves monitoring network performance, troubleshooting connectivity issues, and scaling the network to meet changing demands. For example, a company might create multiple VNs to isolate different departments or applications, enhancing security and preventing unauthorized access. This isolation also simplifies troubleshooting and maintenance, as issues within one VN are less likely to affect others. Furthermore, sophisticated VN management tools offered by cloud providers allow for automated scaling and configuration changes, ensuring optimal performance and resource utilization.
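Subnet planning is mostly CIDR arithmetic, which Python's standard `ipaddress` module handles directly. This sketch carves a hypothetical `10.0.0.0/16` virtual network into non-overlapping /24 subnets for three application tiers; the tier names are illustrative.

```python
import ipaddress

# Hypothetical address space for one virtual network.
vnet = ipaddress.ip_network("10.0.0.0/16")

# Carve the /16 into /24 subnets and hand the first three to application tiers.
available = vnet.subnets(new_prefix=24)
plan = {tier: next(available) for tier in ("public-web", "private-app", "private-db")}

for tier, subnet in plan.items():
    print(f"{tier:<12} {subnet}  ({subnet.num_addresses} addresses)")
```

Providers reserve a few addresses in each subnet (AWS, for example, reserves five), so the usable count is slightly lower than `num_addresses`.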

Network Security in Cloud Environments

Security is paramount in cloud networking. Cloud providers offer various security features to protect virtual networks and the applications running within them. These include virtual firewalls, intrusion detection and prevention systems (IDS/IPS), and virtual private networks (VPNs). Proper configuration of these security features is crucial to prevent unauthorized access and data breaches. Implementing robust security practices, such as regularly updating security patches, employing strong passwords, and implementing multi-factor authentication, is also vital for maintaining a secure cloud environment. Failure to properly configure and manage network security can lead to significant security vulnerabilities and potential data loss. A well-defined security policy and its consistent enforcement are critical for mitigating these risks.
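Rule ordering matters in firewalls and network ACLs. The sketch below evaluates numbered rules lowest first, takes the first match, and denies anything unmatched, which resembles how AWS network ACLs process rules (AWS security groups, by contrast, contain only allow rules). The `AclRule` type is hypothetical, and the addresses are drawn from documentation ranges.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class AclRule:
    """Hypothetical ordered rule; a lower number is evaluated first."""
    number: int
    source_cidr: str
    port: int
    action: str  # "allow" or "deny"


def evaluate(rules, source_ip, port):
    """Return the action of the first matching rule; unmatched traffic is denied."""
    address = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.number):
        if rule.port == port and address in ipaddress.ip_network(rule.source_cidr):
            return rule.action
    return "deny"  # implicit default deny


rules = [
    AclRule(100, "203.0.113.0/24", 443, "deny"),  # block a known-bad range first
    AclRule(200, "0.0.0.0/0", 443, "allow"),      # then allow public HTTPS
    AclRule(300, "10.0.0.0/16", 22, "allow"),     # SSH only from inside the network
]
print(evaluate(rules, "198.51.100.7", 443))  # allow
print(evaluate(rules, "203.0.113.9", 443))   # deny
print(evaluate(rules, "198.51.100.7", 22))   # deny
```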

Choosing the Right Cloud Provider

Selecting the appropriate cloud provider is a crucial decision for any organization, impacting scalability, security, cost, and overall operational efficiency. The diverse landscape of cloud providers, each offering a unique blend of services and features, necessitates a careful evaluation process to align infrastructure needs with provider capabilities. This section will explore key factors to consider and provide a framework for making an informed decision.

Comparison of Major Cloud Providers

The three major players in the cloud computing market—Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP)—each offer a comprehensive suite of services, but with distinct strengths and weaknesses. AWS boasts the largest market share and a mature ecosystem, providing a vast array of services, from compute and storage to machine learning and analytics. Azure excels in hybrid cloud solutions and strong integration with Microsoft products, making it a preferred choice for enterprises already heavily invested in the Microsoft ecosystem. GCP stands out with its strong focus on data analytics, machine learning, and big data processing, offering powerful tools for data-intensive applications. While all three offer comparable core services like virtual machines and storage, their pricing models, service availability, and specific features vary significantly. For instance, AWS might offer a wider range of specialized databases, while Azure might have superior integration with on-premises Active Directory. GCP might be the most cost-effective option for certain large-scale data processing tasks.

Factors to Consider When Selecting a Cloud Provider

Choosing a cloud provider involves considering several critical factors beyond just the core services. These include:

- Service Portfolio: Does the provider offer the specific services needed, such as databases, serverless computing, or specific machine learning models? A comprehensive service portfolio reduces the need for multiple providers and simplifies management.

- Geographic Location and Data Sovereignty: Data residency and compliance regulations often dictate where data must be stored. The provider’s global infrastructure and data center locations are crucial considerations.

- Security and Compliance: Robust security features, compliance certifications (e.g., ISO 27001, SOC 2), and data encryption capabilities are paramount for protecting sensitive data.

- Pricing and Cost Optimization: Cloud pricing models can be complex. Understanding pricing structures, including pay-as-you-go, reserved instances, and spot instances, is vital for cost optimization. Analyzing pricing calculators and comparing costs across providers is essential.

- Scalability and Elasticity: The ability to easily scale resources up or down based on demand is critical. The provider’s infrastructure should be capable of handling fluctuations in workload and ensuring consistent performance.

- Support and Documentation: Reliable customer support and comprehensive documentation are essential for resolving issues and efficiently managing the cloud infrastructure. Consider the availability of 24/7 support and the quality of the provider’s knowledge base.

- Vendor Lock-in: The potential for vendor lock-in should be evaluated. Consider the ease of migrating data and applications to another provider if necessary.

Decision-Making Framework for Choosing a Cloud Provider

A structured approach to selecting a cloud provider is essential. This framework outlines a step-by-step process:

- Define Requirements: Clearly articulate the organization’s specific needs, including application requirements, data storage needs, security requirements, scalability expectations, and budget constraints.

- Evaluate Providers: Based on the defined requirements, shortlist potential providers and thoroughly evaluate their service offerings, pricing models, security features, and compliance certifications. Use the provider’s documentation and pricing calculators to estimate costs.

- Conduct Proof of Concept (POC): Perform a POC with the top contenders to test their services and evaluate their performance in a real-world scenario. This allows for hands-on experience and validation of claims.

- Negotiate Contracts: Once a provider is selected, carefully negotiate the contract terms, focusing on pricing, service level agreements (SLAs), and support provisions.

- Implement and Monitor: After implementation, continuously monitor performance, costs, and security to ensure the cloud infrastructure meets the organization’s needs.
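The evaluation step is often formalized as a weighted scoring matrix: each criterion from the factor list gets a weight reflecting its importance, each shortlisted provider gets a score per criterion, and the weighted sum ranks the candidates. The following is a minimal sketch of that technique; the criteria, weights, 1-5 scale, and provider names are all hypothetical and should come from the organization’s own requirements.

```python
from typing import Dict, List, Tuple

# Hypothetical criteria weights (summing to 1.0) drawn from the selection factors
WEIGHTS: Dict[str, float] = {
    "security": 0.30,
    "cost": 0.25,
    "services": 0.20,
    "support": 0.15,
    "portability": 0.10,  # higher score = lower vendor lock-in risk
}


def weighted_score(scores: Dict[str, float], weights: Dict[str, float] = WEIGHTS) -> float:
    """Combine per-criterion scores (here on a 1-5 scale) into one weighted total."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return round(sum(weights[c] * scores[c] for c in weights), 2)


def rank_providers(candidates: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """Return (provider, score) pairs, highest weighted score first."""
    ranked = [(name, weighted_score(scores)) for name, scores in candidates.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)
```

A matrix like this narrows the shortlist for the proof of concept; it does not replace it, since scores for criteria such as support quality are subjective estimates until tested.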

Emerging Trends in Cloud Server Infrastructure

The cloud computing landscape is in constant flux, driven by technological advancements and evolving business needs. Emerging trends are reshaping how businesses design, deploy, and manage their server infrastructure, leading to greater efficiency, scalability, and cost optimization. These advancements are not merely incremental improvements; they represent fundamental shifts in the way we approach computing.

Serverless computing and edge computing are two particularly impactful examples of these emerging trends. They address specific challenges in traditional cloud architectures, opening up new possibilities for application development and deployment.

Serverless Computing

Serverless computing represents a paradigm shift from traditional server management. Instead of provisioning and managing servers, developers focus on writing and deploying code, typically as functions triggered by events. The cloud provider handles the underlying infrastructure, automatically scaling resources based on demand. This largely eliminates server administration, reduces operational overhead, and allows developers to concentrate on application logic. The cost model is typically pay-per-use, which is most cost-efficient for intermittent or bursty workloads; for sustained, high-volume traffic, always-on virtual machines can be cheaper. For instance, a company deploying an event processing application with highly variable load could see significant cost savings by using a serverless architecture instead of managing a fleet of virtual machines sized for peak demand. The automatic scaling of serverless functions allows the application to absorb sudden spikes in user activity without manual intervention, within the provider’s concurrency limits.
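The pay-per-use model can be compared with an always-on alternative using simple arithmetic: serverless platforms commonly bill a per-request charge plus compute time measured in GB-seconds (memory allocated × execution duration). The sketch below assumes hypothetical prices and a hypothetical fixed monthly VM cost; substitute the provider’s published rates before drawing conclusions.

```python
# Hypothetical prices for illustration only; use the provider's published rates.
PRICE_PER_MILLION_REQUESTS = 0.20  # USD (assumed)
PRICE_PER_GB_SECOND = 0.0000167    # USD per GB-second of execution (assumed)
VM_MONTHLY_COST = 60.0             # USD for always-on VMs sized for peak load (assumed)


def serverless_monthly_cost(requests: int, avg_duration_ms: float, memory_gb: float) -> float:
    """Pay-per-use estimate: a per-request charge plus compute time in GB-seconds."""
    gb_seconds = requests * (avg_duration_ms / 1000) * memory_gb
    request_cost = requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    return round(request_cost + gb_seconds * PRICE_PER_GB_SECOND, 2)


def cheaper_option(requests: int, avg_duration_ms: float, memory_gb: float) -> str:
    """Compare the serverless estimate against the fixed VM cost."""
    cost = serverless_monthly_cost(requests, avg_duration_ms, memory_gb)
    return "serverless" if cost < VM_MONTHLY_COST else "vm"
```

With these assumed prices, 2 million requests of 200 ms at 0.5 GB cost about 3.74 per month, well below the assumed 60 for the VMs, while 50 million such requests cost about 93.50, at which point the always-on option becomes cheaper.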

Edge Computing

Edge computing addresses the latency challenges associated with cloud-based applications. By processing data closer to the source (the “edge” of the network), edge computing reduces latency and improves responsiveness. This is particularly crucial for applications requiring real-time processing, such as IoT devices, autonomous vehicles, and augmented reality experiences. Imagine a smart city application that relies on real-time data from numerous sensors. Edge computing allows this data to be processed locally, reducing the reliance on a centralized cloud infrastructure and minimizing delays. This results in faster response times and reduced bandwidth consumption, and because raw data can stay on local devices, it can also limit how much sensitive data travels over the network. Companies deploying IoT solutions, for example, often find edge computing essential for managing the large volumes of data generated by connected devices.
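A common edge pattern is to aggregate raw readings locally and forward only a compact summary plus any anomalies to the central cloud, which is where the bandwidth and latency savings come from. The sketch below illustrates that idea for one window of sensor readings; the function name, the fixed threshold, and the summary fields are assumptions for illustration, not a particular edge platform’s API.

```python
from statistics import mean


def summarize_at_edge(readings: list, threshold: float) -> dict:
    """Reduce one window of raw sensor readings to a compact summary.

    Only this summary (including any readings above the threshold) would be
    forwarded to the central cloud, instead of every raw reading.
    """
    if not readings:
        raise ValueError("reading window is empty")
    anomalies = [r for r in readings if r > threshold]
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        "anomalies": anomalies,
    }
```

For the window `[20.0, 22.0, 24.0, 34.0]` with a threshold of 30, the node would send one small record (count 4, mean 25.0, max 34.0, one anomaly) rather than every raw reading, and the anomaly could trigger a local action immediately, without a round trip to the cloud.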

Impact on Businesses

These emerging trends offer significant advantages to businesses of all sizes. Serverless computing empowers developers to build and deploy applications faster and more cost-effectively, while edge computing enables the development of real-time, responsive applications. The combined effect is increased agility, improved operational efficiency, and enhanced customer experience. Businesses can leverage these technologies to innovate faster, respond to market changes more quickly, and ultimately gain a competitive edge. For example, a retail company might use serverless functions to process customer orders in real time, helping to speed up fulfillment and improve customer satisfaction. Similarly, a manufacturing company could use edge computing to monitor equipment performance and predict potential failures, minimizing downtime and improving productivity.

Innovative Applications of Cloud Server Infrastructure

The combination of serverless computing, edge computing, and other advancements is fostering innovation across various sectors. Artificial intelligence (AI) and machine learning (ML) are significantly benefiting from cloud infrastructure. AI-powered applications, such as image recognition, natural language processing, and predictive analytics, often require significant computing power and scalability, which cloud platforms readily provide. Furthermore, the ability to process data at the edge enables real-time AI applications in scenarios like autonomous driving or medical diagnosis. Another example is the rise of cloud-based gaming, which relies heavily on scalable cloud infrastructure to deliver high-quality gaming experiences to millions of users simultaneously. The use of serverless functions allows developers to focus on game logic and user experience while the cloud provider manages the underlying infrastructure.

Data Storage and Management in Cloud Infrastructure

Effective data storage and management are critical components of a successful cloud infrastructure strategy. The right approach ensures data accessibility, security, and cost-effectiveness while supporting business operations and growth. Choosing the appropriate storage type and implementing robust management practices are paramount.

Data storage in the cloud offers a wide range of options, each with its own strengths and weaknesses, catering to diverse application needs and data characteristics. Understanding these differences is crucial for optimizing performance and minimizing costs.

Cloud Storage Options

Cloud providers offer various storage services designed for different data types and access patterns. These options allow for tailored solutions based on specific requirements, balancing performance, cost, and scalability.

- Object Storage: Ideal for unstructured data like images, videos, and backups. Data is stored as objects with metadata, accessed via APIs. Examples include Amazon S3, Azure Blob Storage, and Google Cloud Storage. This type is highly scalable and cost-effective for large datasets.

- Block Storage: Provides raw storage capacity that is typically attached to virtual machines (VMs) as virtual disks. Think of it as a traditional hard drive, but virtualized. Examples include Amazon EBS, Azure Managed Disks, and Google Compute Engine Persistent Disks. This is commonly used for operating systems and applications requiring high performance and low latency.

- File Storage: Offers shared file systems accessible by multiple users and applications simultaneously. This is analogous to a network file share, but in the cloud. Examples include Amazon EFS, Azure Files, and Google Cloud Filestore. It is suitable for collaborative environments and applications requiring shared file access.
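The object storage model (a flat namespace of keys, each mapping to a whole object with metadata, accessed through an API) can be illustrated with a toy in-memory model. This is a hypothetical teaching sketch, not a client for Amazon S3, Azure Blob Storage, or Google Cloud Storage; those services are accessed through their own SDKs and REST APIs.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class StoredObject:
    data: bytes
    metadata: Dict[str, str] = field(default_factory=dict)


class InMemoryObjectStore:
    """Toy model of object-storage semantics, not a real cloud client."""

    def __init__(self) -> None:
        # bucket name -> {object key -> object}; keys form a flat namespace
        self._buckets: Dict[str, Dict[str, StoredObject]] = {}

    def put(self, bucket: str, key: str, data: bytes,
            metadata: Optional[Dict[str, str]] = None) -> None:
        # A write stores the whole object, replacing any previous version;
        # unlike a block device, there is no in-place update of a byte range
        self._buckets.setdefault(bucket, {})[key] = StoredObject(data, dict(metadata or {}))

    def get(self, bucket: str, key: str) -> StoredObject:
        # Raises KeyError if the bucket or key does not exist
        return self._buckets[bucket][key]

    def list_keys(self, bucket: str, prefix: str = "") -> List[str]:
        # "Folders" are only key prefixes such as "images/"
        return sorted(k for k in self._buckets.get(bucket, {}) if k.startswith(prefix))
```

The whole-object write and prefix-based listing are what make object storage easy to scale for images, videos, and backups, and also why it is a poor fit for workloads such as databases that rewrite small parts of a file, which is where block storage comes in.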

Data Security and Management Methods

Robust security measures are essential for protecting data stored in the cloud. A multi-layered approach encompassing various techniques is necessary to mitigate risks and ensure data integrity.

- Access Control: Implementing granular access control lists (ACLs) and role-based access control (RBAC) restricts access to sensitive data based on user roles and permissions. This limits the potential impact of breaches.

- Data Encryption: Encrypting data both in transit (using HTTPS/TLS) and at rest (using server-side or client-side encryption) protects data from unauthorized access even if the underlying storage or network traffic is exposed. Major cloud providers offer encryption at rest with either provider-managed keys or customer-managed keys, typically held in a key management service.

- Data Loss Prevention (DLP): DLP tools monitor data movement and identify sensitive information leaving the cloud environment without authorization. This helps prevent accidental or malicious data leaks.

- Regular Backups and Recovery: Implementing regular backups to a separate storage location (geographically dispersed if possible) ensures data availability in case of failures or disasters. Testing the recovery process is crucial.
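Role-based access control reduces to a mapping from roles to permitted actions plus a deny-by-default check. The sketch below shows that core logic; the role names and `service:action` permission strings are hypothetical, and real cloud IAM systems add resource scoping, conditions, and explicit deny rules on top of it.

```python
from typing import Dict, Iterable, Set

# Hypothetical roles mapped to the actions they permit
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"storage:read"},
    "analyst": {"storage:read", "reports:write"},
    "admin": {"storage:read", "storage:write", "storage:delete", "reports:write"},
}


def is_allowed(user_roles: Iterable[str], action: str) -> bool:
    """Deny by default: allow only if at least one assigned role grants the action."""
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)
```

Because unknown roles and users with no roles get nothing, a misconfigured account fails closed, which limits the impact of a compromised credential to that role’s permissions.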

Data Lifecycle Management

Effective data lifecycle management (DLM) involves managing data throughout its entire lifecycle, from creation to archiving and eventual deletion. This optimizes storage costs and ensures compliance with regulations.

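- Data Archiving: Moving less frequently accessed data to cheaper storage tiers (such as Amazon S3 Glacier, the Azure Blob Storage Archive tier, or the Archive storage class in Google Cloud Storage) reduces overall storage costs. Retrieval from archive tiers is slower and usually costs more, so they suit data that is rarely read. This process often involves automated workflows triggered by data age or access patterns.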

- Data Retention Policies: Establishing clear data retention policies ensures compliance with legal and regulatory requirements. These policies dictate how long data is kept and what happens after the retention period expires.

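- Data Deletion: Securely deleting data when it’s no longer needed minimizes the risk of data breaches and ensures compliance. Because cloud customers cannot overwrite the provider’s physical media, secure deletion typically relies on the provider’s media sanitization processes or on crypto-shredding: deleting the encryption keys so that any remaining ciphertext becomes unreadable.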
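Archiving and retention rules are usually expressed as age-based transitions, which major cloud providers let you configure as lifecycle policies on a bucket or container. The sketch below evaluates such a rule for a single object; the 30-day archive and 365-day deletion thresholds are hypothetical, and in practice they would be derived from the retention policy and applied by the provider’s lifecycle feature rather than by custom code.

```python
from datetime import date

# Hypothetical policy values; real ones come from the retention policy
ARCHIVE_AFTER_DAYS = 30   # move to an archive tier after 30 days
DELETE_AFTER_DAYS = 365   # delete once the retention period has passed


def lifecycle_action(created: date, today: date) -> str:
    """Return the action an age-based lifecycle rule would take for one object."""
    age_days = (today - created).days
    if age_days >= DELETE_AFTER_DAYS:
        return "delete"
    if age_days >= ARCHIVE_AFTER_DAYS:
        return "archive"
    return "keep"
```

Checking the deletion threshold first matters: an object old enough to delete is also old enough to archive, and the rule should return the terminal action.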

Monitoring and Performance Optimization of Cloud Server Infrastructure

Effective monitoring and optimization are crucial for ensuring the smooth operation and optimal performance of cloud server infrastructure. Proactive monitoring allows for early identification of potential issues, preventing disruptions and minimizing downtime, while performance optimization ensures efficient resource utilization and cost savings. This section will explore key performance indicators (KPIs), optimization techniques, and a sample monitoring dashboard.

Key Performance Indicators (KPIs) for Monitoring Cloud Server Infrastructure

Understanding key performance indicators is fundamental to effective cloud infrastructure monitoring. These metrics provide insights into the health, performance, and resource utilization of your cloud environment. By regularly tracking these KPIs, administrators can identify bottlenecks, predict potential problems, and make informed decisions to optimize performance and resource allocation.

Critical KPIs often include:

- CPU Utilization: The percentage of CPU capacity being used. High sustained CPU utilization indicates potential bottlenecks and the need for scaling or optimization.

- Memory Utilization: The percentage of RAM being used. High memory utilization can lead to slowdowns and application crashes. Monitoring this metric helps in identifying memory leaks or the need for increased RAM allocation.

- Disk I/O: The rate of data read and write operations on storage devices. High disk I/O can indicate slow database queries or inefficient data access patterns.

- Network Latency: The time it takes for data to travel between different points in the network. High latency can significantly impact application performance, especially for applications that rely on real-time data exchange.

- Application Response Time: The time it takes for an application to respond to a user request. Slow response times indicate performance bottlenecks and negatively affect user experience.

- Error Rates: The frequency of errors or exceptions encountered by applications or system components. High error rates indicate potential issues requiring immediate attention.

- Uptime: The percentage of time a system or application is operational. High uptime is a crucial indicator of system reliability and stability.
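Several of these KPIs are simple ratios over raw counts. The sketch below computes uptime and error rate, plus the downtime budget implied by an uptime target, which is a useful way to read figures like 99.9%. The function names are illustrative; a monitoring service would normally compute these from collected metrics.

```python
def uptime_percent(total_minutes: float, downtime_minutes: float) -> float:
    """Share of the period during which the service was available."""
    return 100 * (total_minutes - downtime_minutes) / total_minutes


def error_rate_percent(errors: int, requests: int) -> float:
    """Failed requests as a percentage of all requests (0 if there was no traffic)."""
    return 100 * errors / requests if requests else 0.0


def downtime_budget_minutes(target_percent: float, total_minutes: float) -> float:
    """Maximum downtime allowed by an uptime target over the period."""
    return total_minutes * (100 - target_percent) / 100


MINUTES_IN_30_DAYS = 30 * 24 * 60  # 43,200 minutes
```

Over a 30-day month, a 99.9% target allows about 43.2 minutes of downtime, while 99.99% allows only about 4.3 minutes, which is why each additional "nine" is much harder to achieve.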

Techniques for Optimizing the Performance of Cloud-Based Applications

Performance optimization involves a multifaceted approach aimed at improving application speed, responsiveness, and resource utilization. Strategies range from code-level optimizations to infrastructure adjustments.

Several techniques are commonly employed:

- Code Optimization: Improving the efficiency of application code to reduce processing time and resource consumption. This includes techniques such as code profiling, algorithm optimization, and database query optimization.

- Caching: Storing frequently accessed data in a temporary storage location to reduce the time it takes to retrieve the data. Caching can significantly improve application response times.

- Content Delivery Network (CDN): Distributing static content (images, CSS, JavaScript) across multiple servers geographically closer to users. This reduces latency and improves website loading times.

- Load Balancing: Distributing incoming traffic across multiple servers to prevent any single server from becoming overloaded. This ensures high availability and consistent performance.

- Database Optimization: Optimizing database queries, indexing, and schema design to improve database performance. This includes techniques such as query optimization, schema normalization, and the use of database caching.

- Vertical and Horizontal Scaling: Adjusting the resources allocated to individual servers (vertical scaling) or adding more servers to the infrastructure (horizontal scaling) to meet changing demand.
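Caching is commonly applied as a cache-aside pattern: the application checks the cache first and, on a miss or an expired entry, loads from the slower source (such as a database) and stores the result with a time-to-live (TTL). The following in-process sketch illustrates that pattern; production systems more often use a shared cache such as Redis or Memcached so that all application instances see the same entries. The `TTLCache` class and its injectable `clock` parameter are hypothetical, the latter included so expiry can be tested without sleeping.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Minimal in-process cache-aside helper whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_load(self, key: str, loader: Callable[[str], Any]) -> Any:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]  # hit: entry is still fresh
        value = loader(key)  # miss or expired: read from the slower source
        self._entries[key] = (now + self.ttl, value)
        return value
```

The TTL bounds how stale a cached value can be, so choosing it is a trade-off between freshness and the load taken off the backing store.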

Sample Monitoring Dashboard

A comprehensive monitoring dashboard provides a centralized view of key metrics and their thresholds. This allows for quick identification of performance issues and facilitates proactive intervention.

A simplified example is shown in the table below:

| Metric | Current Value | Alert Threshold | Status |
|---|---|---|---|
| CPU Utilization | 25% | > 80% | OK |
| Memory Utilization | 50% | > 90% | OK |
| Disk I/O | 10 MB/s | > 50 MB/s | OK |
| Network Latency | 20 ms | > 100 ms | OK |
| Application Response Time | 150 ms | > 500 ms | OK |
| Error Rate | 0.1% | > 1% | OK |
| Uptime | 99.99% | < 99.9% | OK |
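The Status column in a dashboard like this is derived by comparing each current value with its threshold. The comparison direction differs by metric: most metrics alert when they rise above the threshold, while uptime alerts when it falls below. A minimal sketch of that evaluation, with hypothetical metric keys and the threshold values from the sample dashboard:

```python
from typing import Dict, Tuple

# metric -> (threshold, direction); "max" alerts above the threshold,
# "min" alerts below it. Metric keys are hypothetical names for the table rows.
RULES: Dict[str, Tuple[float, str]] = {
    "cpu_utilization_pct": (80.0, "max"),
    "memory_utilization_pct": (90.0, "max"),
    "disk_io_mb_per_s": (50.0, "max"),
    "network_latency_ms": (100.0, "max"),
    "response_time_ms": (500.0, "max"),
    "error_rate_pct": (1.0, "max"),
    "uptime_pct": (99.9, "min"),
}


def status(metric: str, value: float) -> str:
    """Return "OK" or "ALERT" for one metric reading."""
    threshold, direction = RULES[metric]
    breached = value > threshold if direction == "max" else value < threshold
    return "ALERT" if breached else "OK"


def evaluate(current: Dict[str, float]) -> Dict[str, str]:
    """Compute the Status column for every metric in a snapshot."""
    return {metric: status(metric, value) for metric, value in current.items()}
```

Storing the direction alongside the threshold keeps the rule table declarative, so adding a new metric does not require new comparison code.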

Q&A

What is the difference between IaaS, PaaS, and SaaS?

IaaS (Infrastructure as a Service) provides virtualized computing resources like servers, storage, and networking. PaaS (Platform as a Service) offers a platform for developing and deploying applications, including tools and services. SaaS (Software as a Service) delivers software applications over the internet, eliminating the need for local installation.

How can I choose the right cloud provider for my needs?

Consider factors such as budget, required services (compute, storage, databases), security compliance needs, geographic location, and provider reputation. A thorough needs assessment and comparison of provider offerings is crucial.

What are the common security threats in cloud environments?

Common threats include data breaches, denial-of-service attacks, malware infections, misconfigurations, and insider threats. Implementing strong security practices, including access controls, encryption, and regular security audits, is vital.

How much does cloud server infrastructure typically cost?

Cloud costs vary significantly depending on usage, chosen services, and provider. Pay-as-you-go models are common, allowing for flexible scaling and cost control. Careful planning and monitoring are essential for cost optimization.