Defining File Cloud Servers

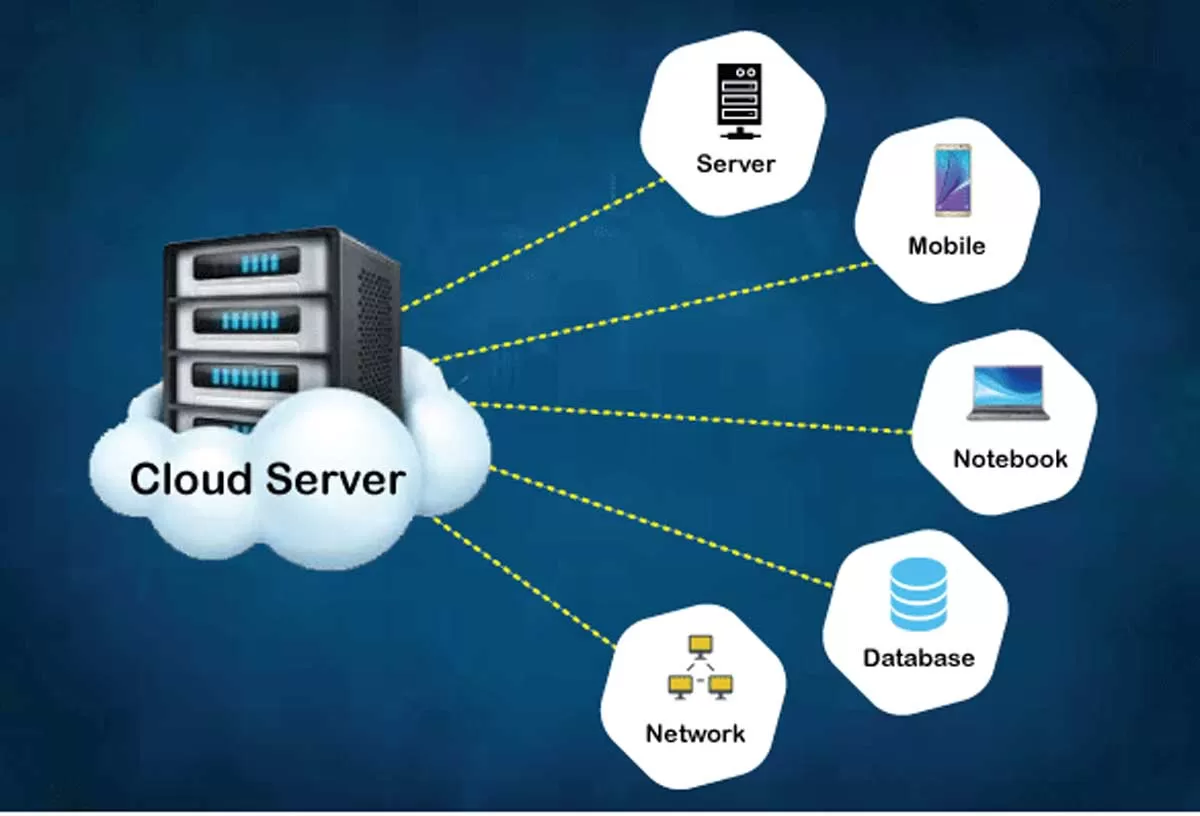

File cloud servers represent a fundamental shift in how we store and access data, offering a flexible and scalable alternative to traditional storage methods. They provide centralized, remote storage accessible via the internet, enabling users to share, collaborate on, and manage files from various devices and locations. This accessibility significantly enhances productivity and collaboration, particularly in distributed teams or organizations.

File cloud servers offer a range of core functionalities beyond simple storage. These include features like version control (allowing users to revert to previous versions of files), access control (managing who can view and modify files), synchronization (keeping files consistent across multiple devices), and data backup and recovery. Robust security measures, such as encryption and access permissions, are also crucial components of a well-designed file cloud server system.

Types of File Cloud Servers

The choice of file cloud server depends largely on the specific needs and security requirements of an organization or individual. Three primary types exist, each offering distinct advantages and disadvantages.

- Public Cloud Servers: These servers are hosted by third-party providers (e.g., Dropbox, Google Drive, OneDrive) and are accessible via the internet. They offer scalability, cost-effectiveness (often through subscription or pay-as-you-go pricing), and accessibility from anywhere with an internet connection. However, they raise concerns regarding data security and privacy, as the data is stored on a provider’s infrastructure.

- Private Cloud Servers: These servers are hosted within an organization’s own data center or infrastructure. They offer greater control over data security and privacy, ensuring compliance with internal regulations and policies. However, they require significant upfront investment in hardware and IT expertise for maintenance and management. Scalability can also be a challenge compared to public cloud solutions.

- Hybrid Cloud Servers: This approach combines elements of both public and private cloud servers. Sensitive data might be stored on a private server, while less critical data is stored on a public cloud. This hybrid model offers a balance between cost-effectiveness, scalability, and security, allowing organizations to tailor their storage solution to their specific needs.

Comparison with Traditional File Storage

Traditional file storage methods, such as local hard drives or network-attached storage (NAS) devices, offer direct control over data and are generally less expensive for smaller amounts of data. However, they lack the scalability, accessibility, and collaboration features of cloud servers. Access is typically limited to the local network where the device resides unless remote access (for example, through a VPN) is configured, which makes collaboration difficult and data backup and recovery more complex. Cloud servers, in contrast, offer remote access, seamless collaboration, and robust backup and recovery mechanisms, though they introduce dependence on internet connectivity and potential security concerns related to third-party providers. For example, a small business using a NAS device might find it challenging to share files with remote clients or ensure data redundancy in case of hardware failure, whereas a cloud-based solution readily addresses these limitations.

Security Aspects of File Cloud Servers

The security of data stored on file cloud servers is paramount, given the sensitive nature of information often entrusted to these services. Robust security measures are crucial to protect against various threats and ensure data confidentiality, integrity, and availability. This section will explore common security threats, best practices for mitigation, data encryption methods, and a sample security protocol design.

Common Security Threats Associated with File Cloud Servers

File cloud servers face a multitude of security threats, ranging from external attacks to internal vulnerabilities. These threats can lead to data breaches, unauthorized access, and service disruptions, potentially resulting in significant financial and reputational damage. Understanding these threats is the first step towards effective security implementation.

- Data breaches: Unauthorized access to stored data, often through exploiting vulnerabilities in the server’s software or network infrastructure, leading to data theft or leakage.

- Malware infections: Malicious software can infect the server, compromising data integrity and potentially spreading to connected devices.

- Insider threats: Malicious or negligent actions by employees or authorized users with access to the server can result in data loss or compromise.

- Denial-of-service (DoS) attacks: Overwhelming the server with traffic, rendering it inaccessible to legitimate users.

- Phishing attacks: Tricking users into revealing their credentials, granting attackers access to the server.

- Weak passwords and authentication: Inadequate password policies and authentication mechanisms can make it easier for attackers to gain unauthorized access.

- Unpatched software vulnerabilities: Outdated software with known security flaws can be exploited by attackers.

Best Practices for Securing Data Stored on File Cloud Servers

Implementing a multi-layered security approach is essential to protect data stored on file cloud servers. This involves a combination of technical and administrative controls.

- Strong authentication and authorization: Implementing multi-factor authentication (MFA) and role-based access control (RBAC) to restrict access to authorized personnel only.

- Regular software updates and patching: Keeping all software components up-to-date with the latest security patches to address known vulnerabilities.

- Data encryption: Encrypting data both in transit (using HTTPS) and at rest (using encryption algorithms like AES) to protect against unauthorized access.

- Regular security audits and penetration testing: Conducting regular security assessments to identify and address vulnerabilities.

- Intrusion detection and prevention systems (IDS/IPS): Monitoring network traffic for malicious activity and blocking suspicious connections.

- Data loss prevention (DLP) measures: Implementing controls to prevent sensitive data from leaving the server without authorization.

- Robust backup and recovery plans: Maintaining regular backups of data and having a plan in place to restore data in case of a disaster.

Data Encryption Methods Used in File Cloud Servers

Data encryption is a critical component of file cloud server security. Several methods are employed to protect data confidentiality and integrity.

- AES (Advanced Encryption Standard): A widely used symmetric encryption algorithm offering strong security. AES-256, with a 256-bit key, is considered highly secure.

- RSA (Rivest-Shamir-Adleman): An asymmetric encryption algorithm commonly used for key exchange and digital signatures.

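- TLS/SSL (Transport Layer Security/Secure Sockets Layer): Protocols that provide secure communication over a network, encrypting data in transit. SSL and the early TLS versions (1.0 and 1.1) are deprecated because of known weaknesses, so current deployments should use TLS 1.2 or TLS 1.3.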
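As a minimal sketch using Python's standard `ssl` module, a client of a file cloud server can insist on certificate verification and refuse SSL and the deprecated early TLS versions. The host name passed to `open_tls_connection` is a placeholder, and the TLS 1.2 floor is an assumption; a stricter policy, such as the TLS 1.3 requirement in the hypothetical design that follows, would set `ssl.TLSVersion.TLSv1_3` instead.

```python
import socket
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Client-side TLS context that verifies certificates and refuses old protocols."""
    # create_default_context() turns on certificate verification and hostname checks.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse SSLv3, TLS 1.0 and TLS 1.1; TLS 1.2 and 1.3 remain allowed.
    # (Set ssl.TLSVersion.TLSv1_3 here to require TLS 1.3 only.)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def open_tls_connection(host: str, port: int = 443) -> ssl.SSLSocket:
    """Connect to a (hypothetical) file server host over TLS."""
    ctx = make_client_tls_context()
    raw = socket.create_connection((host, port), timeout=10)
    # server_hostname enables SNI and is checked against the server certificate.
    return ctx.wrap_socket(raw, server_hostname=host)
```

After connecting, `conn.version()` reports the negotiated protocol, which is a quick way to confirm that an old version was not accepted.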

Security Protocol Design for a Hypothetical File Cloud Server

This design incorporates several security best practices discussed above.

- Authentication: Users authenticate using MFA (e.g., password + one-time code from an authenticator app).

- Authorization: RBAC is implemented to restrict access based on user roles and permissions.

- Data Encryption: AES-256 is used for encrypting data at rest, while TLS 1.3 is used for secure data transmission.

- Regular Security Audits: Automated vulnerability scans are performed weekly, with manual penetration testing conducted quarterly.

- Intrusion Detection: An IDS/IPS system monitors network traffic for suspicious activity, alerting administrators to potential threats.

- Data Backup and Recovery: Data is backed up daily to an offsite location, with a disaster recovery plan in place.

- Logging and Monitoring: All server activities are logged and monitored for anomalies.
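The one-time codes that authenticator apps generate for the MFA step are usually time-based one-time passwords (TOTP, RFC 6238), which are HMAC-based one-time passwords (HOTP, RFC 4226) computed over 30-second time windows. A minimal standard-library sketch follows; the tolerance of one time step either side in `verify_totp` is an assumption, and a real server would also need rate limiting and protection against reusing a code.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) with HMAC-SHA1."""
    msg = struct.pack(">Q", counter)                    # 8-byte big-endian counter
    digest = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F                          # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP over the number of `step`-second windows since the epoch."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1, digits: int = 6) -> bool:
    """Accept a code from the current window or up to `window` steps either side (clock drift)."""
    now = time.time() if at is None else at
    for drift in range(-window, window + 1):
        t = now + drift * step
        if t < 0:
            continue  # no time windows before the epoch
        if hmac.compare_digest(totp(secret, t, step, digits), submitted):
            return True
    return False
```

In practice the shared secret is provisioned to the app as a base32 string (typically via a QR code) and can be decoded with `base64.b32decode` before being passed to these functions.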

Scalability and Performance of File Cloud Servers

File cloud servers must be designed to handle significant fluctuations in data volume and user demand. Their ability to scale efficiently and maintain high performance is crucial for user satisfaction and business continuity. This section will explore various scaling strategies and performance optimization techniques employed in modern file cloud server architectures. We will also compare the performance characteristics of different architectural approaches.

Scalability in file cloud servers refers to the system’s capacity to handle increasing amounts of data and user traffic without compromising performance. This is achieved through various strategies, often involving a combination of hardware and software solutions. Performance, on the other hand, encompasses the speed and efficiency with which the server processes requests, delivers files, and manages overall system operations. Optimizing performance requires a multifaceted approach targeting both infrastructure and application-level improvements.

Methods for Scaling File Cloud Servers

Several methods exist for scaling file cloud servers to accommodate growing data volumes and user demands. These methods are often employed in conjunction to achieve optimal results.

- Horizontal Scaling: This involves adding more servers to the existing infrastructure. Each server handles a portion of the overall workload, which distributes the load and reduces dependence on any single machine. For example, a file cloud server initially running on one server might scale horizontally by adding servers, each storing a subset of the total data. Storage capacity and processing power grow roughly in proportion to the number of servers added, although coordination overhead usually keeps the gain from being perfectly linear.

- Vertical Scaling: This involves upgrading the existing server hardware, such as increasing RAM, CPU power, or storage capacity. While simpler to implement than horizontal scaling, vertical scaling has limitations as there is a physical limit to the resources a single server can handle. Imagine a file cloud server initially using a single server with limited storage. Vertical scaling would involve upgrading that server to one with a larger hard drive and faster processors.

- Distributed File Systems: These systems spread data across multiple servers, providing high availability and scalability. Popular examples include the Hadoop Distributed File System (HDFS) and Ceph. Files are split into blocks or objects, and each piece is replicated (or protected with erasure coding) across several servers, so if one server fails the data remains accessible from the others. This approach also facilitates horizontal scaling, since new servers can be added to the cluster as capacity needs grow.
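One way to decide which servers hold which files when scaling horizontally is consistent hashing with replication. This is a generic technique sketched under our own assumptions (100 virtual nodes per server, 3 replicas, SHA-256 ring positions, hypothetical server names); it is not how HDFS or Ceph place data, since HDFS tracks block locations in its NameNode and Ceph computes placement with its CRUSH algorithm.

```python
import bisect
import hashlib


class ConsistentHashRing:
    """Places file keys on servers so that adding a server relocates only a fraction of keys."""

    def __init__(self, servers, vnodes: int = 100):
        self.vnodes = vnodes      # virtual nodes per server smooth out the distribution
        self._ring = []           # sorted list of (position, server) points
        for server in servers:
            self.add_server(server)

    @staticmethod
    def _hash(value: str) -> int:
        # First 8 bytes of SHA-256 as an unsigned integer position on the ring.
        return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")

    def add_server(self, server: str) -> None:
        for i in range(self.vnodes):
            bisect.insort(self._ring, (self._hash(f"{server}#{i}"), server))

    def remove_server(self, server: str) -> None:
        self._ring = [point for point in self._ring if point[1] != server]

    def servers_for(self, key: str, replicas: int = 3) -> list:
        """Walk clockwise from the key's position and collect distinct servers."""
        if not self._ring:
            return []
        wanted = min(replicas, len({s for _, s in self._ring}))
        i = bisect.bisect(self._ring, (self._hash(key),))
        chosen = []
        while len(chosen) < wanted:
            server = self._ring[i % len(self._ring)][1]
            if server not in chosen:
                chosen.append(server)
            i += 1
        return chosen
```

The first server returned acts as the primary and the others hold replicas, so a single failure leaves every file readable. When a server joins, only the keys whose ring position now falls on one of its points change owner, which is what keeps rebalancing cheap.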

Methods for Optimizing File Cloud Server Performance

Optimizing the performance of a file cloud server requires a multi-pronged approach addressing various aspects of the system.

- Caching: Implementing caching mechanisms at various levels (e.g., browser, server, CDN) reduces the load on the server and improves response times. Frequently accessed files are stored in a cache closer to the user, reducing latency.

- Content Delivery Networks (CDNs): CDNs distribute content geographically, allowing users to access files from servers closer to their location, thereby minimizing latency and improving download speeds. For example, a CDN would replicate popular files on servers across different regions, ensuring that users in different parts of the world access the files from the nearest server.

- Load Balancing: Distributing incoming requests across multiple servers prevents any single server from becoming overloaded. Load balancers route each request to a healthy server with available capacity, using strategies such as round-robin or least-connections. Load balancing is crucial for maintaining consistent performance even during peak demand.

- Database Optimization: Efficient database design and query optimization are essential for fast data retrieval. Proper indexing and query optimization techniques can significantly improve the speed of data access operations.
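Server-side caching of frequently accessed files can be sketched as a least-recently-used (LRU) cache bounded by total bytes. The `loader` callback is a hypothetical hook standing in for whatever reads from backend storage, and LRU is only one common eviction policy.

```python
from collections import OrderedDict
from typing import Callable


class LRUFileCache:
    """Keeps recently used file contents in memory, evicting the least recently used first."""

    def __init__(self, max_bytes: int):
        self.max_bytes = max_bytes
        self.used = 0
        self._items: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, path: str, loader: Callable[[str], bytes]) -> bytes:
        if path in self._items:
            self._items.move_to_end(path)        # mark as most recently used
            self.hits += 1
            return self._items[path]
        self.misses += 1
        data = loader(path)                      # fall back to backend storage
        if len(data) <= self.max_bytes:          # files larger than the cache are not cached
            self._items[path] = data
            self.used += len(data)
            while self.used > self.max_bytes:
                _, evicted = self._items.popitem(last=False)  # drop least recently used
                self.used -= len(evicted)
        return data
```

The hit and miss counters make it easy to check whether the cache size is well chosen: a low hit ratio under real traffic suggests the working set does not fit.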

Comparison of File Cloud Server Architectures

Different architectures offer varying levels of scalability and performance. The optimal choice depends on specific requirements and constraints.

| Architecture | Scalability | Performance | Cost |
|---|---|---|---|
| Single Server | Limited | Moderate | Low |
| Multiple Servers with Load Balancer | Good | High | Medium |
| Distributed File System | Excellent | Very High | High |

A single-server architecture is suitable for small-scale deployments with limited data and user traffic. As the scale increases, a multi-server architecture with a load balancer becomes necessary to maintain performance. For extremely large-scale deployments with massive data volumes and high user traffic, a distributed file system offers the best scalability and performance, albeit at a higher cost.
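The load balancer in a multi-server architecture can use several routing strategies. A minimal least-connections sketch, which sends each request to the healthy backend with the fewest in-flight requests, is shown below; backend names are hypothetical, and health checking is reduced to explicit `mark_down` and `mark_up` calls.

```python
class LeastConnectionsBalancer:
    """Routes each request to the healthy backend with the fewest in-flight requests."""

    def __init__(self, backends):
        self.active = {b: 0 for b in backends}   # backend -> in-flight request count
        self.healthy = set(backends)

    def acquire(self) -> str:
        candidates = [b for b in self.active if b in self.healthy]
        if not candidates:
            raise RuntimeError("no healthy backends")
        # min() returns the first minimum, so ties go to the earliest listed backend.
        backend = min(candidates, key=lambda b: self.active[b])
        self.active[backend] += 1
        return backend

    def release(self, backend: str) -> None:
        """Call when a request finishes."""
        self.active[backend] -= 1

    def mark_down(self, backend: str) -> None:
        self.healthy.discard(backend)

    def mark_up(self, backend: str) -> None:
        self.healthy.add(backend)
```

Least-connections suits file servers because uploads and downloads vary widely in duration; plain round-robin can pile long transfers onto one backend.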

Cost and Management of File Cloud Servers

Choosing a file cloud server involves careful consideration of various cost factors. Understanding these costs and implementing effective management strategies are crucial for optimizing expenses and ensuring long-term financial viability. This section details the cost components and provides strategies for cost optimization.

Cost Factors Associated with File Cloud Servers

The total cost of ownership (TCO) for a file cloud server encompasses several key elements. These costs can vary significantly depending on the provider, chosen plan, and usage patterns. Understanding these factors allows for informed decision-making and budget planning.

- Storage Capacity: The amount of storage space required directly impacts cost. Larger storage needs naturally lead to higher monthly or annual fees.

- Data Transfer: The volume of data uploaded and downloaded influences the cost, particularly for large datasets or frequent file transfers. Exceeding data transfer limits often incurs extra charges.

- Number of Users: Many providers charge per user, with pricing tiers varying based on the number of accounts needing access to the cloud server.

- Features and Functionality: Advanced features such as version control, collaboration tools, and security enhancements typically come at an added cost.

- Support and Maintenance: The level of technical support provided can affect pricing. Higher levels of support, including dedicated account managers or priority response times, are usually more expensive.

- Compliance and Regulations: Meeting specific industry compliance requirements (e.g., HIPAA, GDPR) may necessitate additional security measures and audits, resulting in higher costs.
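The main cost factors above combine into a simple monthly estimate. The sketch below uses made-up rates purely for illustration (they are not any provider's prices) and ignores compliance work, feature add-ons, and tiered discounts.

```python
from dataclasses import dataclass


@dataclass
class PriceSheet:
    """Illustrative rates only; these are NOT any real provider's prices."""
    storage_per_gb_month: float
    egress_per_gb: float
    per_user_month: float
    free_egress_gb: float = 0.0
    support_month: float = 0.0   # flat fee for a support tier


def monthly_cost(p: PriceSheet, stored_gb: float, egress_gb: float, users: int) -> float:
    """Storage + billable data transfer + per-user seats + support, rounded to cents."""
    storage = stored_gb * p.storage_per_gb_month
    transfer = max(0.0, egress_gb - p.free_egress_gb) * p.egress_per_gb
    seats = users * p.per_user_month
    return round(storage + transfer + seats + p.support_month, 2)


if __name__ == "__main__":
    sheet = PriceSheet(storage_per_gb_month=0.02, egress_per_gb=0.09,
                       per_user_month=10.0, free_egress_gb=100, support_month=50.0)
    print(monthly_cost(sheet, stored_gb=2000, egress_gb=600, users=25))
```

With these hypothetical rates, 2,000 GB stored, 600 GB downloaded (100 GB free), and 25 users works out to 40 + 45 + 250 + 50 = 385 per month. In this example the per-user fee dominates, which is why the number of users often matters more than raw storage.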

Strategies for Managing and Optimizing Costs

Effective cost management is crucial for maintaining a financially sustainable file cloud server solution. Several strategies can help optimize costs without compromising performance or security.

- Right-sizing Storage: Regularly review storage usage to identify and eliminate unnecessary files. Employ data archiving strategies for less frequently accessed data to reduce storage costs.

- Optimizing Data Transfer: Minimize unnecessary data transfers by employing techniques such as data deduplication and compression. Consider using content delivery networks (CDNs) for geographically distributed users to reduce latency and transfer costs.

- Choosing the Right Pricing Model: Carefully evaluate different pricing models offered by providers (e.g., pay-as-you-go, subscription-based) to find the best fit for your anticipated usage patterns. Negotiate contracts for large-scale deployments to potentially secure discounted rates.

- Leveraging Free Tiers and Trials: Many providers offer free tiers or trial periods, allowing you to test the service and assess its suitability before committing to a paid plan.

- Monitoring and Reporting: Regularly monitor usage patterns and costs through the provider’s dashboards or reporting tools. This proactive approach allows for timely identification and resolution of cost anomalies.
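Deduplication can be sketched as content-addressed chunk storage: each chunk is stored once under its SHA-256 digest, so a second upload that shares chunks with an earlier file costs only the new bytes. Fixed-size 4 MiB chunks are an assumption; many systems use content-defined chunking instead, so that inserting bytes near the start of a file does not shift every later chunk boundary.

```python
import hashlib

CHUNK_SIZE = 4 * 1024 * 1024  # 4 MiB fixed-size chunks (an assumption)


class DedupStore:
    """Stores each unique chunk once, keyed by its SHA-256 digest."""

    def __init__(self, chunk_size: int = CHUNK_SIZE):
        self.chunk_size = chunk_size
        self.chunks = {}   # digest -> chunk bytes
        self.files = {}    # file name -> ordered list of chunk digests

    def put(self, name: str, data: bytes) -> int:
        """Store a file; return how many new bytes actually had to be stored."""
        new_bytes = 0
        digests = []
        for i in range(0, len(data), self.chunk_size):
            chunk = data[i:i + self.chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            if digest not in self.chunks:
                self.chunks[digest] = chunk
                new_bytes += len(chunk)
            digests.append(digest)
        self.files[name] = digests
        return new_bytes

    def get(self, name: str) -> bytes:
        return b"".join(self.chunks[d] for d in self.files[name])
```

The same digests also cut data transfer: a client can send the list of chunk hashes first and upload only the chunks the server reports as missing. Compressing chunks (for example with `zlib`) before storing them is a separate, complementary saving.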

Cost Comparison of File Cloud Server Providers

The following table provides a simplified comparison of pricing and features from different providers. Note that pricing can change, and these are examples based on commonly available plans at the time of writing. It’s crucial to consult each provider’s website for the most up-to-date information.

| Provider | Pricing Model | Storage Capacity (Example) | Features (Example) |
|---|---|---|---|
| Provider A | Subscription (monthly/annual) | 1TB – 100TB+ | Version control, user management, data encryption, 24/7 support |
| Provider B | Pay-as-you-go | Variable, based on usage | Basic storage, data transfer limits, limited support |
| Provider C | Tiered subscription | 50GB – Unlimited | Collaboration tools, advanced security options, robust API |
| Provider D | Hybrid model (subscription + pay-as-you-go) | Customizable | Flexible storage options, scalable infrastructure, enterprise-grade security |

Data Backup and Disaster Recovery

Data backup and disaster recovery (DR) are critical components of a robust file cloud server strategy. The potential for data loss due to hardware failure, cyberattacks, or natural disasters necessitates a comprehensive plan to ensure business continuity and minimize downtime. A well-defined backup and DR plan protects valuable data, maintains operational efficiency, and safeguards the organization’s reputation.

Data loss can lead to significant financial repercussions, including lost revenue, legal liabilities, and damage to brand reputation. Furthermore, restoring data from a compromised or outdated backup can be time-consuming and expensive, potentially impacting customer trust and business operations. Therefore, a proactive approach to data backup and DR is essential for any organization relying on a file cloud server.

Backup Methods for File Cloud Servers

Several methods exist for backing up data from file cloud servers, each with its own advantages and disadvantages. The choice of method depends on factors such as the size of the data, recovery time objectives (RTO), recovery point objectives (RPO), and budget.

- Local Backups: Data is copied to local storage devices, such as hard drives or tape drives, within the same data center. This method offers fast recovery times but is vulnerable to the same physical threats as the primary server.

- Remote Backups: Data is copied to a geographically separate location, often a different data center or cloud storage provider. This offers better protection against site-wide disasters but may have longer recovery times.

- Cloud-based Backups: Data is replicated to a cloud storage service. This provides scalability, cost-effectiveness, and offsite protection, but reliance on a third-party provider introduces a dependency on their service availability and security.

- Snapshot Backups: Creates a point-in-time copy of the data, allowing for quick restoration to a specific point in time. This method is often used in conjunction with other backup strategies.

Recovery Methods for File Cloud Servers

Effective data recovery relies on well-defined procedures and the appropriate tools. The recovery method should align with the chosen backup strategy and the organization’s RTO and RPO.

- Full System Restore: Reinstalls the entire operating system and applications, then restores the backed-up data. This is a time-consuming process but ensures a clean and consistent system.

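- Incremental Restore: Restores the most recent full backup and then applies every incremental backup taken since it, in order, because each incremental captures only the changes made since the previous backup. This avoids a complete system rebuild, but it requires a valid full backup and an unbroken chain of incrementals. (A differential backup, by contrast, captures all changes since the last full backup, so restoring needs only the full backup plus the latest differential.)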

- File-Level Restore: Allows for the restoration of individual files or folders, offering flexibility and speed for smaller recovery tasks.
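The restore order depends on how each backup was taken: an incremental backup holds the changes since the previous backup of any kind, while a differential backup holds all changes since the last full backup. The sketch below selects the backups needed for a point-in-time restore and replays them; the `Backup` record and its `changes` dictionary (path to content, `None` for a deletion) are simplified, hypothetical stand-ins for a real backup catalog.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Backup:
    kind: str        # "full", "incremental" or "differential"
    taken_at: int    # e.g. a Unix timestamp
    changes: Dict[str, Optional[str]] = field(default_factory=dict)  # path -> content, None = deleted


def restore_chain(backups: List[Backup], target_time: int) -> List[Backup]:
    """Select, in replay order, the backups needed to rebuild the state at target_time."""
    eligible = sorted((b for b in backups if b.taken_at <= target_time), key=lambda b: b.taken_at)
    fulls = [b for b in eligible if b.kind == "full"]
    if not fulls:
        raise ValueError("no full backup at or before the target time")
    chain = [fulls[-1]]
    later = [b for b in eligible if b.taken_at > chain[0].taken_at]
    differentials = [b for b in later if b.kind == "differential"]
    if differentials:
        chain.append(differentials[-1])  # newest differential covers everything since the full
    cutoff = chain[-1].taken_at
    # Every incremental after that point is replayed in order; losing one corrupts the result.
    chain += [b for b in later if b.kind == "incremental" and b.taken_at > cutoff]
    return chain


def replay(chain: List[Backup]) -> Dict[str, str]:
    """Apply each backup's changes on top of the previous state."""
    state: Dict[str, str] = {}
    for backup in chain:
        for path, content in backup.changes.items():
            if content is None:
                state.pop(path, None)
            else:
                state[path] = content
    return state
```

This makes the trade-off visible: incrementals keep each backup small but lengthen the restore chain, while differentials grow over time but keep the chain at two steps.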

Comprehensive Backup and Disaster Recovery Plan

A comprehensive backup and DR plan should incorporate several key elements:

- Risk Assessment: Identify potential threats to the file cloud server, including hardware failures, natural disasters, cyberattacks, and human error. This assessment should inform the design of the backup and DR strategy.

- Backup Strategy: Define the backup method (local, remote, cloud), backup frequency (daily, weekly, etc.), and retention policy (how long backups are kept). A 3-2-1 backup strategy (3 copies of data, on 2 different media, with 1 offsite copy) is a common best practice.

- Recovery Strategy: Outline the procedures for recovering data in the event of a disaster, including the steps to restore the server, applications, and data. This should include roles and responsibilities for each team member.

- Testing and Validation: Regularly test the backup and DR plan to ensure its effectiveness and identify any weaknesses. This includes performing full and partial restorations to verify data integrity and recovery times.

- Documentation: Maintain detailed documentation of the backup and DR plan, including procedures, contact information, and system configurations. This documentation should be readily accessible to all relevant personnel.
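The 3-2-1 rule from the backup strategy can be checked mechanically against an inventory of copies. In this sketch the `BackupCopy` fields and location names are hypothetical, and the production data is counted as one of the three copies, which is the usual reading of the rule.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class BackupCopy:
    location: str   # site name, e.g. "hq-dc" (hypothetical)
    media: str      # storage type, e.g. "disk", "tape", "object-storage"
    offsite: bool   # True if stored away from the primary site


def check_3_2_1(copies: List[BackupCopy]) -> List[str]:
    """Return the 3-2-1 violations; an empty list means the plan passes.
    `copies` includes the production copy of the data."""
    problems = []
    if len(copies) < 3:
        problems.append(f"only {len(copies)} copies of the data (need 3)")
    if len({c.media for c in copies}) < 2:
        problems.append("all copies use the same type of media (need 2)")
    if not any(c.offsite for c in copies):
        problems.append("no offsite copy (need 1)")
    return problems
```

Running such a check as part of the regular testing and validation step helps catch plans that drift out of compliance, for example when an offsite target is decommissioned.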

A well-defined backup and DR plan is not just a set of procedures; it’s a critical investment in business continuity and resilience.

Integration with Other Systems

Seamless integration with existing enterprise systems is crucial for the successful deployment of a file cloud server. Effective integration ensures data flows smoothly between the cloud storage and other applications, enhancing productivity and streamlining workflows. This section explores various methods and considerations for achieving this integration.

The integration of a file cloud server with other enterprise systems typically involves utilizing application programming interfaces (APIs) and established communication protocols. This allows different software applications to communicate and exchange data, enabling functionalities like automated file transfers, real-time synchronization, and centralized access control. Successful integration requires careful planning and consideration of the complexities of different IT environments.

API and Protocol Usage

Several APIs and protocols facilitate the integration of file cloud servers. RESTful APIs, known for their simplicity and widespread adoption, are commonly used for managing files, users, and permissions. WebDAV, an extension of HTTP for accessing and manipulating files on a remote server, offers a standardized way to interact with cloud storage. In addition, many cloud providers offer proprietary APIs tailored to their services that expose more advanced functionality. For example, the Amazon S3 API provides extensive control over object storage within the AWS ecosystem, while Microsoft’s Azure Blob Storage API offers similar capabilities within the Microsoft Azure cloud platform. The choice of API and protocol depends on factors such as the existing IT infrastructure, the required level of functionality, and the specific cloud provider selected.
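A WebDAV folder listing is a `PROPFIND` request with a `Depth` header (RFC 4918). The sketch below builds, but does not send, such a request with Python's standard `urllib.request`; the server URL is a placeholder, and the bearer-token header is an assumption, since WebDAV servers differ in how they authenticate (many use HTTP Basic authentication over TLS).

```python
import urllib.request

# Ask only for the properties needed to render a file listing.
PROPFIND_BODY = b"""<?xml version="1.0" encoding="utf-8"?>
<d:propfind xmlns:d="DAV:">
  <d:prop><d:getcontentlength/><d:getlastmodified/><d:resourcetype/></d:prop>
</d:propfind>"""


def build_propfind(url: str, token: str, depth: str = "1") -> urllib.request.Request:
    """Build a WebDAV PROPFIND request that lists a folder's immediate children."""
    return urllib.request.Request(
        url,
        data=PROPFIND_BODY,
        method="PROPFIND",
        headers={
            "Depth": depth,  # "0" = the resource itself, "1" = plus immediate children
            "Content-Type": "application/xml; charset=utf-8",
            "Authorization": f"Bearer {token}",  # auth scheme is server-specific
        },
    )
```

Passing the request to `urllib.request.urlopen()` would send it; a compliant server answers with `207 Multi-Status` and an XML body describing each resource's properties.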

Challenges of Integration in Complex IT Infrastructures

Integrating file cloud servers into complex IT infrastructures presents several challenges. Security concerns, such as ensuring data integrity and preventing unauthorized access, are paramount. Maintaining data consistency across different systems requires robust synchronization mechanisms and error handling capabilities. Compatibility issues between the file cloud server and legacy systems may necessitate custom integrations or middleware solutions. Furthermore, managing network connectivity, authentication, and authorization across multiple systems requires careful planning and coordination. For instance, integrating a cloud-based file server with an on-premises ERP system might involve configuring VPN connections, setting up delegated authorization with a framework such as OAuth 2.0, and establishing data transformation rules to ensure compatibility between different data formats. The integration and its subsequent maintenance may also require specialized skills, including expertise in different programming languages, APIs, and networking technologies.

User Access Control and Permissions

Robust user access control is paramount for any file cloud server, ensuring data security and maintaining user privacy. This involves implementing mechanisms to define who can access specific files and folders, and what actions they are permitted to perform. Effective access control prevents unauthorized data breaches and maintains the integrity of the stored information.

User access control and permissions are managed through a combination of authentication methods and authorization policies. Authentication verifies the identity of a user, while authorization determines what resources a user can access and what actions they can perform on those resources. These processes work together to secure data and ensure only authorized users have access to sensitive information.

User Authentication Methods

Several methods exist for verifying user identities. These methods vary in their security levels and implementation complexity. Choosing the right method depends on the security requirements and the technical capabilities of the system.

- Password-based authentication: This traditional method requires users to provide a username and password. While simple to implement, it is vulnerable to password cracking if weak passwords are used. Strong password policies, such as minimum length requirements and screening new passwords against lists of known-compromised passwords, help mitigate this risk; current NIST guidance (SP 800-63B) recommends against forcing periodic password changes unless there is evidence of compromise. Multi-factor authentication (MFA) can significantly enhance security by requiring users to provide multiple forms of authentication, such as a password and a one-time code from a mobile app.

- Token-based authentication: This method uses access tokens to verify user identity. Tokens are typically short-lived and expire after a set period, which limits the damage if a token is compromised. This method is commonly used in API-based interactions and web applications. Common examples are OAuth 2.0, a framework for issuing access tokens to clients, and JWT (JSON Web Token), a compact signed token format often used for those tokens.

- Biometric authentication: This increasingly popular method uses unique biological characteristics, such as fingerprints or facial recognition, to verify user identity. Biometric authentication offers a high level of security but may require specialized hardware and software.

- Multi-factor authentication (MFA): As mentioned earlier, MFA combines multiple authentication factors to improve security. For example, a user might need to provide a password (something they know) and a one-time code from a mobile app (something they have), optionally combined with a fingerprint (something they are). Because each factor belongs to a different category, this layered approach significantly reduces the risk of unauthorized access, even if one authentication factor is compromised.

User Role and Permission Management

A well-designed system for managing user roles and permissions is essential for effective access control. This system should allow administrators to define different user roles with varying levels of access. Permissions are then assigned to these roles, specifying what actions users in that role can perform.

A hierarchical role-based access control (RBAC) model is often used. In this model, users are assigned to roles, roles are assigned permissions, and senior roles can inherit the permissions of more junior ones. This allows for efficient management of user access, as changes to permissions only need to be made at the role level. For example, an “administrator” role might have full access to all files and folders, while a “viewer” role might have only read access. More granular control can be achieved by creating roles with specific permissions, such as “editor” for users who can modify files, or “uploader” for users who can only upload files. A matrix showing roles and associated permissions can be used to visually represent the access control scheme, with roles in rows, permissions in columns, and checkmarks indicating which permissions are granted to each role.
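The role-to-permission mapping can be sketched with a `Flag` enum, so a user's effective permissions are the union of the grants of every role they hold. The role names come from the text, but the exact grants, the user names, and the flat structure (no role inheritance) are assumptions for illustration; inheritance could be added by letting each role list parent roles whose permissions it also receives.

```python
from enum import Flag, auto


class Perm(Flag):
    READ = auto()
    WRITE = auto()
    UPLOAD = auto()
    DELETE = auto()
    SHARE = auto()


# Role -> granted permissions (role names from the text; grants are assumptions).
ROLE_PERMS = {
    "viewer": Perm.READ,
    "uploader": Perm.UPLOAD,
    "editor": Perm.READ | Perm.WRITE | Perm.UPLOAD,
    "administrator": Perm.READ | Perm.WRITE | Perm.UPLOAD | Perm.DELETE | Perm.SHARE,
}

# User -> roles (hypothetical users).
USER_ROLES = {"alice": {"administrator"}, "bob": {"editor"}, "carol": {"viewer", "uploader"}}


def permissions_for(user: str) -> Perm:
    """Union of the permissions of every role the user holds; unknown users get none."""
    granted = Perm(0)
    for role in USER_ROLES.get(user, ()):
        granted |= ROLE_PERMS[role]
    return granted


def is_allowed(user: str, perm: Perm) -> bool:
    return perm in permissions_for(user)


def permission_matrix() -> str:
    """Text matrix with roles as rows, permissions as columns, 'x' where granted."""
    perms = [Perm.READ, Perm.WRITE, Perm.UPLOAD, Perm.DELETE, Perm.SHARE]
    rows = ["role".ljust(14) + " ".join(p.name.ljust(6) for p in perms)]
    for role, granted in ROLE_PERMS.items():
        rows.append(role.ljust(14) + " ".join(("x" if p in granted else "-").ljust(6) for p in perms))
    return "\n".join(rows)
```

Changing what editors may do means editing one entry in `ROLE_PERMS`, which is the management benefit the RBAC model is meant to provide.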

Implementing Access Control Mechanisms

Implementing access control mechanisms involves several key steps. These steps ensure that the system correctly enforces the defined permissions and prevents unauthorized access.

- Defining Access Control Lists (ACLs): ACLs are lists that specify which users or groups have access to a particular file or folder, and what permissions they have (read, write, execute, delete, etc.). ACLs are a fundamental component of most access control systems.

- Using Group-Based Permissions: Organizing users into groups simplifies permission management. Instead of assigning permissions to individual users, permissions can be assigned to groups, and users can be added or removed from groups as needed.

- Implementing Auditing and Logging: Auditing and logging track user activities, providing a record of who accessed what files and when. This is crucial for security monitoring and incident response.

- Regular Security Audits and Reviews: Regular security audits and reviews of the access control system are vital to ensure its effectiveness and identify any vulnerabilities. This includes reviewing user roles and permissions, checking for inactive accounts, and ensuring that the system is properly configured.
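Per-resource ACLs, group-based permissions, and audit logging fit together in a few lines. In this sketch the group table, principal naming scheme (`user:` and `group:` prefixes), and file path are hypothetical; access is denied unless some entry explicitly grants it, and every decision is logged.

```python
import logging
from dataclasses import dataclass, field

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(name)s %(message)s")
audit_log = logging.getLogger("audit")

# Hypothetical group membership; a real system would read this from a directory service.
GROUPS = {"finance": {"alice", "dave"}, "contractors": {"erin"}}


@dataclass
class AclEntry:
    principal: str      # "user:<name>" or "group:<name>"
    perms: frozenset    # e.g. frozenset({"read", "write"})


@dataclass
class Resource:
    path: str
    acl: list = field(default_factory=list)


def principals_of(user: str) -> set:
    """The user's own principal plus one principal per group they belong to."""
    return {f"user:{user}"} | {f"group:{g}" for g, members in GROUPS.items() if user in members}


def check_access(user: str, resource: Resource, perm: str) -> bool:
    """Default deny: allow only if an ACL entry for the user or one of their groups grants `perm`."""
    who = principals_of(user)
    allowed = any(e.principal in who and perm in e.perms for e in resource.acl)
    # Every decision is written to the audit log, whether allowed or denied.
    audit_log.info("user=%s perm=%s path=%s allowed=%s", user, perm, resource.path, allowed)
    return allowed
```

Because the ACL names the group rather than its members, removing a user from `finance` revokes their access everywhere the group is used, without touching any file's ACL.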

File Sharing and Collaboration

File cloud servers revolutionize how individuals and teams share and collaborate on files. By providing a centralized, accessible repository, they eliminate the limitations of traditional methods like email attachments or shared network drives, fostering seamless teamwork and efficient project management. This section will explore how file cloud servers facilitate these processes and highlight key features that enhance collaborative workflows.

File cloud servers facilitate file sharing and collaboration through a variety of features designed to simplify file access, version control, and real-time interaction. Users can easily grant access to specific files or folders, enabling simultaneous editing and reducing the risk of version conflicts. This centralized approach fosters better organization and eliminates the confusion often associated with multiple versions scattered across different devices or email inboxes. The ability to track changes and revert to previous versions adds a crucial layer of security and control to collaborative projects.

Features Supporting Collaborative Workflows

Several features directly support collaborative workflows within file cloud servers. These include real-time co-editing, allowing multiple users to work on the same document simultaneously; integrated commenting and feedback tools, enabling streamlined communication and review processes; version history tracking, providing a complete audit trail of changes made to files; and file locking mechanisms, preventing accidental overwriting and ensuring data integrity. Many platforms also offer notifications, alerting users to updates and changes, keeping everyone informed and engaged. For example, Google Workspace allows multiple users to co-edit a Google Doc simultaneously, while Microsoft 365 offers similar functionality with its suite of applications. Dropbox also allows for shared folders and file versioning.
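Version history and file locking can be sketched as an append-only list of versions plus an exclusive edit lock. The class and method names are hypothetical, and reverting is modeled as saving an old version's content as a new version so that the history is never rewritten; that is one common design, not a description of how any particular product works.

```python
import hashlib
import time
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Version:
    number: int
    author: str
    content: bytes
    sha256: str
    saved_at: float


class VersionedFile:
    def __init__(self, path: str):
        self.path = path
        self.versions: List[Version] = []
        self.locked_by: Optional[str] = None

    def _check_lock(self, user: str) -> None:
        if self.locked_by is not None and self.locked_by != user:
            raise PermissionError(f"{self.path} is locked by {self.locked_by}")

    def lock(self, user: str) -> None:
        self._check_lock(user)
        self.locked_by = user

    def unlock(self, user: str) -> None:
        if self.locked_by == user:
            self.locked_by = None

    def save(self, user: str, content: bytes) -> Version:
        """Append a new version; refused while another user holds the lock."""
        self._check_lock(user)
        version = Version(len(self.versions) + 1, user, content,
                          hashlib.sha256(content).hexdigest(), time.time())
        self.versions.append(version)
        return version

    def revert(self, user: str, number: int) -> Version:
        """Restore version `number` by saving its content as a new version."""
        if not 1 <= number <= len(self.versions):
            raise ValueError(f"no version {number}")
        return self.save(user, self.versions[number - 1].content)

    def current(self) -> bytes:
        return self.versions[-1].content if self.versions else b""
```

Keeping each version's author, timestamp, and hash gives the audit trail mentioned above, and the hash lets clients detect whether their local copy already matches a given version.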

Approaches to File Sharing and Collaboration

Different file cloud servers offer varying approaches to file sharing and collaboration, catering to diverse user needs and preferences. Some platforms prioritize simplicity and ease of use, focusing on basic file sharing and syncing capabilities. Others offer more advanced features, such as robust version control, granular permission settings, and extensive integration with other productivity tools. For instance, a smaller team might opt for a simple service like Dropbox for its ease of use, while a large organization with complex workflows might choose a more comprehensive solution like Microsoft SharePoint or Google Workspace, which offer features like advanced access controls and robust integration with other enterprise applications. The choice often depends on the scale of collaboration, the complexity of the projects, and the specific needs of the users.

Compliance and Regulations

File cloud servers, due to their nature of storing and managing sensitive data, are subject to a wide range of regulations and compliance standards. Understanding and adhering to these requirements is crucial for maintaining data security, protecting user privacy, and avoiding potential legal repercussions. Failure to comply can result in significant fines, legal action, and reputational damage.

The specific regulations applicable to a file cloud server depend heavily on the type of data stored, the industry involved, and the geographical location of the server and its users. A comprehensive compliance strategy requires careful consideration of these factors and a proactive approach to risk management.

Relevant Industry Regulations and Compliance Standards

Numerous regulations affect file cloud server operations. These include, but are not limited to, the General Data Protection Regulation (GDPR) in the European Union, the California Consumer Privacy Act (CCPA), and the Health Insurance Portability and Accountability Act (HIPAA), which governs healthcare data in the United States. Other relevant standards and frameworks include ISO/IEC 27001 (information security management), SOC 2 (covering security, availability, processing integrity, confidentiality, and privacy), and the NIST Cybersecurity Framework. The applicability of each depends on the specific context and the nature of the data being handled. For example, a financial institution would likely face stricter requirements than a small business storing only internal documents.

Strategies for Ensuring Compliance

Implementing a robust compliance program involves several key strategies. Firstly, a thorough risk assessment is vital to identify potential vulnerabilities and prioritize compliance efforts. This assessment should cover all aspects of the file cloud server infrastructure, including data storage, access control, and data transmission. Secondly, a clear data governance policy needs to be established, defining data classification, retention policies, and procedures for handling data breaches. Thirdly, regular audits and penetration testing are necessary to identify and address security weaknesses. Finally, employee training is crucial to ensure that all personnel understand their responsibilities in maintaining compliance. This training should cover relevant regulations, security best practices, and incident response procedures.

Compliance Checklist for File Cloud Server Implementation

A comprehensive checklist is essential for ensuring compliance. The specific items will vary depending on the applicable regulations, but a general checklist might include:

- Data Inventory and Classification: Document all data stored on the file cloud server and classify it according to sensitivity levels.

- Access Control Implementation: Implement strong access controls based on the principle of least privilege, ensuring only authorized users can access specific data.

- Data Encryption: Encrypt data both in transit and at rest to protect against unauthorized access.

- Regular Security Audits and Penetration Testing: Conduct regular audits and penetration tests to identify vulnerabilities and ensure the effectiveness of security controls.

- Incident Response Plan: Develop and regularly test an incident response plan to handle data breaches and other security incidents.

- Data Retention Policy: Establish a clear data retention policy that complies with all applicable regulations.

- Employee Training: Provide regular training to employees on security best practices and compliance requirements.

- Vendor Management: Carefully vet all third-party vendors that have access to the file cloud server and ensure they comply with relevant regulations.

- Documentation: Maintain comprehensive documentation of all compliance-related activities.

- Compliance Monitoring and Reporting: Regularly monitor compliance and generate reports to track progress and identify areas for improvement.
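The first two checklist items, data classification and least-privilege access control, can be sketched together in Python. The sensitivity levels, inventory entries, users, and grants below are hypothetical examples rather than a regulatory requirement; the point is the deny-by-default check, where access is allowed only when an explicit grant covers both the folder and the file’s classification.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical classification levels; real schemes vary by organization."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Data inventory: every stored path is recorded with a classification.
INVENTORY = {
    "/marketing/brochure.pdf": Sensitivity.PUBLIC,
    "/finance/payroll.xlsx": Sensitivity.RESTRICTED,
    "/clients/contracts/acme.pdf": Sensitivity.CONFIDENTIAL,
}

# Explicit grants: user -> list of (folder prefix, highest level readable there).
GRANTS = {
    "alice": [("/marketing/", Sensitivity.INTERNAL)],
    "bob": [("/finance/", Sensitivity.RESTRICTED)],
}


def can_read(user: str, path: str) -> bool:
    """Deny by default; allow only if a grant covers both the folder and the level."""
    level = INVENTORY.get(path)
    if level is None:
        return False  # unclassified data is never served
    for prefix, max_level in GRANTS.get(user, []):
        if path.startswith(prefix) and level <= max_level:
            return True
    return False
```

Refusing unclassified paths ties the access check back to the data inventory: a file that was never classified cannot be read by anyone until it is.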

Future Trends in File Cloud Servers

The landscape of file cloud servers is constantly evolving, driven by advancements in technology and the ever-increasing demands for efficient, secure, and scalable data storage and management solutions. Several key trends are shaping the future of this critical infrastructure, impacting how businesses and individuals store, access, and utilize their data. These trends promise to significantly alter data management strategies and necessitate proactive adaptation by organizations.

Emerging technologies and their implications are reshaping the fundamental architecture and capabilities of file cloud servers. This evolution is characterized by a shift towards more intelligent, automated, and integrated systems that prioritize data security, performance, and cost-effectiveness.

Increased Adoption of Edge Computing

Edge computing, which processes data closer to its source rather than relying solely on centralized cloud servers, is gaining significant traction. This approach reduces latency, improves bandwidth efficiency, and enhances responsiveness for applications requiring real-time data processing, such as video conferencing or collaborative design tools. For file cloud servers, edge computing means faster access to files for users located geographically distant from central data centers, potentially leading to a more distributed and resilient file storage infrastructure. Companies like Microsoft and Amazon are already incorporating edge computing into their cloud offerings, demonstrating the growing importance of this trend.
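One way to illustrate routing users to the closest edge location is to pick the node with the shortest great-circle (haversine) distance. This Python sketch assumes a hypothetical set of edge nodes with approximate coordinates; distance is only a rough proxy for latency, and production systems generally route on measured network conditions instead.

```python
import math

EARTH_RADIUS_KM = 6371.0

# Hypothetical edge locations with approximate (latitude, longitude).
EDGE_NODES = {
    "frankfurt": (50.11, 8.68),
    "virginia": (38.95, -77.45),
    "singapore": (1.35, 103.82),
}


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on a spherical Earth."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_edge(user_lat: float, user_lon: float, nodes=EDGE_NODES) -> str:
    """Return the name of the node geographically closest to the user."""
    return min(nodes, key=lambda name: haversine_km(user_lat, user_lon, *nodes[name]))
```

For example, a user in Paris would be served from `frankfurt` and a user in Tokyo from `singapore`.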

Advancements in Artificial Intelligence (AI) and Machine Learning (ML)

AI and ML are increasingly used to automate data management tasks such as data classification, organization, and security threat detection. In the context of file cloud servers, AI-powered systems can intelligently categorize files, identify duplicates, and predict storage needs, leading to improved efficiency and reduced storage costs. Furthermore, AI can enhance security by detecting and responding to anomalous activities, proactively mitigating potential breaches. For example, ML algorithms can be trained to recognize patterns indicative of malware or unauthorized access attempts, enabling faster and more effective security responses.
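Duplicate detection does not have to start with machine learning: exact duplicates can be found by hashing file contents. The Python sketch below is that plain, non-ML baseline (group files by size, then by SHA-256 digest); ML-based approaches add value mainly for near-duplicates, such as re-saved images, which exact hashing cannot catch. The function names are hypothetical.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files need not fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_duplicates(root: Path) -> list:
    """Return groups of files under root whose contents are byte-identical."""
    # Cheap first pass: only files of equal size can be duplicates.
    by_size = defaultdict(list)
    for p in root.rglob("*"):
        if p.is_file():
            by_size[p.stat().st_size].append(p)

    # Second pass: hash only the files that share a size with another file.
    by_hash = defaultdict(list)
    for same_size in by_size.values():
        if len(same_size) < 2:
            continue
        for p in same_size:
            by_hash[sha256_of(p)].append(p)

    return [sorted(group) for group in by_hash.values() if len(group) > 1]
```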

The Rise of Serverless Computing

Serverless computing eliminates the need for users to manage servers directly, simplifying deployment and reducing operational overhead. For file cloud servers, this translates to easier scalability and reduced management costs. Instead of provisioning and managing servers, users can focus on developing and deploying applications, leveraging the underlying infrastructure provided by the cloud provider. This approach allows for more efficient resource allocation and cost optimization, as users pay only for the compute resources they actually consume. Services such as AWS Lambda and Google Cloud Functions are prominent examples of serverless computing platforms.
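As an illustration of the serverless model, AWS Lambda’s Python runtime invokes a handler function with an `event` and a `context`, and Amazon S3 can trigger such a function when an object is uploaded. The sketch below only records what was uploaded; the bucket name in the usage example and any real follow-up processing are hypothetical. Because S3 invokes the function asynchronously, its return value is not passed back to S3; it is included here only to make the function easy to test locally.

```python
import json
from urllib.parse import unquote_plus


def handler(event, context):
    """Sketch of a Lambda function triggered by S3 object-created notifications.

    It only logs and returns what was uploaded; a real function might
    generate previews, scan for malware, or update a search index.
    """
    uploaded = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        size = record["s3"]["object"].get("size", 0)
        uploaded.append({"bucket": bucket, "key": key, "size": size})
        print(f"New upload: s3://{bucket}/{key} ({size} bytes)")  # goes to CloudWatch Logs
    return {"statusCode": 200, "body": json.dumps(uploaded)}
```

No server is provisioned here: the platform runs `handler` only when an upload event arrives, which is where the pay-per-use cost model comes from.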

Enhanced Security Measures Through Blockchain Technology

Blockchain technology, built around a tamper-evident, append-only ledger, offers the potential to strengthen the security of file cloud servers. Recording file access, modifications, and ownership changes on such a ledger creates a verifiable audit trail, improving data integrity and accountability. A ledger does not by itself prevent breaches or unauthorized access, but it makes unauthorized changes to the record much easier to detect, which can enhance trust and transparency in file sharing and collaboration. Adoption in this specific context is still at an early stage, so its practical impact on data security remains to be proven.
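The tamper-evidence idea behind a blockchain ledger can be shown with a simple hash chain, where each audit entry stores the hash of the previous one. This Python sketch is only that building block: it has no distributed consensus, so whoever controls the single copy could rewrite the entire chain. The class and field names are hypothetical, and a real log would also record timestamps.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass(frozen=True)
class Entry:
    user: str
    action: str
    path: str
    prev_hash: str
    entry_hash: str


def compute_hash(user: str, action: str, path: str, prev_hash: str) -> str:
    # JSON encoding keeps field boundaries unambiguous before hashing.
    payload = json.dumps([user, action, path, prev_hash]).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class AuditLog:
    """Hypothetical append-only audit log where each entry commits to the previous one."""

    def __init__(self) -> None:
        self.entries: List[Entry] = []

    def append(self, user: str, action: str, path: str) -> Entry:
        prev = self.entries[-1].entry_hash if self.entries else GENESIS_HASH
        entry = Entry(user, action, path, prev, compute_hash(user, action, path, prev))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; editing, reordering, or removing an entry mid-chain breaks it.

        Truncating the tail is only detectable against an externally stored latest hash.
        """
        prev = GENESIS_HASH
        for e in self.entries:
            if e.prev_hash != prev or e.entry_hash != compute_hash(e.user, e.action, e.path, e.prev_hash):
                return False
            prev = e.entry_hash
        return True
```

Periodically publishing or externally anchoring the latest `entry_hash` is what keeps a wholesale rewrite or silent truncation from going unnoticed; distributing the ledger among independent parties is the blockchain approach to the same problem.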

Focus on Data Privacy and Compliance

Growing awareness of data privacy and increasing regulatory scrutiny (like GDPR and CCPA) are driving the development of more robust privacy-enhancing technologies and compliance-focused features in file cloud servers. This includes advanced encryption methods, granular access controls, and tools for data anonymization and pseudonymization. Cloud providers are increasingly incorporating these features into their offerings to meet evolving regulatory requirements and user expectations regarding data privacy. The emphasis on data privacy will continue to be a key driver of innovation in file cloud server technology.
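Pseudonymization can be sketched with a keyed hash: each identifier is replaced by an HMAC-SHA256 token that stays consistent across records but cannot be reversed without the key. The Python below assumes a hypothetical secret key that would, in practice, be held in a key management service, and the lowercase normalization is an illustrative choice. Under GDPR, pseudonymized data still counts as personal data, because the key holder can re-link it.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace an identifier with a keyed, deterministic token (HMAC-SHA256)."""
    # Normalize so "Alice@Example.com" and "alice@example.com" map to the same token.
    normalized = identifier.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_records(records, field_name: str, key: bytes):
    """Return copies of the records with one field replaced by its token."""
    return [{**r, field_name: pseudonymize(r[field_name], key)} for r in records]
```

Using a keyed HMAC rather than a plain hash matters: an unkeyed hash of an email address can be reversed by hashing a list of candidate addresses, whereas the HMAC token cannot be recomputed without the key.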

Case Studies of File Cloud Server Implementations

This section presents a detailed case study illustrating the successful implementation of a file cloud server, highlighting key aspects and demonstrating the achieved benefits. The example focuses on a medium-sized marketing agency’s transition to a cloud-based file storage solution.

The agency, “Adroit Marketing,” had approximately 50 employees. Before the implementation, it relied on a complex network of local file servers and shared drives, which led to significant inefficiencies and security vulnerabilities.

Adroit Marketing’s Cloud Server Implementation

Adroit Marketing’s successful implementation involved careful planning and execution across several key areas. The following bullet points outline the significant aspects of its transition.

- Needs Assessment and Solution Selection: Adroit Marketing conducted a thorough assessment of its existing file storage infrastructure, identifying bottlenecks and security risks. This led to the selection of a cloud-based file server solution offered by a reputable provider, chosen based on factors such as scalability, security features, and integration capabilities with existing software. The solution offered robust version control and granular access permissions, directly addressing their previous challenges.

- Data Migration Strategy: A phased approach to data migration was implemented to minimize disruption to ongoing operations. Critical data was migrated first, followed by less time-sensitive files. The migration process was meticulously documented, and regular checkpoints were established to monitor progress and address any issues promptly. This minimized downtime and ensured data integrity throughout the process.

- User Training and Support: Comprehensive training was provided to all employees on the new cloud-based file server system. This included tutorials, online documentation, and hands-on sessions to ensure users were comfortable navigating the new system and utilizing its features effectively. Ongoing support was provided to address any questions or issues that arose after the migration was complete.

- Security Protocols and Access Control: Robust security protocols were implemented, including multi-factor authentication, encryption both in transit and at rest, and regular security audits. Granular access control was configured to ensure that only authorized personnel could access sensitive information. Regular security awareness training was also conducted to educate employees on best practices for data security.
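The case study does not specify Adroit Marketing’s migration tooling, so the following is a generic Python sketch of a phased, checkpointed migration with integrity checks. The cloud destination is modeled as a local directory and the phase lists are hypothetical; a real migration would go through the provider’s upload API or sync client. Each phase copies its files, verifies every copy against a SHA-256 checksum of the source, and stops before the next phase if anything fails to verify.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_file(path: Path) -> str:
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def migrate_phase(files, src_root: Path, dst_root: Path) -> list:
    """Copy one phase of files and verify each copy; return the paths that failed."""
    failures = []
    for rel in files:
        src, dst = src_root / rel, dst_root / rel
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dst)  # copies content and timestamps
        if sha256_file(src) != sha256_file(dst):
            failures.append(rel)
    return failures


def migrate(phases, src_root: Path, dst_root: Path):
    """Run phases in priority order; each phase end is a checkpoint.

    Returns (last phase attempted, failed paths); failures halt the migration.
    """
    for i, files in enumerate(phases, start=1):
        failed = migrate_phase(files, src_root, dst_root)
        print(f"Phase {i}: {len(files) - len(failed)}/{len(files)} files verified")
        if failed:
            return i, failed
    return len(phases), []
```

Listing critical folders in the first phase mirrors the case study’s approach of moving critical data before less time-sensitive files.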

Benefits Achieved by Adroit Marketing

The implementation of the cloud-based file server yielded significant benefits for Adroit Marketing, improving efficiency, security, and collaboration.

- Improved Collaboration: The centralized file storage system facilitated seamless collaboration among team members, regardless of their location. Real-time co-editing capabilities improved project workflow and reduced delays caused by file version conflicts.

- Enhanced Security: The robust security features of the cloud-based system significantly reduced the risk of data breaches and unauthorized access. Data encryption and access controls ensured the confidentiality and integrity of sensitive information.

- Increased Efficiency: The streamlined file management system eliminated the inefficiencies associated with the previous local server setup. Employees gained easy access to files from anywhere with an internet connection, boosting productivity and reducing time wasted searching for files.

- Cost Savings: While an initial investment was required, the cloud-based solution ultimately resulted in cost savings by reducing the need for on-site IT infrastructure, maintenance, and personnel.

Popular Questions

What is the difference between a public and private file cloud server?

Public cloud servers are hosted by a third-party provider and shared among multiple users, while private cloud servers are dedicated to a single organization and hosted either on-premises or by a provider with dedicated resources.

How can I choose the right file cloud server provider for my needs?

Consider factors such as storage capacity requirements, budget, security features, scalability needs, and the level of technical support offered by different providers. It’s beneficial to compare offerings and features before making a decision.

What are the potential risks of using a file cloud server?

Potential risks include data breaches, unauthorized access, data loss due to provider outages, and vendor lock-in. Choosing a reputable provider with robust security measures and implementing appropriate security protocols mitigates these risks.

How do I ensure compliance with data privacy regulations when using a file cloud server?

Familiarize yourself with relevant regulations (e.g., GDPR, CCPA) and select a provider that demonstrates compliance. Implement strong access controls and data encryption to protect sensitive information.