Cloud vs. Server

This section provides a comparative overview of cloud computing and traditional server infrastructure, highlighting their functionalities, advantages, disadvantages, and key differences in cost, scalability, security, and maintenance. Understanding these distinctions is crucial for businesses choosing the optimal solution for their IT needs.

Functional Differences between Cloud and Server

Cloud computing and traditional server infrastructure differ significantly in their architecture and functionality. Traditional server infrastructure involves owning and managing physical servers located on-premises. These servers handle all aspects of data storage, processing, and application delivery. In contrast, cloud computing utilizes a network of remote servers accessed via the internet. This allows for shared resources and scalable computing power without the need for direct hardware management. Cloud providers handle the underlying infrastructure, allowing users to focus on their applications and data.

Advantages and Disadvantages of Cloud Computing

Cloud computing offers several advantages, including scalability (easily adjust resources based on demand), cost-effectiveness (pay-as-you-go models reduce upfront investment), accessibility (access data and applications from anywhere with an internet connection), and enhanced collaboration (easily share resources and data among teams). However, cloud computing also presents some disadvantages. Security concerns remain a primary challenge, requiring careful consideration of data protection and access control measures. Vendor lock-in, the dependence on a specific cloud provider, can also limit flexibility and increase switching costs. Finally, internet connectivity is crucial; outages can disrupt access to cloud-based resources.

Advantages and Disadvantages of Traditional Server Infrastructure

Traditional server infrastructure offers greater control over hardware and software configurations, providing a high degree of customization and potentially improved security due to localized control. It also provides greater predictability in performance and avoids reliance on external internet connectivity. However, on-premises servers require significant upfront investment in hardware and software, ongoing maintenance costs (including staffing for IT support), and limited scalability (expanding capacity can be costly and time-consuming). The total cost of ownership can be substantially higher than cloud solutions, particularly for organizations with fluctuating resource demands.
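The cost trade-off described above can be made concrete with a little arithmetic. The following is a minimal sketch, assuming a simplified model in which on-premises spending is an upfront purchase plus a flat monthly operating cost and cloud spending is a flat monthly bill; the function names and all figures are hypothetical and chosen only for illustration.

```python
import math


def cumulative_on_prem_cost(months, upfront, monthly_ops):
    """Total on-premises spend after `months`: hardware upfront plus running costs."""
    return upfront + monthly_ops * months


def cumulative_cloud_cost(months, monthly_usage):
    """Total cloud spend after `months` under a flat pay-as-you-go bill."""
    return monthly_usage * months


def break_even_month(upfront, monthly_ops, monthly_cloud):
    """First whole month at which on-premises is no more expensive than cloud.

    Returns None when the cloud bill never exceeds the on-premises running
    cost, because the upfront investment is then never recovered.
    """
    if monthly_cloud <= monthly_ops:
        return None
    # upfront + ops * m <= cloud * m   =>   m >= upfront / (cloud - ops)
    return math.ceil(upfront / (monthly_cloud - monthly_ops))


# Hypothetical figures: 60,000 upfront, 1,000/month to operate, 3,500/month in cloud.
print(break_even_month(60_000, 1_000, 3_500))
```

Real comparisons also need to account for hardware refresh cycles, staffing, and usage that varies month to month, none of which this sketch models.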

Comparative Analysis: Cost, Scalability, Security, and Maintenance

| Feature | Cloud Computing | Traditional Server Infrastructure |
|---|---|---|
| Cost | Typically lower upfront cost, pay-as-you-go model, potential for unexpected costs based on usage. | High upfront investment in hardware and software, ongoing maintenance and staffing costs. |
| Scalability | Highly scalable, easily adjust resources based on demand. | Limited scalability, expanding capacity requires significant investment and time. |
| Security | Security responsibility shared between provider and user, potential vulnerabilities related to data breaches and access control. | Greater control over security, but requires dedicated security expertise and ongoing maintenance. |
| Maintenance | Minimal maintenance required by the user; provider handles most infrastructure maintenance. | Requires significant ongoing maintenance, including hardware upgrades, software patching, and system administration. |

Cloud Server Types and Architectures

Understanding the different types of cloud servers and the architectures they operate within is crucial for selecting the right solution to meet specific business needs. The choice depends on factors such as scalability requirements, security concerns, budget constraints, and the level of control desired.

Different cloud server types offer varying levels of control, customization, and cost-effectiveness. Cloud architectures, on the other hand, determine how resources are deployed and managed, impacting factors like security, accessibility, and compliance.

Cloud Server Types

Choosing the appropriate cloud server type hinges on your application’s demands and your organization’s technical capabilities. Each type offers a unique balance of control, flexibility, and cost.

- Virtual Machines (VMs): VMs are virtualized instances of a physical server, providing a dedicated operating system and resources within a shared physical infrastructure. They offer flexibility and scalability, allowing users to easily adjust resources as needed. Examples include instances offered by Amazon Web Services (AWS EC2), Microsoft Azure Virtual Machines, and Google Compute Engine.

- Dedicated Servers: Dedicated servers provide exclusive access to a physical server’s resources, offering maximum control and performance. This option is ideal for applications requiring high levels of security, performance, or customization, although it comes at a higher cost than shared resources like VMs. Companies often use dedicated servers for critical applications or when strict compliance regulations are in place.

- Containers: Containers are lightweight, standalone packages of software that include everything needed to run an application (code, runtime, system tools, and system libraries), allowing for consistent execution across different environments. Containers offer high efficiency and portability, making them suitable for microservices architectures and DevOps workflows. Docker is a popular tool for building and running containers, and Kubernetes is a widely used platform for orchestrating them in cloud environments.

Cloud Architectures

The architecture of a cloud deployment significantly influences its functionality, security, and cost. Understanding the different options is essential for making informed decisions.

- Public Cloud: Public cloud services are provided over the internet by third-party providers (e.g., AWS, Azure, Google Cloud). This offers scalability, cost-effectiveness, and ease of access, but may raise concerns about data security and compliance for sensitive information.

- Private Cloud: A private cloud is a dedicated cloud infrastructure solely used by a single organization. This offers enhanced security and control but typically involves higher setup and maintenance costs compared to public cloud solutions. Private clouds are commonly employed by organizations with stringent security requirements or specific compliance needs.

- Hybrid Cloud: Hybrid cloud combines public and private cloud environments, leveraging the strengths of both. This approach allows organizations to maintain control over sensitive data while benefiting from the scalability and cost-effectiveness of the public cloud. Hybrid clouds are often used to handle fluctuating workloads, with less critical applications residing in the public cloud and sensitive data remaining within the private cloud.

- Multi-Cloud: Multi-cloud involves using multiple public cloud providers simultaneously. This approach offers redundancy, reduces vendor lock-in, and allows each workload to run on the platform that suits it best. Organizations might choose a multi-cloud strategy to diversify their risk, leverage specialized services offered by different providers, or optimize costs by choosing the most cost-effective provider for specific workloads.

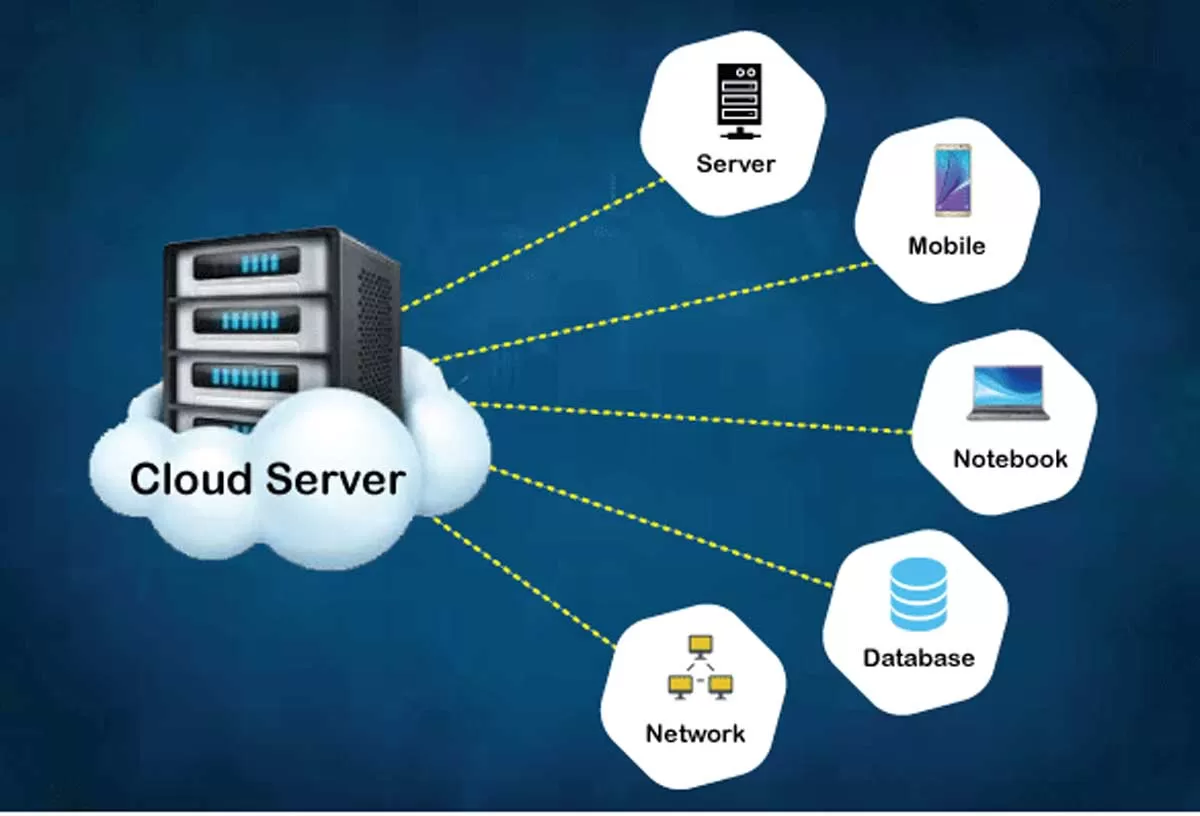

Typical Cloud Server Setup Diagram

The diagram depicts a simplified representation of a cloud server setup. Imagine a box labeled “Cloud Provider Data Center” containing numerous physical servers. Within this box, smaller boxes represent virtualized resources.

One such box is labeled “Virtual Machine (VM)”. This VM contains smaller boxes representing its components: an “Operating System” (e.g., Linux or Windows), “Applications” (e.g., web server, database), and “Data Storage” (e.g., virtual disks). Arrows connect these components, showing data flow within the VM. Another box, labeled “Network,” connects the VM to the internet and other resources within the data center. Finally, a “Control Panel” (or management console) interacts with the VM, allowing for monitoring, configuration, and resource management. This control panel could be accessed via a web interface or command-line interface. The entire setup is contained within the larger “Cloud Provider Data Center” box, highlighting the shared infrastructure nature of cloud computing.

Security Considerations in Cloud and Server Environments

Securing data and applications in both cloud and server environments is paramount in today’s interconnected world. The shared responsibility model, where security is a collaborative effort between the provider and the user, underscores the need for a comprehensive approach that addresses various threats and vulnerabilities. This section details common security threats and outlines best practices for robust security implementation.

Common Security Threats in Cloud and Server Environments

Cloud and server environments face a range of security threats, from external attacks to internal vulnerabilities. Understanding these threats is the first step toward mitigating risk. These threats often exploit weaknesses in infrastructure, applications, or human practices.

- Data breaches: Unauthorized access to sensitive data, often resulting from vulnerabilities in applications or weak security controls.

- Malware infections: Viruses, ransomware, and other malicious software can compromise systems and data, leading to data loss, operational disruption, and financial losses. One example is the NotPetya attack in 2017, destructive malware disguised as ransomware, which caused billions of dollars in damage globally.

- Denial-of-service (DoS) attacks: These attacks flood servers with traffic, making them unavailable to legitimate users. Distributed denial-of-service (DDoS) attacks, which originate from multiple sources, are particularly difficult to mitigate.

- Insider threats: Malicious or negligent employees can pose a significant security risk, potentially leading to data breaches or system compromise.

- Misconfigurations: Incorrectly configured servers or cloud services can create vulnerabilities that attackers can exploit. For example, leaving default passwords unchanged or failing to properly secure storage buckets in the cloud can have severe consequences.

- Phishing and social engineering: Attackers often use deceptive tactics to trick users into revealing sensitive information or granting access to systems.
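Misconfigurations in particular lend themselves to automated checks. The following is a minimal sketch, assuming resources are described as plain dictionaries; the field names (`type`, `public_access`, `password`) and the list of default passwords are hypothetical and do not correspond to any provider's API.

```python
# Hypothetical examples of passwords that ship as vendor defaults.
DEFAULT_PASSWORDS = {"admin", "password", "changeme"}


def find_misconfigurations(resources):
    """Return (resource name, finding) pairs for two common misconfigurations."""
    findings = []
    for res in resources:
        name = res.get("name", "<unnamed>")
        # A storage bucket that anyone on the internet can read.
        if res.get("type") == "bucket" and res.get("public_access", False):
            findings.append((name, "storage bucket is publicly accessible"))
        # A credential that was never changed from its default value.
        if res.get("password") in DEFAULT_PASSWORDS:
            findings.append((name, "default password is still in use"))
    return findings


inventory = [
    {"name": "reports", "type": "bucket", "public_access": True},
    {"name": "db-01", "type": "server", "password": "changeme"},
    {"name": "web-01", "type": "server", "password": "x9!Lq2#v"},
]
for name, finding in find_misconfigurations(inventory):
    print(f"{name}: {finding}")
```

In practice such checks are usually run by the provider's own configuration-audit tooling or a dedicated scanner; the sketch only shows the shape of the rule.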

Best Practices for Securing Data and Applications

Implementing robust security measures requires a multi-layered approach that combines technical controls, security policies, and employee training. A proactive strategy is crucial to minimize risks and ensure data integrity.

- Strong authentication and authorization: Employ multi-factor authentication (MFA) and implement role-based access control (RBAC) to limit access to sensitive data and resources.

- Regular security patching and updates: Keep software and operating systems up-to-date to address known vulnerabilities. This includes patching both server software and cloud-based services.

- Data encryption: Encrypt data both in transit and at rest to protect it from unauthorized access, even if a breach occurs. This includes encrypting databases, files, and communication channels.

- Regular security audits and penetration testing: Conduct regular security assessments to identify and address vulnerabilities before attackers can exploit them. Penetration testing simulates real-world attacks to evaluate the effectiveness of security controls.

- Security Information and Event Management (SIEM): Utilize SIEM systems to collect and analyze security logs from various sources, providing real-time monitoring and threat detection capabilities.

- Incident response plan: Develop and regularly test an incident response plan to handle security breaches effectively and minimize damage.
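The first practice in the list, combining MFA with role-based access control, can be sketched in a few lines. This is an illustration under stated assumptions rather than a production design: the roles, actions, and the `is_allowed` helper are hypothetical.

```python
# Hypothetical mapping from role to the actions that role may perform.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "operator": {"read", "restart"},
    "admin": {"read", "restart", "delete"},
}


def is_allowed(roles, action, mfa_verified):
    """Allow an action only if MFA succeeded and at least one role grants it."""
    if not mfa_verified:
        return False
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)


print(is_allowed(["operator"], "restart", mfa_verified=True))
print(is_allowed(["operator"], "delete", mfa_verified=True))
```

Denying by default (unknown roles grant nothing, and a missing MFA check blocks everything) keeps mistakes in the role table from silently widening access.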

Examples of Security Measures

Several security measures can be implemented to protect cloud and server environments. The choice of specific measures depends on the organization’s specific needs and risk profile.

- Firewalls: Network firewalls control traffic entering and leaving a network, blocking unauthorized access attempts. Cloud firewalls provide similar functionality for cloud-based resources.

- Intrusion Detection/Prevention Systems (IDS/IPS): These systems monitor network traffic for malicious activity. An IDS alerts administrators to potential threats, while an IPS also blocks malicious traffic automatically. Cloud-based IDS/IPS solutions are readily available.

- Data Loss Prevention (DLP): DLP tools monitor data movement and prevent sensitive data from leaving the organization’s control, whether through email, cloud storage, or other channels.

- Virtual Private Networks (VPNs): VPNs create secure connections between devices and networks, encrypting data in transit and protecting it from eavesdropping.

- Data encryption techniques: Various encryption algorithms, such as AES-256, can be used to encrypt data at rest and in transit. The choice of algorithm should be based on security requirements and performance considerations.

Data Storage and Management in Cloud and Server Solutions

Data storage and management represent a critical aspect of both cloud and server-based IT infrastructures. The choice between these approaches significantly impacts cost, scalability, security, and overall operational efficiency. Understanding the nuances of each environment is crucial for making informed decisions aligned with specific business needs and data requirements.

Cloud and server environments offer distinct approaches to data storage and management, each with its own advantages and disadvantages. On-premise servers provide direct control over hardware and software, while cloud solutions offer scalability, flexibility, and reduced infrastructure management overhead. This section will explore these differences in detail, focusing on storage options, backup and restoration procedures, and strategies for optimizing performance and reliability.

Data Storage Options in Cloud and Server Environments

The options for storing data differ significantly between cloud and server environments. On-premise servers typically rely on direct-attached storage (DAS), network-attached storage (NAS), or storage area networks (SANs). DAS involves connecting storage devices directly to a server, offering high performance but limited scalability. NAS provides centralized storage accessible via a network, offering better scalability and ease of management. SANs are high-performance storage networks offering advanced features like data replication and failover capabilities. Cloud storage, on the other hand, offers a range of services including object storage (like Amazon S3 or Azure Blob Storage), block storage (like Amazon EBS or Azure Managed Disks), and file storage (like Amazon EFS or Azure Files). Object storage is ideal for unstructured data, block storage for virtual machine disks, and file storage for applications requiring traditional file system access. The choice depends on the type of data, performance requirements, and cost considerations.

Data Backup and Restoration Procedures

Data backup and restoration procedures are essential for business continuity and disaster recovery. In server environments, backups can be performed using various methods, including file-level backups, image-level backups, and database backups. These backups can be stored locally, on a NAS device, or on a separate server. Restoration involves retrieving the backups and restoring them to the original location or a new one. Cloud environments offer automated backup and recovery services, often integrated with storage solutions. These services typically provide options for backing up data to different regions for disaster recovery purposes. Restoration can be automated or performed manually, depending on the service and configuration. Regular testing of backup and restoration procedures is crucial to ensure their effectiveness.
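A file-level backup is only useful if the copy can be trusted. The sketch below, which uses only the Python standard library, copies a file to a backup directory and verifies the copy by comparing SHA-256 checksums; the function names and directory layout are assumptions made for illustration.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path):
    """Compute the SHA-256 checksum of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_file(source, backup_dir):
    """Copy `source` into `backup_dir` and verify the copy by checksum."""
    backup_dir = Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / Path(source).name
    shutil.copy2(source, target)  # copy2 also preserves file timestamps
    if sha256_of(source) != sha256_of(target):
        raise OSError(f"backup of {source} failed verification")
    return target
```

A call such as `backup_file("orders.db", "/mnt/nas/backups")` (both paths hypothetical) returns the path of the verified copy. Checksum verification confirms the copy is intact, but it does not replace periodically restoring from a backup to confirm the whole procedure works.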

Data Management Strategies for Optimal Performance and Reliability

Effective data management is key to maximizing performance and reliability in both cloud and server environments. Strategies include data deduplication to reduce storage space, data compression to improve storage efficiency, and data tiering to move less frequently accessed data to cheaper storage tiers. In cloud environments, leveraging managed services like database-as-a-service (DBaaS) can significantly simplify data management tasks. Monitoring and logging are crucial for identifying performance bottlenecks and potential issues. Regular security audits and updates are necessary to protect data from unauthorized access and cyber threats. Implementing robust disaster recovery plans, including data replication and failover mechanisms, is vital for ensuring business continuity in the event of hardware failure or other unforeseen events. For example, a financial institution might use a multi-region replication strategy in the cloud to ensure high availability and data redundancy, minimizing downtime in case of a regional outage. A retail company with an on-premise server infrastructure might implement a 3-2-1 backup strategy (3 copies of data on 2 different media, with 1 copy offsite) to protect against data loss.
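The 3-2-1 rule mentioned above is simple enough to check mechanically. The following is a minimal sketch, assuming each copy of the data (including the primary) is described by its storage medium and whether it is kept offsite; the `BackupCopy` type is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    media: str     # e.g. "disk", "tape", "cloud" (labels are illustrative)
    offsite: bool  # True if the copy is stored away from the primary site


def satisfies_3_2_1(copies):
    """Check for at least 3 copies, on at least 2 media types, with 1 offsite."""
    enough_copies = len(copies) >= 3
    enough_media = len({copy.media for copy in copies}) >= 2
    has_offsite = any(copy.offsite for copy in copies)
    return enough_copies and enough_media and has_offsite


copies = [
    BackupCopy(media="disk", offsite=False),  # primary data
    BackupCopy(media="disk", offsite=False),  # local NAS backup
    BackupCopy(media="tape", offsite=True),   # tape stored at another site
]
print(satisfies_3_2_1(copies))
```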

Cloud and Server Deployment Models

Choosing the right deployment model is crucial for success in leveraging cloud and server solutions. The model selected significantly impacts cost, scalability, security, and overall management. Understanding the differences between the primary models is essential for making informed decisions.

The most prevalent models, more commonly called cloud service models, are Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). Each offers a different level of abstraction and control, catering to varying needs and technical expertise.

Infrastructure as a Service (IaaS)

IaaS provides fundamental computing resources, including virtual machines (VMs), storage, and networking. Users have complete control over the operating system and applications, offering maximum flexibility. This model is ideal for organizations requiring high customization and control over their infrastructure. Examples include Amazon Web Services (AWS) EC2, Microsoft Azure Virtual Machines, and Google Compute Engine. These platforms offer a range of virtual machine sizes and configurations, allowing users to tailor their infrastructure to specific workloads.

Platform as a Service (PaaS)

PaaS abstracts away much of the underlying infrastructure, focusing instead on providing a platform for developing, deploying, and managing applications. Users are responsible for the application code and its configuration, but the underlying infrastructure, including servers, operating systems, and databases, is managed by the PaaS provider. This simplifies development and deployment, reducing the operational overhead. Examples include Heroku, Google App Engine, and AWS Elastic Beanstalk. These platforms often offer integrated tools for development, testing, and deployment, streamlining the application lifecycle.

Software as a Service (SaaS)

SaaS delivers software applications over the internet, eliminating the need for users to install or manage the software themselves. Users access the applications through a web browser or dedicated client, paying a subscription fee for access. This model offers the highest level of abstraction, requiring minimal technical expertise. Examples include Salesforce, Microsoft 365, and Google Workspace. These applications are typically multi-tenant, meaning multiple customers share the same underlying infrastructure, allowing for economies of scale.

Comparison of Cloud and Server Deployment Models

The following table summarizes the key characteristics and benefits of each deployment model:

| Deployment Model | Characteristics | Benefits | Example Providers |
|---|---|---|---|
| IaaS | Provides virtual servers, storage, and networking; maximum control over infrastructure; requires significant technical expertise. | High flexibility, customization, and control; cost-effective for large-scale deployments; ideal for complex applications. | AWS EC2, Azure VMs, Google Compute Engine |
| PaaS | Provides a platform for application development and deployment; abstracts away underlying infrastructure; simplifies development and deployment. | Reduced operational overhead; faster development cycles; easier scalability; suitable for agile development methodologies. | Heroku, Google App Engine, AWS Elastic Beanstalk |
| SaaS | Delivers software applications over the internet; requires minimal technical expertise; typically subscription-based. | Easy to use; low maintenance; cost-effective for small to medium-sized businesses; accessible from anywhere with an internet connection. | Salesforce, Microsoft 365, Google Workspace |

Cost Optimization Strategies for Cloud and Server Resources

Effective cost management is crucial for maintaining the financial health of any organization relying on cloud or server infrastructure. Uncontrolled spending on computing resources can quickly escalate, impacting profitability and potentially hindering growth. This section details strategies for optimizing costs in both cloud and on-premise server environments, focusing on practical techniques and best practices.

Optimizing cloud and server costs requires a multifaceted approach encompassing proactive planning, diligent monitoring, and the strategic utilization of available tools and resources. By implementing the strategies outlined below, organizations can significantly reduce their infrastructure expenses without compromising performance or reliability.

Right-Sizing Instances

Right-sizing involves selecting the appropriate size of virtual machine (VM) or server instance to meet the specific needs of your application or workload. Over-provisioning, where resources are allocated beyond actual requirements, leads to unnecessary expenses. Conversely, under-provisioning can result in performance bottlenecks and application instability. A careful analysis of CPU utilization, memory consumption, and storage needs is essential for determining the optimal instance size. Tools provided by cloud providers often offer detailed usage metrics, enabling informed decision-making regarding instance scaling. For example, Amazon Web Services (AWS) provides tools like AWS Cost Explorer and EC2 instance optimization recommendations. Similarly, Microsoft Azure offers Azure Cost Management + Billing and recommendations for optimizing VM sizes. By consistently monitoring resource usage and adjusting instance sizes accordingly, organizations can achieve substantial cost savings.
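The right-sizing decision can be expressed as a simple rule over observed utilization. The sketch below is one possible heuristic, not a provider's recommendation algorithm: the size names, the 30% and 80% thresholds, and the use of peak CPU as the only signal are all assumptions made for illustration.

```python
# Hypothetical instance sizes, ordered from smallest to largest.
SIZES = ["small", "medium", "large", "xlarge"]


def recommend_size(current, cpu_samples, low=30.0, high=80.0):
    """Suggest one step down, one step up, or no change based on peak CPU (%).

    Peak CPU above `high` suggests under-provisioning; peak CPU below `low`
    suggests over-provisioning. With no samples the current size is kept.
    """
    if not cpu_samples:
        return current
    peak = max(cpu_samples)
    index = SIZES.index(current)
    if peak > high and index < len(SIZES) - 1:
        return SIZES[index + 1]
    if peak < low and index > 0:
        return SIZES[index - 1]
    return current


print(recommend_size("large", [10.0, 12.5, 25.0]))  # peak is below 30%
```

A fuller analysis would also look at memory, storage, and network usage, and would typically use a high percentile rather than the absolute peak so that a single spike does not drive the decision.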

Utilizing Cost-Management Tools

Cloud providers and server management platforms offer a range of cost-management tools designed to provide insights into spending patterns and identify areas for improvement. These tools often include features such as cost allocation, anomaly detection, and forecasting capabilities. For instance, AWS Cost Explorer allows users to visualize spending trends, identify cost drivers, and generate reports for detailed analysis. Azure Cost Management + Billing provides similar functionalities, along with the ability to set budgets and receive alerts when spending exceeds predefined thresholds. These tools enable proactive cost optimization by highlighting areas where resource consumption can be reduced or optimized. Regularly reviewing these reports and leveraging the insights provided is crucial for maintaining cost efficiency.
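Budget alerts and forecasts of the kind these tools provide boil down to straightforward calculations. The following is a rough sketch, assuming alerts fire at fixed fractions of the budget and that spending continues at the month's average daily rate; the function names and thresholds are hypothetical and do not mirror any provider's API.

```python
def budget_alerts(spend_to_date, budget, thresholds=(0.5, 0.8, 1.0)):
    """Return the budget fractions that current spending has reached or passed."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    ratio = spend_to_date / budget
    return [t for t in thresholds if ratio >= t]


def forecast_month_end(spend_to_date, day_of_month, days_in_month):
    """Project month-end spend from the average daily spend so far."""
    if day_of_month < 1:
        raise ValueError("day_of_month must be at least 1")
    return spend_to_date / day_of_month * days_in_month


# Hypothetical figures: 850 spent against a 1,000 budget.
print(budget_alerts(850, 1000))
print(forecast_month_end(500, day_of_month=10, days_in_month=30))
```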

Managing Resource Consumption

Efficient resource management is paramount for minimizing expenses. This involves implementing strategies to reduce idle time, optimize application performance, and consolidate resources where possible. For example, scheduling tasks during off-peak hours, utilizing auto-scaling features to dynamically adjust resources based on demand, and implementing robust monitoring systems to detect and address performance bottlenecks can significantly reduce overall resource consumption. In server environments, consolidating multiple physical servers onto fewer, more powerful machines can lead to cost savings in terms of power consumption, maintenance, and space. Regularly reviewing and decommissioning unused or underutilized resources is also a critical aspect of efficient resource management.

Implementing Reserved Instances or Committed Use Discounts

Cloud providers often offer discounted pricing in exchange for a longer-term commitment to using specific resources or to a minimum level of spend. For example, AWS offers Reserved Instances (RIs) and Savings Plans, Azure offers Reserved Virtual Machine Instances and the Azure savings plan for compute, and Google Cloud offers committed use discounts. By carefully assessing workload requirements and forecasting future needs, organizations can leverage these discounts to significantly reduce their cloud spending. It’s crucial to accurately predict resource requirements to avoid over-commitment and potential waste. For instance, a company anticipating a sustained increase in workload could benefit from committing to a longer-term reservation, whereas a company with fluctuating demands might find spot instances or on-demand pricing more cost-effective.
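Whether a commitment pays off depends on how much of the committed capacity is actually used. The sketch below assumes a simplified model in which a commitment is billed for every hour at a lower rate, while on-demand capacity is billed only for hours used; the rates are hypothetical.

```python
def break_even_utilization(on_demand_hourly, committed_hourly):
    """Fraction of hours a resource must run for the commitment to cost the same.

    committed_hourly * total_hours == on_demand_hourly * used_hours
    =>  used_hours / total_hours == committed_hourly / on_demand_hourly
    """
    if on_demand_hourly <= 0:
        raise ValueError("on_demand_hourly must be positive")
    return committed_hourly / on_demand_hourly


def cheaper_option(on_demand_hourly, committed_hourly, expected_utilization):
    """Pick the cheaper pricing model for an expected utilization between 0 and 1."""
    threshold = break_even_utilization(on_demand_hourly, committed_hourly)
    return "commitment" if expected_utilization > threshold else "on-demand"


# Hypothetical rates: 0.10/hour on demand versus 0.06/hour committed.
print(cheaper_option(0.10, 0.06, expected_utilization=0.9))
print(cheaper_option(0.10, 0.06, expected_utilization=0.3))
```

With these example rates the commitment only wins if the resource runs more than about 60% of the time, which is why steady workloads suit commitments and bursty ones do not.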

Automating Resource Provisioning and De-provisioning

Automating the provisioning and de-provisioning of resources can help optimize costs by ensuring that resources are only utilized when needed. This can be achieved through Infrastructure-as-Code (IaC) tools such as Terraform or CloudFormation, which allow for the automated creation and destruction of infrastructure components based on predefined configurations. Automating the process minimizes manual intervention, reducing the risk of human error and ensuring efficient resource allocation. For example, an application whose auto-scaling rules are defined through IaC can scale up during peak demand and scale down during off-peak hours, ensuring optimal resource utilization and minimizing unnecessary expenses.
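The core idea behind declarative IaC tools is to compare a desired configuration with what currently exists and derive the changes needed. The following is a conceptual sketch of that comparison only; it is not how Terraform or CloudFormation are implemented, and the resource names and configuration fields are hypothetical.

```python
def plan_changes(desired, actual):
    """Compare desired and actual resources (name -> config) and list the changes."""
    desired_names = set(desired)
    actual_names = set(actual)
    return {
        # Declared but not yet running: provision it.
        "create": sorted(desired_names - actual_names),
        # Running with a configuration that differs from the declaration.
        "update": sorted(
            name for name in desired_names & actual_names
            if desired[name] != actual[name]
        ),
        # Running but no longer declared: de-provision it to stop paying for it.
        "delete": sorted(actual_names - desired_names),
    }


desired = {"web": {"size": "medium"}, "worker": {"size": "small"}}
actual = {"web": {"size": "large"}, "old-test-env": {"size": "small"}}
print(plan_changes(desired, actual))
```

The `delete` list is where the cost benefit shows up: anything that exists but is no longer declared is flagged for removal instead of lingering unnoticed.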

Scalability and Elasticity in Cloud and Server Infrastructure

Scalability and elasticity are critical factors in choosing the right infrastructure for any application. While both on-premise servers and cloud solutions offer ways to increase capacity, their approaches and ease of implementation differ significantly. Understanding these differences is crucial for building robust and cost-effective systems. This section will explore the capabilities of each and how to design for efficient scaling in both environments.

Cloud and server solutions approach scalability and elasticity in fundamentally different ways. Traditional server environments typically require significant upfront investment and planning for scalability. Adding capacity often involves purchasing and installing new hardware, a process that can be time-consuming and disruptive. In contrast, cloud solutions offer on-demand scalability, allowing users to easily adjust resources up or down based on real-time needs. This flexibility is a key advantage of cloud computing, enabling businesses to adapt quickly to changing demands and optimize resource utilization.

Cloud Scalability and Elasticity Mechanisms

Cloud providers offer a range of mechanisms to achieve scalability and elasticity. Auto-scaling features automatically adjust the number of virtual machines or containers based on predefined metrics, such as CPU utilization or request volume. This ensures that the application always has sufficient resources to handle the current workload without manual intervention. Load balancers distribute traffic across multiple instances, preventing overload on any single server. These features, combined with the ability to quickly provision new resources, make cloud environments highly adaptable to fluctuating demands. For example, a retail website might automatically scale up its infrastructure during peak shopping seasons like Black Friday, handling a surge in traffic without performance degradation, then scale back down afterward, optimizing cost efficiency.
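Metric-based auto-scaling often uses a proportional rule: scale the instance count by the ratio of the observed metric to its target. The sketch below illustrates that rule (the same form documented for the Kubernetes Horizontal Pod Autoscaler) under simplifying assumptions; the function name and the minimum and maximum bounds are hypothetical, and real autoscalers add cooldowns and tolerances that are omitted here.

```python
import math


def desired_instances(current, metric_value, target_value, min_count=1, max_count=10):
    """Proportional scaling: ceil(current * metric / target), clamped to bounds."""
    if current < 1:
        raise ValueError("current must be at least 1")
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    raw = math.ceil(current * metric_value / target_value)
    return max(min_count, min(max_count, raw))


# 4 instances averaging 90% CPU against a 60% target.
print(desired_instances(4, metric_value=90, target_value=60))  # prints 6
# The same fleet at 30% CPU can shrink.
print(desired_instances(4, metric_value=30, target_value=60))  # prints 2
```

The clamp matters for cost as much as for stability: `max_count` caps what a traffic spike (or an attack) can add to the bill, and `min_count` keeps enough capacity for availability.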

Server Scalability and Elasticity Limitations

Scaling a server environment typically involves adding physical hardware. This process is often more complex, time-consuming, and costly than its cloud counterpart. While techniques like load balancing and clustering can improve performance and availability, they don’t offer the same level of on-demand scalability as cloud solutions. Predicting future needs and proactively scaling hardware becomes crucial to avoid performance bottlenecks, but over-provisioning can lead to wasted resources and increased costs. For example, a company might invest in additional servers to anticipate future growth, but if that growth doesn’t materialize as expected, they are left with underutilized hardware.
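Because on-premises hardware takes time to procure, capacity planning usually starts from an estimate of when current capacity will run out. The following is a minimal sketch, assuming load grows at a constant compound monthly rate; the function name and figures are hypothetical.

```python
import math


def months_until_full(current_load, capacity, monthly_growth_rate):
    """Whole months until load reaches capacity under compound monthly growth.

    Solves current_load * (1 + rate) ** m >= capacity for the smallest whole m.
    Returns 0 if capacity is already reached and None if load is not growing.
    """
    if current_load <= 0 or capacity <= 0:
        raise ValueError("current_load and capacity must be positive")
    if current_load >= capacity:
        return 0
    if monthly_growth_rate <= 0:
        return None
    return math.ceil(
        math.log(capacity / current_load) / math.log(1 + monthly_growth_rate)
    )


# Hypothetical figures: 500 requests/s today, hardware rated for 1,000, growing 5% a month.
print(months_until_full(500, 1000, 0.05))
```

If the result is shorter than the time needed to buy and install hardware, the purchase has to start now. If growth then falls short of the forecast, the result is the underutilized hardware described above.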

Designing for Efficient Scalability in Cloud and Server Environments

Designing systems for efficient scalability requires careful consideration of several factors, regardless of the chosen infrastructure. Modular design, where the application is broken down into independent components that can be scaled individually, is essential. Using a microservices architecture further enhances this approach, allowing for independent scaling of specific functionalities. Performance testing helps identify bottlenecks before they reach production, and a robust monitoring system provides real-time insights into resource utilization and performance in both environments. This allows for proactive adjustments to resource allocation, preventing performance degradation. In cloud environments, leveraging auto-scaling features and load balancing is key, while in server environments, careful capacity planning and the use of clustering and load balancing technologies are necessary.

Comparison of Scalability and Elasticity

| Feature | Cloud | Server |
|---|---|---|
| Scalability | Highly scalable, on-demand resource provisioning | Limited scalability, requires manual hardware additions |
| Elasticity | Highly elastic, automatic scaling based on demand | Limited elasticity, requires manual intervention |
| Cost | Pay-as-you-go model, cost-effective for fluctuating workloads | Higher upfront investment, potential for underutilized resources |
| Speed of Deployment | Rapid deployment, new resources available within minutes | Slower deployment, hardware procurement and installation time |

Disaster Recovery and Business Continuity Planning

Robust disaster recovery (DR) and business continuity (BC) planning are crucial for maintaining operational resilience in both cloud and server environments. These plans ensure minimal disruption to business operations and data availability in the face of unforeseen events, such as natural disasters, cyberattacks, or hardware failures. A well-defined strategy minimizes downtime, protects valuable data, and safeguards the organization’s reputation.

Effective DR and BC planning requires a comprehensive understanding of potential threats and vulnerabilities, coupled with proactive mitigation strategies. This involves identifying critical systems and data, establishing recovery time objectives (RTOs) and recovery point objectives (RPOs), and implementing appropriate technologies and procedures. The specific approach will vary depending on the organization’s size, industry, and risk tolerance. For example, a financial institution will have far stricter RTOs and RPOs than a small retail business.
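RTO and RPO become actionable once they are compared with what the current setup can deliver. The sketch below assumes that the worst-case data loss equals the interval between backups and that restore time has been measured in a drill; the function names and example values are hypothetical.

```python
from datetime import timedelta


def meets_rpo(backup_interval, rpo):
    """Worst-case data loss is one full backup interval, so it must fit the RPO."""
    return backup_interval <= rpo


def meets_rto(measured_restore_time, rto):
    """The restore time observed in a drill must fit within the RTO."""
    return measured_restore_time <= rto


# Hypothetical targets: lose at most 1 hour of data, be back within 4 hours.
rpo = timedelta(hours=1)
rto = timedelta(hours=4)

print(meets_rpo(timedelta(hours=24), rpo))  # nightly backups
print(meets_rpo(timedelta(minutes=15), rpo))
print(meets_rto(timedelta(hours=3), rto))
```

A nightly backup cannot satisfy a one-hour RPO no matter how reliable it is, which is why strict objectives push organizations toward frequent snapshots or continuous replication.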

Data Protection Strategies

Data protection is paramount in any DR and BC plan. This involves implementing multiple layers of protection, including regular backups, data replication, and robust security measures. Backups should be stored offsite, ideally in a geographically diverse location, to protect against data loss due to physical damage or theft. Data replication ensures data availability by maintaining copies in multiple locations, allowing for quick failover in case of a primary site outage. Encryption is essential to protect data both in transit and at rest, safeguarding against unauthorized access. A well-defined data retention policy is also necessary, specifying how long data needs to be retained and under what conditions.

Service Availability Strategies

Ensuring service availability during disruptions is another critical aspect of DR and BC planning. This involves implementing high-availability architectures, such as redundant systems and load balancing, to distribute traffic and prevent single points of failure. Cloud-based solutions often offer built-in redundancy and failover capabilities, making them attractive for organizations seeking enhanced availability. For on-premises server environments, implementing redundant hardware and network infrastructure is crucial. Regular testing and drills are essential to validate the effectiveness of the DR plan and ensure that personnel are adequately trained to respond to incidents. This includes simulating various disaster scenarios and evaluating the time taken for recovery. This process identifies any weaknesses or gaps in the plan and allows for necessary adjustments.
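The failover idea can be sketched as choosing the first healthy endpoint from a priority-ordered list. This is a simplified illustration: the endpoint names are hypothetical, and the health check is a stub standing in for a real probe such as an HTTP or TCP check.

```python
def choose_active(endpoints, is_healthy):
    """Return the first healthy endpoint in priority order, or None."""
    for endpoint in endpoints:
        if is_healthy(endpoint):
            return endpoint
    return None


# Hypothetical endpoints; the dict stands in for live health probes.
status = {
    "primary.example.internal": False,   # simulated primary-site outage
    "standby.example.internal": True,
}
active = choose_active(list(status), lambda ep: status[ep])
print(active)  # standby.example.internal
```

A DR drill can exercise the same logic by marking the primary as unhealthy and confirming that traffic moves to the standby.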

Disaster Recovery Plan Checklist

A comprehensive DR plan requires careful consideration of numerous factors. The following checklist outlines essential steps for implementing a robust plan:

- Risk Assessment: Identify potential threats and vulnerabilities affecting your organization.

- Critical System Identification: Determine which systems and data are critical to business operations.

- RTO/RPO Definition: Establish acceptable recovery time and recovery point objectives.

- Backup and Recovery Strategy: Define backup frequency, storage location, and recovery procedures.

- Data Replication Strategy: Implement data replication to ensure data availability.

- Failover Mechanisms: Establish failover procedures for critical systems and applications.

- Testing and Drills: Regularly test the DR plan to validate its effectiveness.

- Communication Plan: Develop a communication plan to keep stakeholders informed during an incident.

- Recovery Site Selection: Choose an appropriate recovery site (hot, warm, or cold site).

- Personnel Training: Train personnel on their roles and responsibilities during a disaster.

Monitoring and Management of Cloud and Server Systems

Effective monitoring and management are crucial for ensuring the reliability, performance, and security of cloud and server systems. This involves implementing robust tools and techniques to track key metrics, proactively identify potential issues, and swiftly respond to incidents. A comprehensive approach allows for optimized resource utilization and minimizes downtime.

In practice, proactive monitoring means tracking key performance indicators (KPIs), analyzing system logs, and alerting on significant events, so that issues are detected and resolved early, before they grow into outages.

Monitoring Tools and Techniques

A variety of tools and techniques are available for monitoring and managing cloud and server systems. These range from basic command-line utilities to sophisticated, cloud-based monitoring platforms. The choice of tools depends on factors such as the scale of the infrastructure, the specific needs of the organization, and the budget. Common tools include Nagios, Zabbix, Prometheus, Datadog, and cloud-provider specific monitoring services like AWS CloudWatch, Azure Monitor, and Google Cloud Monitoring. These tools provide capabilities such as system resource monitoring (CPU, memory, disk I/O), network monitoring (bandwidth usage, latency), application performance monitoring (response times, error rates), and log management.

Performance Metrics Tracking and Issue Identification

Tracking key performance metrics is vital for identifying potential issues and optimizing system performance. Common metrics include CPU utilization, memory usage, disk I/O, network latency, and application response times. Deviations from established baselines can indicate problems such as resource bottlenecks, application errors, or network connectivity issues. Effective tracking often involves setting thresholds and alerts, allowing for immediate notification when a metric crosses a predefined limit. Analyzing historical data allows for trend identification and proactive capacity planning. For example, consistently high CPU utilization might indicate a need for more powerful servers or application optimization. Similarly, increasing network latency could point to network congestion or a faulty network component.
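One simple way to detect a deviation from a baseline is to compare the current reading with the mean and standard deviation of recent history. The sketch below assumes that approach; the function name, the factor `k`, and the sample values are illustrative, and production systems often use more robust methods.

```python
from statistics import mean, stdev


def deviates_from_baseline(history, current, k=3.0):
    """Flag `current` if it lies more than k standard deviations from the mean."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs >= 2 points
    if sigma == 0:
        return current != mu
    return abs(current - mu) > k * sigma


# Hypothetical CPU utilization samples (percent) forming the baseline.
cpu_history = [40, 42, 41, 39, 43, 40, 41]
print(deviates_from_baseline(cpu_history, 42))  # False
print(deviates_from_baseline(cpu_history, 85))  # True
```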

Monitoring Dashboards and Alerts

Monitoring dashboards provide a centralized view of system health and performance. These dashboards typically display key metrics in real-time, using charts, graphs, and other visualizations to make it easy to identify trends and potential issues. Customizable dashboards allow administrators to focus on the most relevant metrics for their specific needs. Alerts are crucial for proactive issue management. These alerts are triggered when a metric crosses a predefined threshold or when an error occurs. Alerts can be delivered via various channels, including email, SMS, or dedicated monitoring applications. For example, an alert could be triggered if CPU utilization exceeds 90% for a sustained period, indicating a potential performance bottleneck. Similarly, an alert could be generated if a critical application fails to respond within a certain timeframe. These alerts enable timely intervention, minimizing the impact of incidents.
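The "sustained period" condition matters because a single spike should not page anyone. A minimal sketch of such a rule follows, assuming evenly spaced samples and a fixed-size window; the class name and the sample readings are hypothetical.

```python
from collections import deque


class SustainedThresholdAlert:
    """Fire only when the last `window` samples all exceed `threshold`."""

    def __init__(self, threshold, window):
        self.threshold = threshold
        self.samples = deque(maxlen=window)  # keeps only the newest samples

    def observe(self, value):
        self.samples.append(value)
        window_full = len(self.samples) == self.samples.maxlen
        return window_full and all(v > self.threshold for v in self.samples)


# Hypothetical CPU readings (percent), one per sampling interval.
alert = SustainedThresholdAlert(threshold=90, window=3)
readings = [95, 96, 80, 92, 93, 97]
fired = [alert.observe(r) for r in readings]
print(fired)  # [False, False, False, False, False, True]
```

The dip to 80% resets the condition, so the alert fires only after three consecutive readings above the threshold.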

Migration from Server to Cloud

Migrating applications and data from a traditional server environment to a cloud platform can significantly improve scalability, flexibility, and cost-efficiency. However, a well-planned and executed migration is crucial to minimize disruption and ensure a smooth transition. This section details a step-by-step guide, incorporating best practices to achieve a successful cloud migration.

Assessment and Planning

A thorough assessment of your current server environment is the foundation of a successful migration. This involves identifying all applications, databases, and dependencies, analyzing their resource consumption, and determining their suitability for cloud deployment. Factors to consider include application architecture, data volume, performance requirements, and security needs. A comprehensive plan should outline the migration strategy (rehosting, replatforming, refactoring, repurchasing, or retiring), timelines, resource allocation, and risk mitigation strategies. This plan should also account for potential downtime and establish clear rollback procedures.

Data Migration Strategy

Data migration is a critical phase requiring careful planning and execution. The chosen method depends on factors like data volume, sensitivity, and application requirements. Options include using cloud-native tools, third-party migration services, or custom scripts. For large datasets, a phased approach is recommended, migrating data in increments to minimize disruption. Data validation and verification are essential after each migration phase to ensure data integrity. Consider implementing data encryption both during transit and at rest to safeguard sensitive information.
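Validation after each migration phase can be as simple as comparing row counts and an order-independent checksum of the source and target batches. The sketch below assumes rows are small tuples that fit in memory; the function names and sample rows are hypothetical, and large datasets would typically be checked per chunk or inside the database.

```python
import hashlib


def batch_digest(rows):
    """SHA-256 over the rows in sorted order, so row order does not matter."""
    h = hashlib.sha256()
    for row in sorted(rows):
        h.update(repr(row).encode("utf-8"))
        h.update(b"\n")  # separator between rows
    return h.hexdigest()


def verify_batch(source_rows, target_rows):
    """Return True when both batches hold the same rows."""
    if len(source_rows) != len(target_rows):
        return False
    return batch_digest(source_rows) == batch_digest(target_rows)


# Hypothetical rows for illustration.
source = [(1, "alice"), (2, "bob"), (3, "carol")]
good_copy = [(3, "carol"), (1, "alice"), (2, "bob")]
bad_copy = [(1, "alice"), (2, "bob"), (3, "car0l")]

print(verify_batch(source, good_copy))  # True
print(verify_batch(source, bad_copy))   # False
```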

Application Migration

The approach to application migration varies depending on the application’s architecture and complexity. For simple applications, a lift-and-shift approach (rehosting) might be sufficient. More complex applications may require replatforming (migrating to a different platform but retaining the application’s architecture) or refactoring (rearchitecting the application for optimal cloud performance). Thorough testing is essential throughout this phase to identify and resolve any compatibility issues or performance bottlenecks. Continuous integration and continuous delivery (CI/CD) pipelines can automate the deployment and testing process, accelerating the migration and reducing risks.

Testing and Validation

Before the final cutover, comprehensive testing is paramount. This involves testing application functionality, performance, and security in the cloud environment. Performance testing helps identify potential bottlenecks and ensure the application meets the required performance levels. Security testing verifies the security of the migrated applications and data in the cloud. Load testing simulates real-world usage to assess the application’s ability to handle peak loads. Thorough testing minimizes the risk of unexpected issues after the migration is complete.
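Load-test results are usually judged against a latency percentile rather than an average, because a few slow requests can hide behind a good mean. The following sketch uses the nearest-rank percentile method; the function names, the 500 ms target, and the sample latencies are assumptions for illustration.

```python
def percentile(samples, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    ordered = sorted(samples)
    # Integer ceiling of pct * n / 100, avoiding floating-point rounding.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[max(rank, 1) - 1]


def meets_latency_target(samples, pct, target_ms):
    return percentile(samples, pct) <= target_ms


# Hypothetical response times (ms) collected during a load test.
latencies = [120, 130, 110, 150, 900, 140, 135, 125, 115, 145]

print(percentile(latencies, 50))                 # 130
print(percentile(latencies, 95))                 # 900
print(meets_latency_target(latencies, 95, 500))  # False
```

Here the median looks healthy, but the 95th percentile exposes the slow outlier that would fail the assumed target.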

Cutover and Go-Live

The cutover phase involves switching from the traditional server environment to the cloud. The chosen approach (big bang or phased migration) depends on the risk tolerance and business requirements. A phased approach minimizes disruption by migrating applications and data incrementally. Real-time monitoring during the cutover is crucial to detect and address any issues promptly. Post-migration monitoring and optimization ensure the application runs efficiently and meets performance expectations in the cloud environment.

Post-Migration Optimization

After the migration is complete, continuous monitoring and optimization are essential to ensure the application’s performance and cost-effectiveness. This includes optimizing resource allocation, adjusting scaling parameters, and implementing cost-saving measures. Regular security assessments are crucial to identify and address any vulnerabilities. Post-migration reviews help identify areas for improvement and refine the migration process for future projects.

Flowchart Description

The flowchart depicting the server-to-cloud migration process would begin with a “Start” node. This would lead to a “Needs Assessment & Planning” box, followed by a “Data Migration Strategy” box. Next would be an “Application Migration” box, followed by a “Testing & Validation” box. A decision point would then follow, asking “Is everything working as expected?”. A “Yes” answer would lead to a “Cutover & Go-Live” box, followed by a “Post-Migration Optimization” box and finally an “End” node. A “No” answer from the decision point would loop back to the “Testing & Validation” box. Each box represents a key stage, and the arrows show the order in which the stages run. The loop at the decision point captures the iterative nature of testing, and the early boxes reflect the importance of thorough planning before the final cutover.
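The same flow can be sketched as a short function, with the decision point modeled as a loop over test outcomes. This is only an illustration of the control flow: the function name is hypothetical, and the list of booleans stands in for real test runs.

```python
def run_migration(test_outcomes):
    """Walk the flowchart; `test_outcomes` holds one boolean per test attempt."""
    visited = [
        "Start",
        "Needs Assessment & Planning",
        "Data Migration Strategy",
        "Application Migration",
    ]
    for passed in test_outcomes:
        visited.append("Testing & Validation")
        if passed:  # decision point: "Is everything working as expected?"
            visited += ["Cutover & Go-Live", "Post-Migration Optimization", "End"]
            return visited
        # "No" branch: loop back to Testing & Validation
    return visited  # tests never passed, so no cutover happened


path = run_migration([False, False, True])
print(path.count("Testing & Validation"))  # 3
print(path[-1])                            # End
```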

Questions and Answers

What is the difference between a virtual machine and a dedicated server?

A virtual machine (VM) shares a physical server’s resources with other VMs. This lowers cost, but contention for shared CPU, memory, or I/O can affect performance. A dedicated server gives a single tenant exclusive access to the entire machine, which makes performance more predictable but comes at a higher cost.

How secure is cloud storage compared to on-premises servers?

Both cloud and on-premises environments can be secured well, but the responsibility for security differs. Cloud providers handle much of the underlying infrastructure security, while the customer remains responsible for its own data, access controls, and configurations. On-premises security is entirely the responsibility of the organization. In either environment, the level of security depends on how the chosen measures are implemented.

What are the key factors to consider when choosing between cloud and server solutions?

Key factors include budget, scalability needs, security requirements, in-house IT expertise, application requirements, and compliance regulations. A thorough needs assessment is essential before making a decision.

What are some common cloud security threats?

Common threats include data breaches, denial-of-service attacks, malware infections, misconfigurations, and insider threats. Implementing robust security practices, including multi-factor authentication, encryption, and regular security audits, is crucial.