Defining Cloud Application Servers

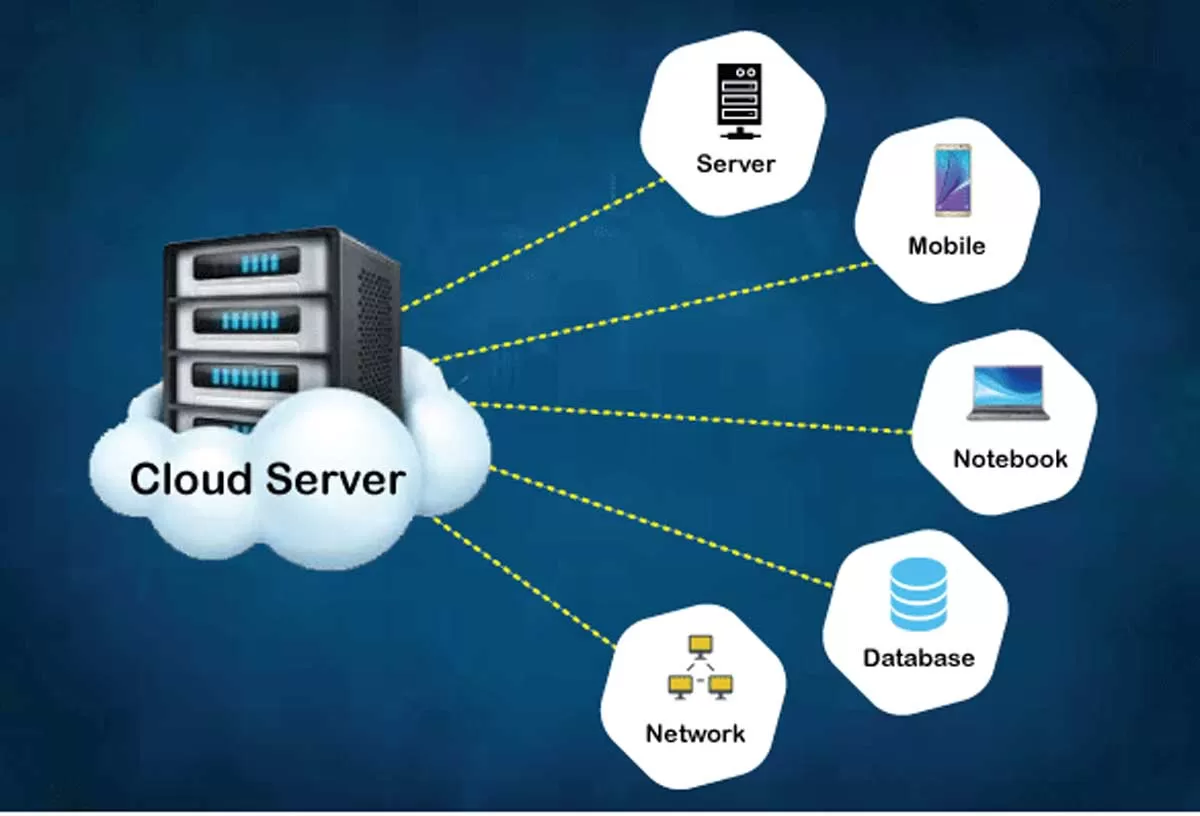

Cloud application servers are the backbone of many modern applications, providing a scalable and reliable platform for deploying and managing software. They essentially act as a bridge between the application code and the underlying cloud infrastructure, abstracting away the complexities of hardware management and allowing developers to focus on building and improving their applications.

Cloud application servers handle various crucial tasks, including managing application processes, allocating resources, ensuring security, and facilitating communication between different application components. This allows for greater flexibility and efficiency in deploying and managing applications compared to traditional on-premise server solutions.

Core Components of Cloud Application Server Architecture

A typical cloud application server architecture comprises several key components working in concert. These components ensure the smooth operation and scalability of the applications hosted on the server. The specific components and their implementation may vary depending on the cloud provider and the chosen application server technology, but common elements include a runtime environment (e.g., Java Virtual Machine, Node.js), a web server (e.g., Apache, Nginx), a database server (e.g., MySQL, PostgreSQL), and a load balancer to distribute traffic across multiple instances of the application for improved performance and resilience. Security features such as firewalls, intrusion detection systems, and access control mechanisms are also integral components. Further components might include monitoring tools, logging services, and deployment automation systems.

Public, Private, and Hybrid Cloud Application Server Deployments

The choice of deployment model – public, private, or hybrid cloud – significantly impacts the cost, security, and control aspects of a cloud application server. Public cloud deployments, such as those offered by AWS, Azure, and Google Cloud Platform, leverage shared infrastructure and resources, offering cost-effectiveness and scalability. However, this comes at the cost of reduced control over the underlying infrastructure and potential security concerns related to shared resources. Private cloud deployments, on the other hand, utilize dedicated infrastructure within an organization’s own data center or a colocation facility, offering greater control and security but potentially higher costs and reduced scalability compared to public clouds. Hybrid cloud deployments combine elements of both public and private clouds, allowing organizations to leverage the benefits of both models, such as using public cloud for scalable workloads and private cloud for sensitive data.

Common Use Cases for Cloud Application Servers Across Various Industries

Cloud application servers find applications across a vast spectrum of industries. In e-commerce, they power online stores, handling transactions, managing product catalogs, and supporting personalized shopping experiences. In the finance sector, they are crucial for handling online banking, trading platforms, and risk management systems, requiring high levels of security and reliability. Healthcare utilizes cloud application servers for electronic health records, telehealth platforms, and medical imaging systems, demanding robust data security and compliance with regulations like HIPAA. The media and entertainment industry relies on them for streaming services, content delivery networks, and digital rights management systems, emphasizing scalability and low latency. Manufacturing and logistics leverage cloud application servers for supply chain management, inventory tracking, and real-time data analysis, supporting efficient operations and decision-making.

Security Considerations for Cloud Application Servers

Securing cloud application servers requires a multifaceted approach, encompassing robust security practices, appropriate access controls, and a well-defined security architecture. Ignoring these aspects can lead to significant vulnerabilities, exposing sensitive data and compromising the availability and integrity of your applications. This section details best practices and architectural considerations for mitigating these risks.

Best Practices for Securing Cloud Application Servers

Implementing strong security measures is paramount for protecting cloud application servers. This involves a combination of preventative measures, proactive monitoring, and responsive incident handling. A layered security approach, incorporating multiple defense mechanisms, is crucial for effectively mitigating various threats. This ensures that even if one layer is breached, others remain in place to prevent complete compromise.

Access Control and Authentication in Cloud Applications

Access control and authentication form the bedrock of cloud application security. Robust authentication mechanisms, such as multi-factor authentication (MFA), prevent unauthorized access by verifying user identities through multiple independent factors. This could include something the user knows (password), something the user has (security token), and something the user is (biometric scan). Access control, on the other hand, restricts user permissions based on their roles and responsibilities, ensuring that only authorized users can access specific resources and perform certain actions. The principle of least privilege should always be applied, granting users only the minimum necessary permissions to perform their tasks. Regular audits of user access rights are essential to identify and revoke unnecessary privileges.
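
The combination of MFA, role-based permissions, and least privilege described above can be sketched in a few lines. This is a minimal illustration, not a production authorization system: the role names, permission strings, and the `User` type are all hypothetical, and a real deployment would typically load them from an identity provider or policy store.

```python
from dataclasses import dataclass, field

# Hypothetical role-to-permission mapping (illustrative names only).
ROLE_PERMISSIONS = {
    "viewer": {"reports:read"},
    "operator": {"reports:read", "servers:restart"},
    "admin": {"reports:read", "servers:restart", "users:manage"},
}


@dataclass
class User:
    name: str
    roles: set = field(default_factory=set)
    mfa_verified: bool = False  # set to True once a second factor has been checked


def is_allowed(user: User, permission: str) -> bool:
    """Allow an action only if MFA passed and one of the user's roles grants it."""
    if not user.mfa_verified:
        return False
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)


def unused_roles(user: User, used_permissions: set) -> set:
    """Audit helper: roles whose permissions the user never exercised."""
    return {
        role for role in user.roles
        if not ROLE_PERMISSIONS.get(role, set()) & used_permissions
    }
```

A periodic audit could call `unused_roles` with the permissions seen in access logs and flag the returned roles for review, which is one way to keep granted privileges close to the minimum actually needed.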

Security Architecture for a Cloud Application Server

A comprehensive security architecture for a cloud application server integrates multiple layers of security controls to protect against a wide range of threats. This architecture typically includes firewalls, intrusion detection systems (IDS), and intrusion prevention systems (IPS). Firewalls act as the first line of defense, filtering network traffic and blocking unauthorized access attempts. They can be implemented at various levels, including network firewalls, host-based firewalls, and application-level firewalls. Intrusion detection systems monitor network traffic and system activity for malicious patterns, alerting administrators to potential security breaches. Intrusion prevention systems go a step further, actively blocking or mitigating malicious activity in real-time. Regular security assessments and penetration testing are essential to identify and address vulnerabilities before they can be exploited. A well-defined incident response plan is also critical for effectively handling security incidents and minimizing their impact. This plan should include procedures for identifying, containing, eradicating, recovering from, and learning from security incidents.
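
To make the firewall's filtering role concrete, the sketch below evaluates a connection against an allowlist of source networks and destination ports, denying anything that matches no rule. The rules shown are hypothetical examples; real firewalls and cloud security groups support far richer matching (protocols, direction, rule priority).

```python
import ipaddress

# Hypothetical allowlist: (permitted source network, destination port).
ALLOW_RULES = [
    (ipaddress.ip_network("0.0.0.0/0"), 443),    # HTTPS from anywhere
    (ipaddress.ip_network("10.0.0.0/8"), 5432),  # database port from the internal network only
]


def is_permitted(source_ip: str, port: int) -> bool:
    """Default-deny check: permit only traffic matching an allow rule."""
    address = ipaddress.ip_address(source_ip)
    return any(
        address in network and port == allowed_port
        for network, allowed_port in ALLOW_RULES
    )
```

With these rules, an external client can reach port 443 but not the database port, while an internal host can reach the database but not, say, SSH on port 22.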

Scalability and Performance Optimization

Cloud application servers must be designed to handle varying levels of demand efficiently and reliably. Scalability and performance optimization are crucial aspects of ensuring a positive user experience and maintaining the operational efficiency of your application. Strategies for achieving these goals involve careful planning, proactive monitoring, and the implementation of appropriate technologies.

Effective scaling and performance optimization significantly impact the overall cost-effectiveness and resilience of your cloud application. By strategically addressing potential bottlenecks and implementing robust monitoring practices, you can minimize downtime, improve response times, and ensure your application remains responsive even during periods of peak demand.

Scaling Strategies for Fluctuating Workloads

Several strategies exist for scaling cloud application servers to accommodate fluctuating workloads. The choice of strategy often depends on the specific application, its architecture, and the nature of the anticipated demand variations. A common approach involves employing autoscaling features provided by cloud providers. These services automatically adjust the number of server instances based on predefined metrics, such as CPU utilization or request rate. Another approach is to use a microservices architecture, where the application is broken down into smaller, independent services that can be scaled individually. This allows for granular control over resource allocation and ensures that only the necessary components are scaled, optimizing resource utilization. Load balancing is also critical; distributing incoming traffic across multiple server instances prevents overload on any single server.
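
As an illustration of metric-driven autoscaling, the following sketch computes a desired instance count from average CPU utilization using a simple proportional rule. The target utilization and the minimum and maximum bounds are assumed values chosen for the example; managed autoscaling services apply their own policies, cooldowns, and smoothing.

```python
import math


def desired_instances(
    current: int,
    cpu_percent: float,
    target_percent: float = 60.0,  # assumed target utilization
    min_instances: int = 1,        # assumed lower bound
    max_instances: int = 10,       # assumed upper bound
) -> int:
    """Scale the fleet so average CPU moves toward the target, within bounds."""
    if current < 1:
        raise ValueError("current must be at least 1")
    proportional = math.ceil(current * cpu_percent / target_percent)
    return max(min_instances, min(max_instances, proportional))
```

For example, four instances running at 90% CPU against a 60% target would be scaled out to six, while the same fleet at 15% would be scaled in to one.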

Identifying and Resolving Performance Bottlenecks

Identifying performance bottlenecks is a crucial step in optimizing a cloud application server’s performance. Common bottlenecks include insufficient CPU or memory resources, slow database queries, network latency, and inefficient code. Profiling tools help pinpoint inefficient areas of the application code, which can then be improved through code reviews and performance testing. Slow database queries can be identified with database monitoring tools and addressed by rewriting the queries, adding indexes to the affected tables, or upgrading database hardware. Network latency can be reduced by choosing a cloud provider with low-latency networks, optimizing network configurations, or switching to a higher-bandwidth connection.

Monitoring and Managing Performance

A comprehensive monitoring and management plan is essential for maintaining the performance of a cloud application server environment. This involves continuously tracking key performance indicators (KPIs) such as CPU utilization, memory usage, network traffic, and response times. Real-time dashboards provide a visual representation of these metrics, enabling proactive identification of potential issues. Automated alerts can be configured to notify administrators of significant performance deviations, allowing for timely intervention. Log analysis is crucial for identifying and diagnosing errors and performance issues. Regular performance testing, both load testing and stress testing, helps to identify the application’s limits and ensure its ability to handle peak demand. For example, a monitoring system might track the average response time of API requests. If the average response time exceeds a predefined threshold, an alert is generated, notifying the operations team to investigate and resolve the issue.
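
The response-time alert described above can be sketched as a rolling average compared against a threshold. The class name, window size, and threshold are hypothetical; in practice this logic usually lives in the monitoring service rather than in application code.

```python
from collections import deque


class ResponseTimeMonitor:
    """Tracks a rolling average of response times and flags threshold breaches."""

    def __init__(self, threshold_ms: float, window: int = 5):
        self.threshold_ms = threshold_ms
        self.samples = deque(maxlen=window)  # keeps only the most recent samples

    def record(self, response_ms: float) -> bool:
        """Record one sample; return True if the rolling average exceeds the threshold."""
        self.samples.append(response_ms)
        average = sum(self.samples) / len(self.samples)
        return average > self.threshold_ms
```

A caller would invoke `record` for each API request and notify the operations team whenever it returns `True`. Averaging over a window rather than alerting on single slow requests is a design choice that reduces noise at the cost of slightly slower detection.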

Cost Optimization and Management

Effective cost management is crucial for the long-term success of any cloud application server deployment. Understanding the various pricing models offered by major cloud providers and implementing a proactive optimization strategy can significantly reduce operational expenditure and improve the overall return on investment. This section explores strategies for optimizing costs associated with cloud application servers.

Cloud Application Server Pricing Models

Major cloud providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) offer a variety of pricing models for their cloud application servers. These models often differ based on factors such as instance type, operating system, region, and usage duration. A thorough understanding of these models is essential for selecting the most cost-effective option for specific needs. Common pricing models include pay-as-you-go, reserved instances, and spot instances. Pay-as-you-go involves paying only for the resources consumed, while reserved instances offer discounted rates in exchange for a long-term commitment. Spot instances provide significant discounts for unused capacity but carry the risk of interruption. The optimal pricing model depends on the application’s requirements and predicted usage patterns. For example, a company with predictable, high-volume workloads might benefit from reserved instances, while a company with fluctuating demand might prefer pay-as-you-go or spot instances.
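
The trade-off between pay-as-you-go and a reserved commitment comes down to a break-even calculation. The sketch below uses made-up rates purely for illustration; actual prices vary by provider, region, instance type, and commitment term.

```python
HOURS_PER_MONTH = 730  # approximate average number of hours in a month


def on_demand_cost(hourly_rate: float, hours_used: float) -> float:
    """Pay-as-you-go: pay only for the hours actually consumed."""
    return hourly_rate * hours_used


def break_even_hours(hourly_rate: float, reserved_monthly_fee: float) -> float:
    """Monthly usage above which the reserved commitment becomes cheaper."""
    return reserved_monthly_fee / hourly_rate


def cheaper_model(hourly_rate: float, reserved_monthly_fee: float, hours_used: float) -> str:
    """Name the cheaper option for a given monthly usage."""
    if on_demand_cost(hourly_rate, hours_used) > reserved_monthly_fee:
        return "reserved"
    return "pay-as-you-go"
```

With a hypothetical on-demand rate of 0.125 per hour and a reserved fee of 50 per month, the break-even point is 400 hours. An always-on server (about 730 hours) favors the reservation, while a server used 200 hours a month does not.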

Cost Optimization Strategies

A well-defined cost optimization strategy is paramount for controlling cloud spending. This strategy should encompass several key areas, including right-sizing instances, leveraging automation, and regularly monitoring and analyzing resource usage. Right-sizing involves selecting the smallest instance type that meets the application’s performance requirements. Over-provisioning resources leads to unnecessary costs. Automation tools can help automate tasks such as scaling instances based on demand, ensuring that resources are only used when needed. Regular monitoring and analysis of resource usage patterns help identify areas for potential cost savings. For instance, identifying periods of low utilization can inform decisions about scaling down instances or utilizing less expensive instance types during those periods. This proactive approach minimizes waste and optimizes resource allocation.
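
Right-sizing can be expressed as choosing the cheapest instance type that still covers observed peak usage plus some headroom. The catalog, prices, and 20% headroom factor below are hypothetical values for illustration only.

```python
from typing import NamedTuple, Optional


class InstanceType(NamedTuple):
    name: str
    vcpus: int
    memory_gib: int
    hourly_cost: float


# Hypothetical catalog; real offerings and prices differ by provider.
CATALOG = [
    InstanceType("small", 2, 4, 0.05),
    InstanceType("medium", 4, 8, 0.10),
    InstanceType("large", 8, 16, 0.20),
]


def right_size(peak_vcpus: float, peak_memory_gib: float,
               headroom: float = 1.2) -> Optional[InstanceType]:
    """Return the cheapest type covering peak usage plus headroom, or None."""
    needed_cpu = peak_vcpus * headroom
    needed_memory = peak_memory_gib * headroom
    candidates = [
        t for t in CATALOG
        if t.vcpus >= needed_cpu and t.memory_gib >= needed_memory
    ]
    return min(candidates, key=lambda t: t.hourly_cost, default=None)
```

A `None` result signals that no single instance in the catalog fits, which is usually a cue to scale horizontally instead.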

Methods for Reducing Operational Costs

Several methods can directly reduce operational costs associated with cloud application servers. These include utilizing cost-effective instance types, optimizing application code for efficiency, and leveraging managed services. Selecting cost-effective instance types involves choosing instances optimized for specific workloads, avoiding unnecessary features, and opting for instances with lower processing power if possible without compromising application performance. Optimizing application code for efficiency can significantly reduce resource consumption. This may involve improving code efficiency, minimizing database queries, and employing caching strategies. Leveraging managed services offered by cloud providers can reduce operational overhead and costs. These services often provide fully managed infrastructure and handle tasks such as patching and updates, freeing up internal resources and reducing the need for dedicated personnel. For example, using a managed database service eliminates the need to manage the underlying database infrastructure.
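
As one example of a caching strategy that minimizes database queries, the sketch below wraps a lookup in Python's standard `functools.lru_cache`. The `fetch_product_from_db` function is a hypothetical stand-in for a real query, and the call counter exists only to make the effect visible.

```python
from functools import lru_cache

DB_CALLS = {"count": 0}  # instrumentation for the example only


def fetch_product_from_db(product_id: int) -> dict:
    """Hypothetical stand-in for a real (slow, billable) database query."""
    DB_CALLS["count"] += 1
    return {"id": product_id, "name": f"product-{product_id}"}


@lru_cache(maxsize=1024)
def get_product_name(product_id: int) -> str:
    """Return the product name, hitting the database only on a cache miss."""
    return fetch_product_from_db(product_id)["name"]
```

Repeated calls with the same `product_id` are served from memory. The trade-off is staleness: if the underlying row changes, the cached value must be invalidated, for example with `get_product_name.cache_clear()`.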

Integration with Other Cloud Services

Cloud application servers rarely operate in isolation. Their true power is unlocked through seamless integration with a wide array of other cloud services, creating robust and scalable applications. This integration extends to various aspects, from data storage and retrieval to user authentication and external API interactions. Effective integration significantly improves efficiency, reduces development time, and enhances overall application functionality.

The core principle behind this integration lies in leveraging APIs (Application Programming Interfaces). These APIs provide standardized methods for different cloud services to communicate and exchange data. Cloud application servers often utilize these APIs to interact with databases, storage solutions, messaging queues, and other essential services. This allows developers to focus on application logic rather than low-level infrastructure management.

API Integrations for Cloud Application Servers

Cloud providers offer comprehensive APIs for managing and interacting with their various services. For example, Amazon Web Services (AWS) provides APIs for services like Amazon S3 (Simple Storage Service) for object storage, Amazon RDS (Relational Database Service) for managed databases, and Amazon SNS (Simple Notification Service) for messaging. Similarly, Google Cloud Platform (GCP) offers APIs for services like Google Cloud Storage, Cloud SQL, and Cloud Pub/Sub. Microsoft Azure also provides a rich set of APIs for its cloud services, including Azure Blob Storage, Azure SQL Database, and Azure Service Bus. These APIs allow cloud application servers to seamlessly interact with these services, retrieving data, storing information, and managing notifications, all through programmatic calls. A common pattern involves using RESTful APIs, which are widely adopted and provide a consistent interface for data exchange. For example, a cloud application server might use the AWS S3 API to upload user-generated content to an S3 bucket, or the Google Cloud Storage API to retrieve user profile information from a Cloud Storage bucket.

Benefits of Serverless Functions with Cloud Application Servers

Serverless functions, also known as Function as a Service (FaaS), offer a compelling approach to enhance the capabilities of cloud application servers. These functions are small, independent units of code that execute in response to specific events. They are automatically scaled by the cloud provider, eliminating the need for server management. Integrating serverless functions with cloud application servers allows for efficient handling of asynchronous tasks, event-driven architectures, and microservices. For instance, a serverless function could be triggered when a new user registers on a cloud application server. This function could then automatically create a new database entry for the user, send a welcome email, and add the user to a notification list, all without requiring the main application server to handle these individual tasks. This approach can improve scalability, keep the main request path responsive by moving background work off the application server, and lower operational costs, because the provider charges only for the compute time the functions actually use. This contrasts with traditional cloud application servers, where resources are often allocated and charged for even when they are idle.
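
The user-registration example can be sketched as a single event handler. Everything here is a simplified assumption: the event shape, the handler signature, and the in-memory `database`, `outbox`, and `notification_list` objects stand in for a real database, email service, and subscription list, and each FaaS platform defines its own handler conventions.

```python
def handle_user_registered(event: dict, database: dict,
                           outbox: list, notification_list: set) -> dict:
    """Run the follow-up tasks for a 'user registered' event."""
    user_id = event["user_id"]
    email = event["email"]

    # 1. Create the database entry for the new user.
    database[user_id] = {"email": email}

    # 2. Queue a welcome email (a real function would call an email service).
    outbox.append({"to": email, "subject": "Welcome!"})

    # 3. Subscribe the user to notifications.
    notification_list.add(email)

    return {"status": "ok", "user_id": user_id}
```

Passing the dependencies in as arguments keeps the handler free of global state, which makes it straightforward to test locally before wiring it to a real event source.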

Disaster Recovery and Business Continuity

Ensuring the continued operation of your cloud application server is paramount. A robust disaster recovery (DR) plan and a commitment to high availability are essential for minimizing downtime and maintaining business continuity. This section outlines strategies for achieving these goals in a cloud environment.

A comprehensive disaster recovery plan for a cloud application server environment should address various failure scenarios, from minor service disruptions to major regional outages. The plan should detail procedures for quickly restoring services and minimizing data loss. This involves a combination of preventative measures, proactive monitoring, and well-defined recovery steps. Regular testing of the DR plan is crucial to ensure its effectiveness and identify areas for improvement.

Disaster Recovery Plan Design

A well-structured disaster recovery plan begins with a thorough risk assessment. This identifies potential threats, such as hardware failures, natural disasters, cyberattacks, and human error. Based on this assessment, the plan should define recovery time objectives (RTOs) and recovery point objectives (RPOs). RTO specifies the maximum acceptable downtime after an outage, while RPO defines the maximum acceptable data loss. For example, a critical application might have an RTO of 30 minutes and an RPO of 15 minutes, while a less critical application might tolerate a longer RTO and RPO. The plan should outline specific recovery procedures for each identified threat, including steps for data backup and restoration, server provisioning, application deployment, and user notification.
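
RTO and RPO targets translate directly into checks on backup frequency and recovery duration. The sketch below assumes the worst-case data loss equals the interval between backups and that recovery steps run sequentially; the step names and durations are hypothetical.

```python
from datetime import timedelta


def meets_rpo(backup_interval: timedelta, rpo: timedelta) -> bool:
    """Worst-case data loss is one full backup interval."""
    return backup_interval <= rpo


def meets_rto(recovery_steps: dict, rto: timedelta) -> bool:
    """Total sequential recovery time must fit within the RTO."""
    total = sum(recovery_steps.values(), timedelta())
    return total <= rto
```

Using the targets from the example above, backups every 10 minutes satisfy a 15-minute RPO, and a recovery made up of 10 minutes of provisioning, 12 minutes of data restoration, and 5 minutes of deployment (27 minutes in total) fits within a 30-minute RTO.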

High Availability and Redundancy Implementation

High availability and redundancy are fundamental to minimizing downtime. This is typically achieved through techniques such as load balancing, geographic redundancy, and automatic failover. Load balancing distributes traffic across multiple servers, preventing any single server from becoming overloaded. Geographic redundancy involves deploying servers in multiple geographic locations, protecting against regional outages. Automatic failover ensures that if a server fails, another server automatically takes over, minimizing disruption. For instance, using Amazon Web Services (AWS), one could deploy an application across multiple Availability Zones within a region behind a load balancer. If one Availability Zone experiences an outage, traffic is routed to the instances in the remaining zones with minimal user impact. Protecting against the loss of an entire region additionally requires deploying to more than one region.
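
Load balancing and automatic failover can be illustrated together with a round-robin balancer that skips servers marked unhealthy. This is a toy model with hypothetical zone names; managed load balancers perform the health checks themselves and handle connection draining, retries, and much more.

```python
class LoadBalancer:
    """Round-robin routing across servers, skipping any marked as down."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._next = 0  # index of the next server to try

    def mark_down(self, server: str) -> None:
        self.healthy.discard(server)

    def mark_up(self, server: str) -> None:
        if server in self.servers:
            self.healthy.add(server)

    def route(self) -> str:
        """Return the next healthy server, or raise if none remain."""
        for _ in range(len(self.servers)):
            server = self.servers[self._next % len(self.servers)]
            self._next += 1
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")
```

If the server in one zone is marked down after a failed health check, subsequent calls to `route` simply rotate through the remaining zones, which is the essence of automatic failover.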

Best Practices for Business Continuity

Several best practices contribute to ensuring business continuity during cloud application server outages. These include regular data backups to geographically separate locations, rigorous testing of the DR plan, and comprehensive monitoring of the application server environment. Automated alerts and notifications are vital for timely responses to incidents. Moreover, a well-defined escalation process ensures that appropriate personnel are notified and involved in the recovery process. Finally, regular training for IT staff on the DR plan and recovery procedures is essential to ensure smooth execution during an actual event. This training should include simulated disaster scenarios to build familiarity and confidence.

Deployment and Management Tools

Deploying and managing cloud application servers efficiently requires the right tools. The choice of tool depends heavily on factors such as the complexity of the application, the chosen cloud provider, and the team’s expertise. A well-chosen tool can significantly streamline the deployment process, reduce operational overhead, and improve overall system reliability.

Effective deployment and management tools offer a range of functionalities, including automated provisioning, configuration management, monitoring, and scaling capabilities. These tools are crucial for maintaining the health, performance, and security of cloud-based applications. This section explores various tools and provides a practical guide to deployment and best practices for ongoing management.

Comparison of Cloud Application Server Deployment Tools

Several tools facilitate the deployment of cloud application servers. Popular choices include cloud provider-specific tools (e.g., AWS Elastic Beanstalk, Google Cloud Run, Azure App Service) and more general-purpose tools like Docker and Kubernetes. Provider-specific tools often offer tighter integration with the underlying infrastructure, simplifying deployment and management within that specific ecosystem. However, they may lack the flexibility and portability of more general-purpose solutions. Docker and Kubernetes, on the other hand, offer greater portability and flexibility, allowing deployment across various cloud providers and on-premises environments. However, they may require more expertise to configure and manage effectively. The optimal choice depends on the specific needs and priorities of the project.

Step-by-Step Guide: Deploying a Cloud Application Server using AWS Elastic Beanstalk

This guide illustrates deploying a simple Node.js application using AWS Elastic Beanstalk. This is just one example; deployment processes vary significantly based on the application and chosen platform.

- Create an AWS account and configure necessary permissions: Ensure you have an AWS account with appropriate permissions to create and manage resources within Elastic Beanstalk.

- Package your application: Create a deployment package for your application. This typically involves bundling your application code, dependencies, and configuration files into a zip or tarball archive.

- Create an Elastic Beanstalk application: Use the AWS Management Console, AWS CLI, or SDK to create a new Elastic Beanstalk application. Specify a name and region.

- Create an Elastic Beanstalk environment: Within your application, create a new environment. Choose an appropriate platform (e.g., Node.js) and instance type.

- Upload your application: Upload your application package to the newly created environment. Elastic Beanstalk will automatically handle the deployment process, including code deployment, server configuration, and load balancing.

- Monitor the deployment: Track the deployment progress through the Elastic Beanstalk console. Address any errors or issues that may arise during the deployment process.

- Test your application: Once the deployment is complete, thoroughly test your application to ensure it functions as expected.
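
The packaging step above can be automated with Python's standard `zipfile` module. This is a sketch under stated assumptions: the excluded directory names are illustrative defaults, and the function expects the archive to be written outside the source directory. Paths are stored relative to the project root so that files such as `package.json` sit at the top level of the archive rather than inside a parent folder.

```python
import zipfile
from pathlib import Path


def build_bundle(source_dir, archive_path, excluded_dirs=(".git",)) -> list:
    """Zip every file under source_dir, skipping excluded directories.

    Returns the list of archive paths that were added.
    archive_path should be located outside source_dir.
    """
    source = Path(source_dir)
    excluded = set(excluded_dirs)
    added = []
    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED) as bundle:
        for path in sorted(source.rglob("*")):
            relative = path.relative_to(source)
            if path.is_file() and not excluded.intersection(relative.parts):
                bundle.write(path, relative.as_posix())
                added.append(relative.as_posix())
    return added
```

A call such as `build_bundle("my-app", "my-app.zip")` (hypothetical paths) would produce an archive ready to upload in the "Upload your application" step.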

Best Practices for Managing Cloud Application Servers

Effective management is crucial for maintaining the performance, security, and cost-effectiveness of cloud application servers throughout their lifecycle.

Establishing robust management practices involves several key areas:

- Automated provisioning and configuration management: Utilize tools like Infrastructure as Code (IaC) to automate the creation and configuration of servers, ensuring consistency and repeatability.

- Continuous monitoring and logging: Implement comprehensive monitoring and logging to track application performance, resource utilization, and potential issues. Utilize tools that provide real-time alerts and dashboards.

- Regular security patching and updates: Maintain up-to-date security patches and software updates to mitigate vulnerabilities and protect against threats. Automate this process where possible.

- Capacity planning and scaling: Proactively plan for capacity needs based on anticipated demand. Implement auto-scaling features to dynamically adjust resources based on actual usage.

- Regular backups and disaster recovery planning: Establish a robust backup and recovery strategy to protect against data loss and ensure business continuity in case of failures.

- Cost optimization strategies: Regularly review and optimize resource utilization to minimize cloud spending. Utilize tools and techniques to identify and address areas of inefficiency.

Choosing the Right Cloud Application Server Platform

Selecting the appropriate cloud application server platform is crucial for the success of any cloud-based application. The decision hinges on a careful evaluation of various factors, including application requirements, budget constraints, and long-term scalability needs. This section will compare leading platforms and provide a framework for making an informed choice.

Comparison of Cloud Application Server Platforms

Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) are the dominant players in the cloud computing market, each offering a comprehensive suite of application server solutions. AWS boasts a mature and extensive ecosystem, Azure excels in hybrid cloud integration, and GCP stands out with its advanced data analytics capabilities. However, there is no single “best” platform; the optimal choice depends on the specific needs of the application.

Key Factors in Platform Selection

Several key factors must be considered when choosing a cloud application server platform. These include:

- Application Requirements: The application’s specific needs, such as computing power, storage capacity, and network bandwidth, dictate the platform capabilities required. A resource-intensive application might need large or specialized instance types, while a smaller application may be well served by lower-cost tiers; compare what each provider offers against those needs rather than assuming one provider suits a given size of workload.

- Scalability and Elasticity: The platform’s ability to scale resources up or down based on demand is paramount. AWS’s Auto Scaling and Azure’s Virtual Machine Scale Sets provide excellent examples of dynamic scaling capabilities. GCP’s managed instance groups offer similar functionality. The choice depends on the level of automation and control desired.

- Security: Robust security features are essential. All three platforms provide comprehensive security tools, including firewalls, intrusion detection systems, and encryption options. However, the specific security features and their implementation may vary, influencing the final decision.

- Cost Optimization: Cloud costs can quickly escalate if not managed effectively. Each platform offers different pricing models and cost management tools. Careful analysis of pricing structures and the use of cost optimization strategies are vital for long-term cost-effectiveness. For instance, using Reserved Instances on AWS or Spot Virtual Machines on Azure can significantly reduce costs.

- Integration with Existing Systems: Seamless integration with existing on-premises infrastructure or other cloud services is often critical. Azure’s strong hybrid cloud capabilities might be preferred for organizations with significant on-premises investments, while GCP’s extensive data analytics tools could be advantageous for data-driven applications.

- Developer Expertise and Support: The availability of skilled developers and the level of technical support offered by the platform provider are crucial considerations. The platform’s documentation, community support, and training resources all play a significant role in successful application deployment and maintenance.

Decision-Making Framework

A structured approach is necessary for selecting the optimal cloud application server platform. This framework involves:

- Define Application Requirements: Clearly specify the application’s functional and non-functional requirements, including performance, security, scalability, and cost constraints.

- Evaluate Platform Capabilities: Compare the features and capabilities of AWS, Azure, and GCP against the defined application requirements. Consider factors such as compute power, storage options, networking capabilities, and security features.

- Assess Cost Implications: Analyze the pricing models of each platform and estimate the total cost of ownership (TCO) for the application’s lifecycle. Consider factors such as compute costs, storage costs, networking costs, and management costs.

- Evaluate Integration and Migration: Assess the ease of integration with existing systems and the complexity of migrating the application to the chosen platform. Consider factors such as data migration, application compatibility, and integration with other services.

- Consider Support and Expertise: Evaluate the level of support and expertise available from each platform provider. Consider factors such as documentation, community support, and training resources.

- Conduct Proof-of-Concept (POC): Before making a final decision, conduct a POC to test the chosen platform’s capabilities and performance in a real-world environment. This will help validate the assumptions made during the evaluation process.
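
One way to make this framework repeatable is a weighted scoring matrix: assign each criterion a weight, score each candidate platform per criterion, and rank by weighted average. The platform names, weights, and scores below are placeholders, not an assessment of any real provider.

```python
def weighted_score(scores: dict, weights: dict) -> float:
    """Weighted average of per-criterion scores."""
    total_weight = sum(weights.values())
    return sum(scores[criterion] * weight
               for criterion, weight in weights.items()) / total_weight


def rank_platforms(candidates: dict, weights: dict) -> list:
    """Platform names ordered from highest to lowest weighted score."""
    return sorted(
        candidates,
        key=lambda name: weighted_score(candidates[name], weights),
        reverse=True,
    )


# Placeholder inputs: weights reflect priorities, scores use a 1-5 scale.
WEIGHTS = {"performance": 3, "security": 3, "cost": 2, "integration": 1, "support": 1}
CANDIDATES = {
    "platform_a": {"performance": 4, "security": 5, "cost": 2, "integration": 3, "support": 4},
    "platform_b": {"performance": 3, "security": 4, "cost": 5, "integration": 5, "support": 3},
    "platform_c": {"performance": 5, "security": 3, "cost": 3, "integration": 2, "support": 3},
}
```

Calling `rank_platforms(CANDIDATES, WEIGHTS)` orders the candidates by fit. The ranking is only as good as the weights and scores, so the result is best treated as input to the proof-of-concept step rather than a final answer.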

Monitoring and Logging

Effective monitoring and logging are crucial for maintaining the health, security, and performance of a cloud application server. A robust strategy proactively identifies potential issues, facilitates rapid troubleshooting, and ensures business continuity. This involves implementing a comprehensive system that captures relevant data, analyzes it for insights, and provides actionable alerts.

Comprehensive Monitoring and Logging Strategy Design

A comprehensive monitoring and logging strategy requires a multi-faceted approach encompassing various aspects of the cloud application server’s operation. This includes system-level metrics, application-specific performance indicators, and security-related events. The strategy should define which metrics to collect, how frequently to collect them, where to store the data, and how to analyze it for meaningful insights. It should also detail the escalation procedures for critical events and alerts. The selection of monitoring and logging tools will heavily depend on the specific needs of the application and the chosen cloud provider.

System-Level Metrics Monitoring

System-level metrics provide a foundational understanding of the server’s overall health and resource utilization. Key metrics include CPU utilization, memory usage, disk I/O, network traffic, and process activity. These metrics offer insights into potential bottlenecks and resource exhaustion, enabling proactive intervention before performance degradation impacts the application. For example, consistently high CPU utilization might indicate a need for scaling up the server instance or optimizing application code. Similarly, low disk space can trigger alerts that allow for timely intervention before the server runs out of space. Tools such as Amazon CloudWatch (AWS), Cloud Monitoring (Google Cloud, formerly Stackdriver), and Azure Monitor provide comprehensive dashboards for visualizing these metrics.

Application Performance Monitoring

Application performance monitoring (APM) focuses on the health and responsiveness of the application itself. This involves tracking metrics such as response times, error rates, transaction throughput, and database query performance. APM tools often provide detailed traces of requests, identifying slowdowns and bottlenecks within the application code. By analyzing these traces, developers can pinpoint specific areas requiring optimization. For instance, a slow database query might be optimized by adding indexes or restructuring the database schema. Popular APM tools include Datadog, New Relic, and Dynatrace.
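To illustrate how raw request data turns into the metrics named above, here is a minimal sketch that computes latency percentiles and an error rate. The `RequestRecord` type and the choice to count HTTP 5xx responses as errors are assumptions; commercial APM tools use their own data models and may define percentiles differently from the nearest-rank method used here.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RequestRecord:
    duration_ms: float  # time taken to process the request
    status_code: int    # HTTP status returned to the client


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% at or below it."""
    if not values:
        raise ValueError("percentile of empty list")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(records: List[RequestRecord]) -> Dict[str, float]:
    """Aggregate a batch of requests into response-time and error-rate figures."""
    if not records:
        raise ValueError("no records to summarize")
    durations = [r.duration_ms for r in records]
    errors = sum(1 for r in records if r.status_code >= 500)
    return {
        "count": len(records),
        "p50_ms": percentile(durations, 50),
        "p95_ms": percentile(durations, 95),
        "error_rate_pct": 100.0 * errors / len(records),
    }
```

Percentiles are generally more informative than an average here, because a small number of very slow requests can hide behind a healthy-looking mean.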

Log Analysis for Security and Troubleshooting

Log analysis plays a critical role in both security and troubleshooting. Application logs provide valuable insights into the behavior of the application, including user actions, error messages, and security-related events. Analyzing these logs can help identify security breaches, diagnose application errors, and understand user behavior patterns. For example, unusual login attempts or unexpected access to sensitive data can be detected through log analysis, enabling timely responses to security threats. Similarly, frequent error messages in application logs can pinpoint code bugs or configuration issues that need to be addressed. Tools such as the ELK Stack (Elasticsearch, Logstash, Kibana) and Splunk are widely used for log aggregation and analysis.
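As a small illustration of detecting unusual login attempts, the sketch below counts failed logins per source IP address. The log line format (`LOGIN_FAILED user=... ip=...`), the function name `suspicious_ips`, and the threshold of three failures are all hypothetical; real applications log in many different formats, and production systems would normally apply a time window as well.

```python
import re
from collections import Counter
from typing import Dict, Iterable

# Assumed line format, for illustration only:
#   2024-05-01T12:00:00Z LOGIN_FAILED user=alice ip=203.0.113.7
FAILED_LOGIN = re.compile(r"LOGIN_FAILED user=(?P<user>\S+) ip=(?P<ip>\S+)")


def suspicious_ips(lines: Iterable[str], threshold: int = 3) -> Dict[str, int]:
    """Return source IPs with at least `threshold` failed logins, with counts."""
    failures: Counter = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            failures[match.group("ip")] += 1
    return {ip: count for ip, count in failures.items() if count >= threshold}
```

The same pattern (parse, group, count, compare against a threshold) underlies many of the queries that log platforms run at much larger scale.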

Key Metrics to Monitor

| Metric | Description | Unit | Importance |
|---|---|---|---|
| CPU Utilization | Percentage of CPU capacity in use | % | Indicates potential bottlenecks |
| Memory Usage | Amount of RAM used | MB/GB | Highlights memory leaks or insufficient allocation |
| Disk I/O | Read/write operations on disk | IOPS | Shows potential disk performance issues |
| Network Traffic | Incoming and outgoing network data | Mbps | Identifies network congestion or bandwidth limitations |
| Response Time | Time taken to process a request | ms | Measures application performance |
| Error Rate | Percentage of failed requests | % | Indicates application stability |
| Database Query Time | Time taken to execute database queries | ms | Highlights database performance issues |

Case Studies of Cloud Application Server Implementations

This section examines real-world examples of cloud application server deployments, highlighting successes, challenges, and lessons learned. Analyzing these case studies provides valuable insights for planning and executing your own cloud application server projects. Understanding both successful and unsuccessful implementations allows for proactive mitigation of potential issues and informed decision-making.

Successful Cloud Application Server Implementation: E-commerce Platform for a Global Retailer

A major international retailer migrated its e-commerce platform to a cloud application server infrastructure using Amazon Web Services (AWS). The previous on-premises solution struggled to handle peak traffic during sales events, resulting in website crashes and lost revenue. The migration to AWS allowed for significant scalability and improved performance. The retailer leveraged AWS’s auto-scaling capabilities to dynamically adjust server capacity based on real-time demand. This kept the website available even during peak periods, which increased sales conversion rates and customer satisfaction. Furthermore, the move to the cloud reduced infrastructure management overhead, freeing up IT resources to focus on other strategic initiatives. The implementation involved a phased rollout, minimizing disruption to the existing e-commerce operations. Robust monitoring and logging were implemented to proactively identify and address potential issues.
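The case study does not describe the retailer’s scaling policy, so the following is only a generic sketch of how demand-based scaling can be reasoned about: a proportional rule that resizes the fleet so a per-instance metric moves back toward a target. The function name, parameters, and numbers are hypothetical, and this is not a reproduction of the algorithm AWS uses internally.

```python
import math


def desired_instances(
    current: int,
    observed_metric: float,
    target_metric: float,
    min_instances: int,
    max_instances: int,
) -> int:
    """Proportional scaling rule, clamped to the allowed fleet size.

    If each of `current` instances is at `observed_metric` (for example,
    average CPU %), scale so the average would land near `target_metric`.
    """
    if current < 1 or target_metric <= 0:
        raise ValueError("current must be >= 1 and target_metric must be > 0")
    raw = math.ceil(current * observed_metric / target_metric)
    return max(min_instances, min(max_instances, raw))


# Four instances at 90% average CPU with a 60% target: scale out to six.
print(desired_instances(4, 90.0, 60.0, min_instances=2, max_instances=20))
```

The minimum and maximum bounds matter as much as the formula: the floor protects availability during quiet periods, and the ceiling caps cost if the metric misbehaves.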

Challenges and Solutions in a Real-World Cloud Application Server Deployment: Financial Services Company

A large financial services company implemented a cloud application server for its core banking system. A major challenge was ensuring regulatory compliance and maintaining data security in a cloud environment. This was addressed by implementing stringent security protocols, including encryption at rest and in transit, access control lists, and regular security audits. Another challenge was integrating the new cloud-based system with existing on-premises legacy systems. This was overcome using application programming interfaces (APIs) and message queues to facilitate seamless data exchange between the two environments. Performance optimization was also a significant consideration. The company used load balancing and caching techniques to ensure optimal application performance under heavy load. Finally, careful planning and execution of the migration process were crucial to minimize downtime and disruption to business operations.

Lessons Learned from a Failed Cloud Application Server Implementation: Healthcare Provider

A healthcare provider attempted to migrate its patient management system to a cloud application server without adequate planning and testing. The initial deployment suffered from performance issues due to insufficient server capacity and poorly configured network settings. This resulted in significant downtime and impacted patient care. The lack of a comprehensive disaster recovery plan further exacerbated the situation when a regional outage affected the cloud provider’s infrastructure. The lessons learned from this failure highlight the importance of thorough capacity planning, rigorous testing, and a robust disaster recovery strategy. The healthcare provider subsequently revised its approach, engaging experienced cloud consultants and implementing a more robust solution with comprehensive testing and a well-defined disaster recovery plan.

FAQ Resource

What are the key differences between IaaS, PaaS, and SaaS in the context of cloud application servers?

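IaaS (Infrastructure as a Service) provides virtualized hardware; PaaS (Platform as a Service) offers a managed platform for application development and deployment; SaaS (Software as a Service) delivers finished software applications over the internet. Cloud application servers are typically run on IaaS, where you install and manage the server software yourself, or on PaaS, where the provider manages the runtime for you. With SaaS, the vendor operates the application server and it is not exposed to you. The choice depends on the level of control and management desired.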

How do I choose the right cloud provider for my application server needs?

Consider factors such as pricing models, geographic location of data centers, available services (databases, storage, etc.), security certifications, and level of support offered by different providers (AWS, Azure, GCP, etc.). Align your choice with your application’s specific requirements and your organization’s technical expertise.

What are some common security threats to cloud application servers, and how can they be mitigated?

Common threats include DDoS attacks, unauthorized access, data breaches, and malware. Mitigation strategies include implementing robust access control, using firewalls and intrusion detection systems, regularly patching vulnerabilities, employing encryption, and adhering to security best practices.