Defining Cloud-Based Servers

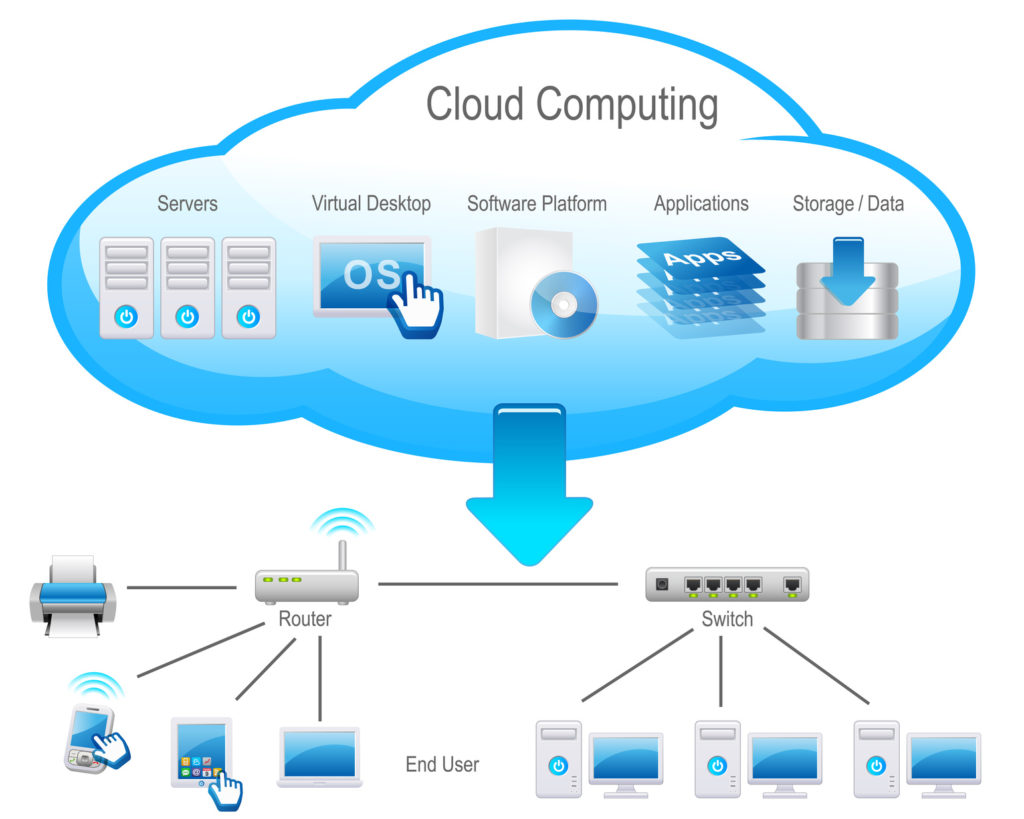

Cloud-based servers represent a fundamental shift in how computing resources are accessed and managed. Instead of relying on physical servers housed within an organization’s own data center (on-premise), cloud servers are virtualized resources provided over the internet by a third-party provider. This allows for greater scalability, flexibility, and cost-effectiveness.

Cloud-based servers leverage a distributed architecture, often employing virtualization technology to create multiple virtual servers from a single physical server. This virtualization allows for efficient resource allocation and utilization, maximizing the capacity of the underlying hardware. The architecture typically includes a network of interconnected data centers globally distributed to ensure high availability and low latency for users around the world. These data centers house the physical servers, networking equipment, and storage systems that support the virtual servers accessed by users. Sophisticated management software orchestrates the allocation and monitoring of these resources, ensuring optimal performance and security.

Cloud Servers versus On-Premise Servers

The primary difference between cloud servers and traditional on-premise servers lies in their location and management. On-premise servers are physically located within an organization’s own data center, requiring significant upfront investment in hardware, infrastructure, and IT personnel for maintenance and management. Cloud servers, conversely, are managed by a third-party provider, eliminating the need for extensive on-site infrastructure and reducing the burden of ongoing maintenance. This translates to reduced capital expenditure (CAPEX) for cloud users, shifting the cost model towards operational expenditure (OPEX). Scalability is another key differentiator; cloud servers can be easily scaled up or down based on demand, whereas scaling on-premise servers requires significant planning and time. Finally, cloud providers invest heavily in physical and infrastructure security, although under the shared responsibility model the customer remains responsible for securing its own configurations, data, and access controls.

Cloud Service Models

Cloud computing offers various service models (distinct from deployment models such as public, private, and hybrid cloud), each catering to different needs and preferences. Understanding these models is crucial for selecting the appropriate solution for specific business requirements.

Infrastructure as a Service (IaaS): IaaS provides the most fundamental level of cloud computing, offering virtualized computing resources such as virtual machines (VMs), storage, and networking. Users retain complete control over the operating system and applications, offering maximum flexibility. Examples include Amazon EC2, Microsoft Azure Virtual Machines, and Google Compute Engine.

Platform as a Service (PaaS): PaaS provides a platform for developing, deploying, and managing applications without the need to manage the underlying infrastructure. The provider handles the operating system, middleware, and runtime environment, allowing developers to focus solely on application development. Examples include AWS Elastic Beanstalk, Azure App Service, and Google App Engine.

Software as a Service (SaaS): SaaS delivers software applications over the internet, eliminating the need for users to install or manage the software. Users simply access the application through a web browser or dedicated client. Examples include Salesforce, Microsoft 365, and Google Workspace.
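
In practice, these three models differ mainly in how responsibility is split between provider and customer. The Python sketch below encodes a deliberately simplified version of that split. The layer names and the single cut-off point per model are illustrative assumptions, not any provider’s official responsibility matrix.

```python
# Simplified, hypothetical stack of layers, from the bottom up.
LAYERS = ["hardware", "virtualization", "operating_system",
          "middleware", "runtime", "application", "data"]

# Index of the first layer the customer manages under each model.
# Everything below that index is managed by the provider.
CUSTOMER_STARTS_AT = {
    "IaaS": LAYERS.index("operating_system"),
    "PaaS": LAYERS.index("application"),
    "SaaS": LAYERS.index("data"),
}


def managed_by(model: str, layer: str) -> str:
    """Return 'customer' or 'provider' for a layer under a service model."""
    if model not in CUSTOMER_STARTS_AT:
        raise ValueError(f"unknown service model: {model}")
    if layer not in LAYERS:
        raise ValueError(f"unknown layer: {layer}")
    if LAYERS.index(layer) >= CUSTOMER_STARTS_AT[model]:
        return "customer"
    return "provider"
```

For example, `managed_by("IaaS", "operating_system")` returns `"customer"`, while `managed_by("PaaS", "runtime")` returns `"provider"`.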

Comparison of Cloud Providers

Choosing the right cloud provider depends on several factors, including cost, performance, features, and compliance requirements. The following table compares three major cloud providers: AWS, Azure, and Google Cloud.

| Feature | AWS | Azure | Google Cloud |
|---|---|---|---|
| Global Infrastructure | Extensive global network of data centers | Large global footprint with extensive reach | Robust global infrastructure with strong geographic coverage |
| Compute Services | EC2, Lambda, Lightsail | Virtual Machines, Azure Functions, Azure Container Instances | Compute Engine, Cloud Functions, Kubernetes Engine |
| Storage Services | S3, EBS, Glacier | Blob storage, Azure Files, Azure Disks | Cloud Storage, Persistent Disk, Archive Storage |
| Database Services | RDS, DynamoDB, Redshift | SQL Database, Cosmos DB, Azure Database for MySQL | Cloud SQL, Cloud Spanner, BigQuery |
| Pricing | Pay-as-you-go, various pricing models | Pay-as-you-go, various pricing models | Pay-as-you-go, various pricing models |
| Security | Robust security features and compliance certifications | Comprehensive security features and compliance certifications | Strong security features and compliance certifications |

Security Aspects of Cloud-Based Servers

Cloud-based servers offer numerous advantages, but their inherent nature introduces unique security challenges. Understanding these threats and implementing robust security measures is crucial for maintaining data integrity, availability, and confidentiality. This section will explore common security threats, best practices, a sample security plan, and mitigation strategies for securing a cloud-based server environment.

Common Security Threats Associated with Cloud-Based Servers

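Cloud environments, while offering scalability and flexibility, present a larger attack surface than traditional on-premise servers, partly because their management consoles and APIs are reachable over the internet. Common threats include misconfigured resources (such as overly permissive security groups or publicly readable storage), compromised credentials, insecure APIs, data breaches, denial-of-service attacks, and insider misuse, all of which require proactive defense strategies. These threats often exploit vulnerabilities in the shared infrastructure, the cloud provider’s security measures, or the customer’s own configurations and practices.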

Best Practices for Securing Cloud-Based Servers

Implementing a layered security approach is essential for mitigating the risks associated with cloud-based servers. This involves combining multiple security controls to protect against a wide range of threats. A robust security posture requires attention to both technical and procedural aspects.

- Strong Authentication and Authorization: Employ multi-factor authentication (MFA) for all user accounts and implement role-based access control (RBAC) to restrict access to sensitive resources based on user roles and responsibilities.

- Data Encryption: Encrypt data both in transit (using HTTPS and VPNs) and at rest (using encryption at the database and storage levels). This safeguards data even if a breach occurs.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration testing to identify vulnerabilities and weaknesses in the cloud infrastructure and applications. This proactive approach helps to identify and address potential security gaps before they can be exploited.

- Network Security: Implement firewalls, intrusion detection/prevention systems (IDS/IPS), and virtual private clouds (VPCs) to secure network traffic and isolate sensitive resources.

- Vulnerability Management: Regularly update and patch operating systems, applications, and other software components to address known vulnerabilities. This is a critical step in preventing exploitation of common security flaws.

- Security Information and Event Management (SIEM): Utilize SIEM tools to collect, analyze, and correlate security logs from various sources, providing real-time threat detection and incident response capabilities.

- Regular Backups and Disaster Recovery Planning: Implement a robust backup and disaster recovery plan to ensure business continuity in the event of a security incident or other unforeseen event. This includes regular backups to a separate, secure location and a well-defined recovery procedure.
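
The first practice, MFA combined with RBAC, can be sketched in a few lines of Python. The role names, permission strings, and the `User` type are hypothetical. In practice, these checks are enforced by the provider’s identity service (such as AWS IAM or Microsoft Entra ID) rather than by application code.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping; names are illustrative only.
ROLE_PERMISSIONS = {
    "viewer": {"server:read"},
    "operator": {"server:read", "server:restart"},
    "admin": {"server:read", "server:restart", "server:delete", "iam:manage"},
}


@dataclass
class User:
    name: str
    roles: set
    mfa_verified: bool


def is_allowed(user: User, action: str) -> bool:
    """Allow an action only if the user passed MFA and one of their roles grants it."""
    if not user.mfa_verified:
        return False  # deny everything until the second factor succeeds
    # Unknown roles grant nothing (least privilege by default).
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)
```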

Sample Security Plan for a Hypothetical Cloud-Based Server Environment

Consider a hypothetical e-commerce platform hosted on a cloud-based server. A comprehensive security plan would include:

- Risk Assessment: Identify potential threats and vulnerabilities specific to the e-commerce platform, such as data breaches, denial-of-service attacks, and SQL injection.

- Security Controls Implementation: Implement the best practices outlined above, including MFA, data encryption, firewalls, intrusion detection systems, and regular security audits.

- Incident Response Plan: Develop a detailed incident response plan outlining procedures for handling security incidents, including communication protocols, containment strategies, and post-incident analysis.

- Compliance Requirements: Adhere to relevant industry regulations and compliance standards, such as PCI DSS for handling payment card information.

- Regular Monitoring and Review: Continuously monitor the security posture of the cloud-based server environment and regularly review and update the security plan to address emerging threats and vulnerabilities.

Potential Vulnerabilities and Mitigation Strategies for Cloud Server Security

Cloud environments are susceptible to various vulnerabilities, and proactive mitigation is key. For example, misconfigured security groups can expose servers to unauthorized access. Mitigation involves carefully configuring security groups to allow only necessary traffic. Another example is insufficient logging and monitoring, hindering threat detection. Implementing comprehensive logging and monitoring with SIEM tools helps to address this. Finally, inadequate access control can lead to unauthorized data access. Implementing robust RBAC and MFA effectively mitigates this risk.
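
The following Python sketch illustrates the first mitigation. It flags inbound rules that expose any port other than HTTP (80) and HTTPS (443) to the entire internet. The `IngressRule` structure is a simplified, hypothetical stand-in for a provider’s security group format. A real audit would read the rules through the provider’s API.

```python
import ipaddress
from typing import NamedTuple


class IngressRule(NamedTuple):
    """Simplified inbound rule: an inclusive port range and a source CIDR."""
    from_port: int
    to_port: int
    cidr: str


# Ports this hypothetical web server is meant to expose to everyone.
PUBLIC_PORTS_ALLOWED = {80, 443}


def risky_rules(rules):
    """Return rules that open any port other than 80/443 to the whole internet."""
    findings = []
    for rule in rules:
        network = ipaddress.ip_network(rule.cidr, strict=False)
        open_to_world = network.prefixlen == 0  # 0.0.0.0/0 or ::/0
        ports = set(range(rule.from_port, rule.to_port + 1))
        if open_to_world and not ports <= PUBLIC_PORTS_ALLOWED:
            findings.append(rule)
    return findings
```

Rules restricted to a known address range, such as SSH from an office network, are not flagged. The check only targets rules reachable from any address.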

Cost Optimization of Cloud-Based Servers

Optimizing cloud server costs is crucial for maintaining a healthy budget and maximizing the return on investment. Effective cost management involves a strategic approach encompassing various aspects of cloud resource utilization, pricing models, and operational efficiency. This section explores strategies and techniques for achieving significant cost savings without compromising performance or reliability.

Strategies for Optimizing Cloud Server Costs

Several key strategies can significantly reduce cloud spending. These strategies are interconnected and often require a holistic approach for maximum impact. Effective implementation relies on consistent monitoring, analysis, and adaptation to changing needs.

- Right-sizing Instances: Choosing the appropriate server size (instance type) is paramount. Over-provisioning leads to wasted resources and unnecessary expenses. Regularly review resource utilization metrics (CPU, memory, storage I/O) to identify opportunities for downsizing to smaller, more cost-effective instances. For example, if a server consistently operates at 30% CPU utilization, switching to a smaller instance could save substantial costs over time.

- Leveraging Spot Instances: Spot instances offer significant cost savings (up to 90% off on-demand prices) by using spare compute capacity. Because the provider can reclaim them at short notice (on AWS, with a two-minute warning), they are best suited to fault-tolerant applications and batch processing tasks that can be easily restarted. Careful planning and application design are necessary to mitigate the risk of interruptions.

- Utilizing Reserved Instances: For consistently running applications, reserved instances provide significant discounts compared to on-demand pricing. Committing to a usage term (1 or 3 years) in exchange for a lower hourly rate can result in considerable cost savings. The best choice depends on your predicted usage patterns and long-term strategy.

- Auto-Scaling: Implementing auto-scaling dynamically adjusts the number of instances based on demand. This prevents over-provisioning during low-traffic periods and ensures sufficient capacity during peak demand. Auto-scaling minimizes wasted resources and optimizes costs by automatically scaling up or down as needed.
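
This Python sketch makes right-sizing concrete by suggesting a smaller vCPU count from CPU utilization samples. It rests on several simplifying assumptions: load scales linearly with vCPU count, instance sizes come in powers of two, and a 70% target for 95th-percentile CPU is acceptable. Real instance families and workloads are rarely this tidy. The function only ever recommends staying at the current size or downsizing.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def recommend_vcpus(current_vcpus, cpu_samples, target_peak=70.0):
    """Suggest the smallest power-of-two vCPU count that keeps p95 CPU
    under target_peak percent, never exceeding the current size."""
    p95 = percentile(cpu_samples, 95)
    busy_vcpus = current_vcpus * p95 / 100  # vCPUs' worth of work at p95
    vcpus = 1
    while busy_vcpus / vcpus * 100 > target_peak and vcpus < current_vcpus:
        vcpus *= 2
    return min(vcpus, current_vcpus)
```

For an 8-vCPU server whose 95th-percentile CPU is 30%, the busy load is 2.4 vCPUs. The sketch suggests 4 vCPUs, which would run at about 60% under the same load.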

Cost Analysis of Different Cloud Server Pricing Models

Understanding the various pricing models offered by cloud providers (e.g., Amazon Web Services, Microsoft Azure, Google Cloud Platform) is essential for cost optimization. Each provider offers different pricing structures, and the optimal choice depends on your specific needs and usage patterns.

| Pricing Model | Description | Suitable for | Cost Considerations |
|---|---|---|---|
| On-Demand | Pay-as-you-go; billed by the hour or second. | Short-term projects, unpredictable workloads. | Can be expensive for long-term, consistent usage. |
| Reserved Instances | Pre-purchase instances at a discounted rate for a specified term. | Long-term, consistent workloads. | Requires commitment; cost savings depend on usage patterns. |
| Spot Instances | Use spare compute capacity at significantly reduced rates. | Fault-tolerant applications, batch processing. | Risk of interruption; requires application design considerations. |
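
The trade-off between on-demand and reserved pricing comes down to a break-even calculation. A reservation is billed for every hour of its term, so it only pays off above a certain utilization. The Python sketch below uses hypothetical hourly rates, not actual prices from any provider, and the common approximation of 730 hours per month.

```python
HOURS_PER_MONTH = 730  # common billing approximation (8,760 hours / 12)


def monthly_costs(on_demand_rate, reserved_rate, hours_used):
    """Compare on-demand vs. reserved cost for one instance over a month.
    The reserved instance is billed for every hour, used or not."""
    on_demand = on_demand_rate * hours_used
    reserved = reserved_rate * HOURS_PER_MONTH
    return on_demand, reserved


def break_even_hours(on_demand_rate, reserved_rate):
    """Monthly usage (hours) above which the reservation becomes cheaper."""
    return reserved_rate * HOURS_PER_MONTH / on_demand_rate
```

Take a hypothetical on-demand rate of $0.10/hour and an effective reserved rate of $0.06/hour. In that case `break_even_hours(0.10, 0.06)` is 438 hours, so the reservation is cheaper only if the instance runs more than 60% of the month.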

Reducing Cloud Server Expenses Without Compromising Performance

Cost reduction doesn’t necessitate sacrificing performance. Many strategies allow for cost optimization without impacting application functionality or user experience.

- Data Optimization: Efficiently managing data storage and retrieval can significantly reduce costs. This includes using appropriate storage classes (e.g., cheaper archival storage for infrequently accessed data), data compression, and regularly purging unnecessary data.

- Network Optimization: Optimizing network traffic can reduce bandwidth costs. Techniques include content delivery networks (CDNs) for distributing content closer to users, efficient data transfer protocols, and minimizing unnecessary data transfers.

- Monitoring and Alerting: Implementing robust monitoring and alerting systems allows for proactive identification of performance bottlenecks and resource inefficiencies. Early detection enables timely intervention, preventing escalating costs and performance degradation.

Efficient Utilization of Cloud Server Resources

Efficient resource utilization is the cornerstone of cost optimization. Several practices ensure that resources are used effectively, minimizing waste and maximizing value.

- Regular Resource Monitoring: Continuous monitoring of CPU utilization, memory usage, disk I/O, and network traffic provides valuable insights into resource consumption patterns. This data informs decisions regarding right-sizing instances and optimizing resource allocation.

- Scheduled Tasks and Automation: Automating tasks such as backups, updates, and scaling operations reduces manual intervention and ensures efficient resource utilization. Scheduling non-critical tasks for off-peak hours minimizes impact on performance and reduces costs.

- Resource Tagging and Cost Allocation: Implementing a robust tagging system allows for accurate tracking and allocation of cloud costs to specific projects or departments. This facilitates cost analysis, identifies areas for optimization, and improves budget management.
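
At its core, tag-based cost allocation is a group-by over billing line items. In this Python sketch, the line-item format (a `cost` field plus a `tags` dictionary) and the `project` tag key are assumptions for illustration, not a provider’s billing export schema. Grouping untagged spend separately makes gaps in tagging coverage visible.

```python
from collections import defaultdict


def cost_by_tag(line_items, tag_key="project"):
    """Sum costs per value of one tag; items without the tag go to 'untagged'."""
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "untagged")
        totals[owner] += item["cost"]
    return dict(totals)
```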

Scalability and Flexibility of Cloud-Based Servers

Cloud-based servers offer a significant advantage over traditional on-premise servers due to their inherent scalability and flexibility. This allows businesses to adapt their computing resources to meet fluctuating demands, optimize costs, and ensure consistent performance without the limitations of physical infrastructure. The dynamic nature of cloud computing provides a level of responsiveness impossible with static, pre-allocated resources.

Cloud-based servers enable scalability and flexibility through a pay-as-you-go model and on-demand resource provisioning. Instead of investing in and maintaining a fixed amount of hardware, businesses can easily adjust their computing power, storage, and bandwidth based on real-time needs. This dynamic allocation of resources ensures optimal performance during peak demand and avoids wasted resources during periods of low activity. The underlying infrastructure is managed by the cloud provider, abstracting away the complexities of hardware management and allowing businesses to focus on their core applications.

Scaling Cloud Server Resources

Scaling cloud server resources means adjusting the capacity available to an application, either vertically or horizontally. Vertical scaling (scaling up or down) changes the resources of a single instance, such as CPU, RAM, and storage, and often requires a restart to move to a new instance type. Horizontal scaling (scaling out or in) adds or removes instances, typically behind a load balancer. Either process can be automated or manually initiated, depending on the specific needs and configurations. Auto-scaling features, commonly offered by cloud providers, typically scale horizontally based on predefined metrics such as CPU utilization, network traffic, or database load. This keeps applications performing well during unexpected surges in demand while minimizing costs during periods of low activity. Manual scaling allows for more direct control, which is particularly useful for predictable fluctuations or planned events. For example, a retailer might manually scale up its servers in anticipation of a major holiday sale, then scale back down afterward.
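
The core of metric-based horizontal auto-scaling can be approximated in a few lines. The Python sketch below is a simplified form of target tracking. It resizes the fleet in proportion to the ratio between the observed average metric and its target, then clamps the result to assumed minimum and maximum group sizes. Real implementations add cooldowns, warm-up periods, and smoothing, all of which are omitted here.

```python
import math


def desired_capacity(current_instances, current_metric, target_metric,
                     min_size=1, max_size=10):
    """Simplified target tracking: scale the fleet by observed/target,
    round up so the target is not exceeded, then clamp to group limits."""
    if current_instances <= 0 or target_metric <= 0:
        raise ValueError("instances and target must be positive")
    raw = math.ceil(current_instances * current_metric / target_metric)
    return max(min_size, min(max_size, raw))
```

For example, 4 instances averaging 90% CPU against a 60% target give `desired_capacity(4, 90, 60) == 6`. The same 4 instances at 15% CPU would shrink to 1.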

Examples of Crucial Cloud Server Scalability

Several scenarios highlight the critical role of cloud server scalability. A rapidly growing startup might leverage cloud scalability to accommodate a sudden increase in user traffic without significant upfront investment in hardware. A media company streaming a live event would need the ability to instantly scale resources to handle a large influx of viewers. Similarly, a financial institution processing large volumes of transactions during peak trading hours relies on cloud scalability to maintain system responsiveness and prevent service disruptions. In each case, the ability to quickly and efficiently scale resources up or down is essential for maintaining performance, meeting customer demands, and controlling costs.

Comparison of Cloud and Traditional Server Scalability

Traditional on-premise servers require significant upfront investment in hardware and often involve lengthy lead times for provisioning additional resources. Scaling a traditional server infrastructure usually involves purchasing and installing new hardware, a process that can be costly, time-consuming, and disruptive to operations. In contrast, cloud-based servers can typically be provisioned or resized within minutes, allowing businesses to react quickly to changing demands without the limitations of fixed physical infrastructure. This agility improves responsiveness to market changes and operational efficiency. The difference is particularly significant in rapidly evolving markets where flexibility and speed are paramount.

Cloud Server Management and Monitoring

Effective management and monitoring are crucial for ensuring the performance, security, and availability of cloud-based servers. These processes involve utilizing various tools and techniques to proactively identify and resolve potential issues, optimize resource utilization, and maintain a stable and secure operating environment. Without robust management and monitoring, even the most well-designed cloud infrastructure can be susceptible to performance degradation, security breaches, and unexpected downtime.

Cloud server management and monitoring encompass a wide range of activities, from configuring and deploying servers to tracking their performance and security. It’s a continuous cycle of observation, analysis, and action, aimed at optimizing resource allocation and preventing problems before they impact users or applications. This proactive approach minimizes downtime, improves application performance, and ultimately reduces operational costs.

Tools and Techniques for Managing Cloud-Based Servers

Cloud providers offer a suite of management tools integrated into their platforms. These tools provide a centralized interface for managing various aspects of cloud servers, including provisioning, configuration, scaling, and monitoring. Examples include AWS Management Console, Azure Portal, and Google Cloud Console. Beyond provider-specific tools, numerous third-party solutions offer enhanced capabilities and integrations, such as automated deployment, configuration management, and advanced monitoring. Popular options include Terraform for infrastructure-as-code; Ansible, Chef, and Puppet for configuration management; and Datadog, Prometheus, and Grafana for monitoring and visualization. The selection of tools depends heavily on the specific needs and complexity of the cloud infrastructure.

Importance of Server Monitoring and Logging

Server monitoring and logging are fundamental to maintaining the health and stability of cloud servers. Monitoring provides real-time insights into server performance, resource utilization (CPU, memory, disk I/O, network traffic), and application health. Logging captures events and errors, providing a historical record that aids in troubleshooting and identifying recurring issues. This combined approach allows for proactive identification of potential problems, enabling administrators to take corrective action before they escalate and impact service availability. Comprehensive logging also plays a vital role in security auditing and incident response.

Proactive Monitoring and Maintenance Plan for Cloud Servers

A proactive monitoring and maintenance plan should include several key components. Regularly scheduled backups are essential to ensure data recovery in case of failures. Automated alerts should be configured to notify administrators of critical events, such as high CPU utilization, disk space exhaustion, or security breaches. Performance testing and capacity planning help determine the resources needed to meet current and future demands. Regular security patching and updates are critical to mitigate vulnerabilities and prevent security incidents. Finally, a well-defined incident response plan is necessary to handle unexpected outages or security breaches effectively. This plan should detail the steps to be taken, roles and responsibilities, and communication protocols. For example, a company might schedule weekly backups, daily performance checks, and monthly security audits.
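
Automated alerts are usually evaluated over several consecutive datapoints, so that a single spike does not page anyone. The Python sketch below shows the idea. The metric names, threshold values, and three-period window are hypothetical defaults to be tuned per workload.

```python
# Hypothetical alert thresholds (percent); tune them to your own workload.
THRESHOLDS = {"cpu_percent": 85.0, "disk_used_percent": 90.0}


def should_alert(metric: str, datapoints: list, periods: int = 3) -> bool:
    """Return True only if the last `periods` datapoints all exceed the
    metric's threshold, which filters out one-off spikes."""
    threshold = THRESHOLDS[metric]
    recent = datapoints[-periods:]
    return len(recent) == periods and all(value > threshold for value in recent)
```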

Using Monitoring Tools to Identify and Resolve Server Issues

Monitoring tools provide dashboards and alerts that highlight potential issues. For instance, a sudden spike in CPU usage might indicate a poorly performing application or a denial-of-service attack. High error rates in application logs could point to a bug in the code or a configuration problem. Network monitoring tools can reveal connectivity issues or bandwidth bottlenecks. By correlating data from different monitoring sources (system metrics, application logs, network traffic), administrators can pinpoint the root cause of problems. Once identified, the issue can be addressed through various methods, including code fixes, configuration changes, scaling resources, or contacting the cloud provider’s support team. For example, if a database server shows high disk I/O, the administrator might investigate slow queries, add missing indexes or optimize the schema, or move to a storage volume with higher provisioned IOPS.

Cloud Server Deployment and Configuration

Deploying and configuring a cloud-based server involves several key steps, from choosing the right platform and instance type to optimizing performance and ensuring security. Understanding these processes is crucial for leveraging the full potential of cloud computing. This section details the process, offering best practices for optimal results.

Step-by-Step Cloud Server Deployment

Deploying a cloud server typically involves selecting a cloud provider (such as AWS, Azure, or Google Cloud), choosing an instance type based on your needs (compute power, memory, storage), configuring the operating system, and setting up necessary security measures. The specific steps vary slightly depending on the provider, but the general process remains consistent.

- Account Creation and Region Selection: Create an account with your chosen cloud provider and select the geographic region closest to your target users to minimize latency. Consider factors like data residency regulations when making this choice.

- Instance Type Selection: Choose an instance type that meets your application’s requirements. Consider factors such as CPU cores, RAM, storage type (SSD vs. HDD), and network performance. For example, a database server usually benefits from more RAM and fast SSD storage, whereas a stateless web server can often run on a smaller, compute-oriented instance and scale out when traffic grows.

- Operating System Selection: Select an operating system (OS) image. Most providers offer a wide range of options, including various Linux distributions (like Ubuntu, CentOS, Amazon Linux) and Windows Server. Choose an OS that is compatible with your application and has the necessary libraries and tools.

- Security Group Configuration: Configure security groups to control inbound and outbound network traffic to your instance. This is crucial for protecting your server from unauthorized access. Only open necessary ports (e.g., port 80 for HTTP, port 443 for HTTPS) and restrict access based on IP addresses or security group rules.

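- Key Pair Generation: Generate a key pair to access your instance securely. Linux instances use it for SSH logins. On Windows instances (for example, on AWS), it is used to decrypt the initial administrator password for RDP. Keep your private key secure, as anyone holding it can access your server.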

- Instance Launch: Launch your instance. This will provision the necessary resources and make your server accessible.

- Post-Launch Configuration: Once launched, connect to your instance using your key pair and perform any necessary post-launch configurations, such as installing applications, configuring services, and setting up backups.

Best Practices for Cloud Server Configuration

Optimizing cloud server configuration for performance and security requires attention to several key areas. Proper configuration significantly impacts resource utilization, application responsiveness, and overall cost-effectiveness.

- Regular Software Updates: Regularly update the operating system and applications to patch security vulnerabilities and improve performance. Automated update mechanisms are highly recommended.

- Resource Monitoring and Scaling: Continuously monitor CPU utilization, memory usage, and network traffic to identify bottlenecks and adjust resources as needed. Utilize cloud provider’s scaling features to automatically adjust resources based on demand.

- Load Balancing: Distribute traffic across multiple instances to improve availability and performance, particularly during peak demand. Cloud providers offer managed load balancing services that simplify this process.

- Caching Strategies: Implement caching mechanisms (e.g., Redis, Memcached) to reduce database load and improve application response times. Caching frequently accessed data can significantly boost performance.

- Database Optimization: Optimize database queries and schema design to minimize database load and improve query performance. Use appropriate indexing strategies and consider database replication for high availability.
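
The caching practice above usually follows the cache-aside pattern. The application reads from the cache first, falls back to the database on a miss, and then populates the cache. In this Python sketch, an in-memory `TTLCache` stands in for Redis or Memcached, and `load_from_db` is a hypothetical callable representing the database query.

```python
import time


class TTLCache:
    """In-memory stand-in for a cache such as Redis or Memcached."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable clock makes expiry testable
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._store[key]  # expired: evict lazily on read
            return None
        return value

    def set(self, key, value):
        self._store[key] = (value, self.clock() + self.ttl)


def get_product(product_id, cache, load_from_db):
    """Cache-aside read: try the cache, fall back to the database, then populate."""
    cached = cache.get(product_id)
    if cached is not None:
        return cached
    value = load_from_db(product_id)
    cache.set(product_id, value)
    return value
```

The TTL bounds how stale a cached value can become. Shorter TTLs trade more database reads for fresher data.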

Cloud Server Deployment Methods

Different deployment methods cater to varying needs and complexities. Choosing the right method is crucial for efficient and reliable deployments.

- Image-Based Deployment: This involves using pre-configured server images (also known as AMIs in AWS) provided by the cloud provider or created by the user. This method is quick and efficient for deploying standard configurations.

- Infrastructure-as-Code (IaC): IaC uses tools like Terraform or CloudFormation to define and manage infrastructure as code. This approach allows for automated and repeatable deployments, ensuring consistency across environments.

- Containerization (Docker, Kubernetes): Containerization packages applications and their dependencies into isolated units, enabling consistent deployments across different environments. Kubernetes orchestrates the deployment and management of containers at scale.
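
Infrastructure-as-code tools work by comparing the desired state declared in code with the infrastructure that actually exists. The Python sketch below illustrates that comparison conceptually. It is not how Terraform or CloudFormation are implemented, and the resource names and settings dictionaries are hypothetical.

```python
def plan(desired, actual):
    """Compare desired vs. actual resources (name -> settings dict) and
    list the actions needed to converge, in the spirit of an IaC 'plan'."""
    actions = []
    for name in sorted(desired.keys() - actual.keys()):
        actions.append(("create", name))  # declared but missing
    for name in sorted(desired.keys() & actual.keys()):
        if desired[name] != actual[name]:
            actions.append(("update", name))  # exists but drifted
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(("delete", name))  # exists but no longer declared
    return actions
```

Running the same plan twice against converged infrastructure yields no actions. This repeatability is what makes IaC deployments consistent across environments.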

Setting Up a Basic Web Server on a Cloud Platform

Setting up a basic web server, such as Apache or Nginx, on a cloud platform involves installing the web server software, configuring it to serve web pages, and ensuring appropriate security measures are in place.

- Install Web Server Software: Use the appropriate package manager (e.g., apt for Debian/Ubuntu, yum for CentOS/RHEL) to install the chosen web server software (Apache or Nginx). For example, on Ubuntu, you would use the command: `sudo apt update && sudo apt install apache2`.

- Configure Web Server: Configure the web server to serve your web pages from a specific directory. This typically involves modifying the web server’s configuration files. For example, with Apache, you might need to edit the `apache2.conf` file.

- Deploy Web Pages: Upload your web pages to the designated directory. Use tools like `scp` or `rsync` to securely transfer files to your server.

- Test Web Server: Access your web server using its public IP address or domain name in a web browser to verify that your web pages are being served correctly.

- Security Hardening: Implement security measures such as enabling HTTPS using SSL/TLS certificates and configuring firewalls to restrict access to only necessary ports.

Data Backup and Recovery in Cloud Servers

Data backup and recovery are critical aspects of maintaining business continuity and data integrity for any organization relying on cloud-based servers. A robust strategy ensures data protection against various threats, including hardware failures, software glitches, cyberattacks, and human error. This section explores effective strategies, methods, and planning for data backup and recovery in cloud server environments, as well as potential challenges.

Strategies for Backing Up Data Stored on Cloud-Based Servers

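Several strategies contribute to a comprehensive cloud data backup plan. Examples include automating backups on a fixed schedule, storing copies in a separate region or account, and following the 3-2-1 rule (three copies of the data, on two different types of storage, with one copy off-site). These strategies are usually combined to ensure redundancy and resilience, and a multi-layered approach, using different backup types and locations, is generally recommended.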

Backup and Recovery Methods for Cloud Environments

Cloud environments offer diverse backup and recovery methods. These methods vary in their complexity, cost, and recovery time objectives (RTOs) and recovery point objectives (RPOs). Choosing the right method depends on factors like the sensitivity of the data, the organization’s budget, and its recovery requirements. Common methods include:

- Snapshot backups: These are point-in-time copies of a server’s disks and, depending on the provider, its configuration. They are fast to create but are often a poor fit for long-term archival, because they usually reside in the same account and region as the source and their storage costs accumulate over time.

- Full backups: These are complete copies of all data on the server. They take longer to create and require more storage but offer a complete recovery point.

- Incremental backups: These back up only the changes made since the last full or incremental backup. They are efficient in terms of storage and time but require a full backup as a base.

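- Differential backups: These back up all changes since the last full backup. Each differential grows until the next full backup, so they take longer and use more storage than incremental backups. Restoring is faster, however, because it requires only the last full backup plus the latest differential.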

- Cloud-based backup services: These services offer offsite backup and recovery solutions, providing redundancy and protection against data loss due to on-site disasters. Examples include AWS Backup, Azure Backup, and Google Cloud Backup and DR.
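
The difference between incremental and differential backups shows up most clearly at restore time. This Python sketch returns the backups needed to restore the latest state, assuming a schedule that does not mix the two types. For incremental backups, that is the last full backup plus every incremental since. For differential backups, it is the last full backup plus only the newest differential.

```python
def restore_chain(kinds):
    """kinds: chronological backup types, each 'full', 'incr' or 'diff'.
    Returns indices of the backups needed to restore the latest state.
    Requires at least one full backup; mixed chains are rejected."""
    last_full = max(i for i, kind in enumerate(kinds) if kind == "full")
    since_full = list(range(last_full + 1, len(kinds)))
    if all(kinds[i] == "incr" for i in since_full):
        return [last_full] + since_full  # full plus every incremental since
    if all(kinds[i] == "diff" for i in since_full):
        return [last_full] + since_full[-1:]  # full plus the newest differential
    raise ValueError("mixed incremental/differential chain not supported")
```

With three daily backups after a weekly full, an incremental restore needs all four files. A differential restore needs only two.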

Data Backup and Recovery Plan for a Cloud-Based Server Infrastructure

A well-defined data backup and recovery plan is essential. This plan should outline the following:

- Backup frequency: This should align with the organization’s RPO, defining how much data loss is acceptable. For critical data, frequent backups (e.g., hourly or daily) may be necessary. Less critical data might only require weekly or monthly backups.

- Backup types: The plan should specify which backup types (full, incremental, differential) will be used and the rationale behind the selection.

- Backup storage location: This should specify where backups will be stored, whether on-site, off-site, or in a cloud storage service. Geographic redundancy is often recommended for disaster recovery.

- Recovery procedures: The plan should detail the steps involved in restoring data from backups, including testing and validation.

- Roles and responsibilities: Clearly define who is responsible for different aspects of the backup and recovery process.

- Testing and review: Regular testing of the backup and recovery plan is crucial to ensure its effectiveness. The plan should also be reviewed and updated periodically to reflect changes in the infrastructure or business needs.
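Checking a backup schedule against the RPO is one part of this review that can be automated. The sketch below is an illustration only: it assumes you can list the completion times of successful backups (how you obtain them depends on your tooling) and reports the longest gap between them, which is the worst-case data loss.

```python
from datetime import datetime, timedelta
from typing import List, Optional


def worst_case_data_loss(backup_times: List[datetime],
                         now: Optional[datetime] = None) -> timedelta:
    """Longest window with no completed backup: the most data (in time) a failure could lose."""
    times = list(backup_times)
    if now is not None:
        times.append(now)  # a failure right now loses everything since the last backup
    times.sort()
    if len(times) < 2:
        raise ValueError("need at least two points in time to measure a gap")
    return max(later - earlier for earlier, later in zip(times, times[1:]))


def meets_rpo(backup_times: List[datetime], rpo: timedelta,
              now: Optional[datetime] = None) -> bool:
    return worst_case_data_loss(backup_times, now) <= rpo


start = datetime(2024, 1, 1)
# Hourly schedule in which the 03:00 backup failed.
completed = [start + timedelta(hours=h) for h in (0, 1, 2, 4, 5)]
print(worst_case_data_loss(completed))           # 2:00:00
print(meets_rpo(completed, timedelta(hours=1)))  # False
```

A single missed run is enough to break a one-hour RPO, which is why backup monitoring belongs in the plan alongside the schedule itself.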

Potential Challenges in Cloud Data Backup and Recovery

Despite the advantages of cloud computing, several challenges exist in cloud data backup and recovery. These challenges require careful consideration and proactive mitigation strategies.

- Data security and compliance: Ensuring the security and compliance of backed-up data is paramount, particularly with regulations like GDPR and HIPAA. Encryption and access control measures are crucial.

- Cost management: Cloud storage can be expensive, especially for large datasets and frequent backups. Careful planning and optimization of storage usage are essential.

- Network bandwidth limitations: Backing up and restoring large amounts of data can consume significant network bandwidth, potentially impacting other applications. This requires efficient bandwidth management strategies.

- Vendor lock-in: Choosing a specific cloud provider can lead to vendor lock-in, making it difficult to switch providers later. Carefully evaluating the long-term implications is crucial.

- Complexity of cloud environments: Managing backups across multiple cloud services and regions can be complex, requiring specialized tools and expertise.
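The bandwidth challenge is easy to underestimate, so a back-of-the-envelope calculation helps when setting RTOs. This sketch assumes decimal units and an arbitrary 80% link efficiency; real throughput depends on protocol overhead, parallelism, and competing traffic.

```python
def transfer_hours(size_tb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Rough hours needed to move size_tb terabytes over a link_gbps network link.

    Uses decimal units (1 TB = 10**12 bytes, 1 Gbps = 10**9 bits/s). `efficiency`
    is an assumed fraction of the nominal bandwidth actually achieved.
    """
    if size_tb < 0 or link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid size, bandwidth or efficiency")
    bits = size_tb * 10**12 * 8
    usable_bits_per_second = link_gbps * 10**9 * efficiency
    return bits / usable_bits_per_second / 3600


for gbps in (1, 10):
    print(f"10 TB over {gbps} Gbps: ~{transfer_hours(10, gbps):.1f} h")
# 10 TB over 1 Gbps: ~27.8 h
# 10 TB over 10 Gbps: ~2.8 h
```

If a full restore over the available link takes longer than the RTO allows, the plan needs a different approach, such as restoring into the same region or keeping a warm replica.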

Integration with Other Cloud Services

Cloud-based servers rarely operate in isolation. Much of their value comes from integration with the provider’s other services, which lets teams assemble flexible, scalable infrastructure from managed building blocks. This integration simplifies data management, extends application functionality, and streamlines workflows, which can improve productivity and reduce costs.

The ability to connect cloud servers with various services is a key differentiator in the cloud computing landscape. This interoperability facilitates the creation of complex and robust applications by leveraging specialized services rather than building everything from scratch. This approach promotes efficiency, reduces development time, and minimizes the need for extensive in-house expertise in every aspect of IT infrastructure.

Database Integration

Integrating cloud servers with cloud-based databases like Amazon RDS, Google Cloud SQL, or Azure SQL Database offers significant advantages. These managed database services handle the complexities of database administration, including backups, security patching, and scaling, freeing up server resources and development time. For example, a web application hosted on an AWS EC2 instance can connect to an Amazon RDS for MySQL database through the endpoint hostname that RDS provides, using the standard MySQL client protocol. With options such as Multi-AZ deployments and read replicas, this setup can provide high availability and scalability for the data the application relies on. The benefits include improved performance due to optimized database management, enhanced security through built-in database security features, and simplified administration by offloading database management tasks.
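One practical consequence of a Multi-AZ failover is that open database connections drop while the endpoint moves to the standby, so the application should reconnect rather than fail. The following is a minimal sketch of capped exponential backoff with jitter. `connect` stands in for your driver's connect call with the RDS endpoint, and because each driver raises its own exception classes, which ones to pass as `retry_on` is an assumption you should adapt.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def connect_with_retry(connect: Callable[[], T],
                       retry_on: Tuple[Type[BaseException], ...] = (ConnectionError,),
                       attempts: int = 5,
                       base_delay: float = 0.5,
                       max_delay: float = 8.0,
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Call connect() until it succeeds, using capped exponential backoff with full jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return connect()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller see the last error
            # Double the delay ceiling each time, cap it, then pick a random delay
            # below it so many clients reconnecting after a failover do not retry in lockstep.
            sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))
    raise AssertionError("unreachable")
```

The `sleep` parameter is injectable so the retry logic can be tested without real waiting.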

Storage Integration

Cloud storage services, such as Amazon S3, Google Cloud Storage, and Azure Blob Storage, provide scalable and cost-effective solutions for storing various types of data. Integrating cloud servers with these services allows for easy access to large amounts of data, enabling applications to handle large files, multimedia content, or backups. For instance, a media streaming service hosted on a Google Compute Engine instance might store video files in Google Cloud Storage, allowing for on-demand streaming to users worldwide. This integration offers benefits such as reduced storage costs compared to on-premise solutions, increased scalability to handle growing data volumes, and improved data availability through redundancy and geographic distribution.
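Large files such as videos are usually uploaded to object storage in parts. The sketch below plans the byte ranges for an Amazon S3 multipart upload using S3's documented limits (at least 5 MiB per part except the last, at most 5 GiB per part, at most 10,000 parts). The 8 MiB preferred part size is an arbitrary choice, and in practice the AWS SDKs' transfer utilities do this splitting for you.

```python
from typing import List, Tuple

MIB = 1024 ** 2
MIN_PART_SIZE = 5 * MIB         # S3 minimum size for every part except the last
MAX_PART_SIZE = 5 * 1024 ** 3   # S3 maximum part size (5 GiB)
MAX_PARTS = 10_000              # S3 maximum number of parts in one upload


def plan_parts(object_size: int, preferred_part_size: int = 8 * MIB) -> List[Tuple[int, int]]:
    """Split object_size bytes into (offset, length) ranges for a multipart upload."""
    if object_size <= 0:
        raise ValueError("object_size must be positive")
    # Enlarge parts if the preferred size would exceed the part-count limit
    # (ceiling division keeps the count at or below MAX_PARTS).
    smallest_allowed = (object_size + MAX_PARTS - 1) // MAX_PARTS
    part_size = max(MIN_PART_SIZE, preferred_part_size, smallest_allowed)
    if part_size > MAX_PART_SIZE:
        raise ValueError("object is too large for a single multipart upload")
    return [(offset, min(part_size, object_size - offset))
            for offset in range(0, object_size, part_size)]


parts = plan_parts(20 * MIB)
print([length // MIB for _, length in parts])  # [8, 8, 4]
```

Because each part is independent, parts can be uploaded in parallel and a failed part can be retried without re-sending the whole file.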

Application Integration

Cloud servers can integrate with a wide range of other cloud applications, such as message queues (Amazon SQS, Google Cloud Pub/Sub, Azure Service Bus), serverless functions (AWS Lambda, Google Cloud Functions, Azure Functions), and SaaS applications. For example, a retail application running on an Azure Virtual Machine might use Azure Service Bus to handle asynchronous communication between different application components. Another example could involve integrating a CRM system (e.g., Salesforce) with a cloud server using APIs to synchronize customer data and automate workflows. This type of integration enhances application functionality, improves operational efficiency, and promotes better data consistency across different systems.
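The decoupling that a message queue provides can be illustrated without any cloud account. This sketch uses Python's in-process `queue.Queue` as a stand-in for a managed broker such as Azure Service Bus: the producer enqueues orders and moves on, a worker processes them asynchronously, and messages that keep failing are moved to a dead-letter list after a hypothetical `max_deliveries` limit, loosely mirroring how managed brokers dead-letter messages that repeatedly fail.

```python
import queue
import threading
from typing import Any, Callable, List


def run_worker(q: "queue.Queue[Any]", handle: Callable[[Any], None],
               dead_letter: List[Any], max_deliveries: int = 3) -> None:
    """Consume (message, deliveries) items until a None sentinel arrives.

    A failed message is re-queued; after max_deliveries attempts it is moved
    to dead_letter instead.
    """
    while True:
        item = q.get()
        try:
            if item is None:
                return
            message, deliveries = item
            try:
                handle(message)
            except Exception:
                if deliveries + 1 >= max_deliveries:
                    dead_letter.append(message)
                else:
                    q.put((message, deliveries + 1))  # re-queue for another attempt
        finally:
            q.task_done()


if __name__ == "__main__":
    processed: List[int] = []
    dead: List[Any] = []

    def handle_order(order: dict) -> None:
        if order["id"] == 2:
            raise RuntimeError("payment service unavailable")  # simulated failure
        processed.append(order["id"])

    orders: "queue.Queue[Any]" = queue.Queue()
    worker = threading.Thread(target=run_worker, args=(orders, handle_order, dead))
    worker.start()
    for order_id in (1, 2, 3):
        orders.put(({"id": order_id}, 0))  # the producer does not wait for processing
    orders.join()     # wait until every message, including retries, is settled
    orders.put(None)  # tell the worker to stop
    worker.join()
    print(processed, dead)  # [1, 3] [{'id': 2}]
```

A failing message never blocks the others: order 3 is processed while order 2 is retried and eventually set aside for inspection.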

API-Based Integration

Application Programming Interfaces (APIs) are crucial for integrating cloud servers with other platforms. APIs provide a standardized way for different systems to communicate and exchange data. Most cloud providers offer comprehensive APIs for their services, allowing developers to automate tasks, manage resources, and integrate their applications with various cloud platforms. For example, the AWS SDKs (Software Development Kits) provide libraries and tools for interacting with various AWS services, including EC2, S3, and RDS, from various programming languages. This enables developers to write scripts or applications that automatically provision servers, upload data, or manage databases. Similarly, Google Cloud Platform and Microsoft Azure offer robust APIs and SDKs to facilitate seamless integration with their respective services. The benefits of API-based integration include increased automation, improved interoperability, and enhanced flexibility in building and deploying cloud-based applications.

Disaster Recovery and Business Continuity

Cloud-based servers offer significant advantages in ensuring disaster recovery and business continuity for organizations of all sizes. Their inherent scalability, redundancy features, and geographically distributed infrastructure provide a robust foundation for mitigating risks associated with various disruptive events. Effective planning and implementation of disaster recovery strategies are crucial to leverage these benefits fully.

Cloud servers play a pivotal role in disaster recovery planning by offering readily available, scalable resources that can be quickly deployed in the event of an outage. This minimizes downtime and ensures business operations can continue with minimal disruption. The ability to replicate data across multiple regions and utilize geographically diverse data centers is a cornerstone of a comprehensive cloud-based disaster recovery strategy.

Disaster Recovery Plan for a Cloud-Based Application

A comprehensive disaster recovery plan for a cloud-based application should detail procedures for responding to various scenarios, including natural disasters, cyberattacks, and hardware failures. The plan should define roles and responsibilities, communication protocols, and recovery time objectives (RTOs) and recovery point objectives (RPOs). Consider the following key elements:

Data Backup and Replication: Regular automated backups of application data and configurations should be stored in geographically separate regions to protect against data loss due to regional outages or data center failures. This includes employing techniques like replication to ensure data consistency and quick recovery.

Failover Mechanisms: Implementing automatic failover mechanisms is crucial. This ensures that if a primary server or data center fails, applications automatically switch to a secondary location with minimal downtime. Load balancers and failover clusters are key components of this process.

Testing and Validation: Regular testing of the disaster recovery plan is paramount. Simulated disaster scenarios allow for identifying weaknesses and refining the plan to ensure effectiveness when a real event occurs. This includes testing the failover mechanisms and verifying data recovery processes.

Communication Plan: Establish clear communication channels for notifying stakeholders during and after a disaster. This includes establishing a communication protocol for informing customers, employees, and other relevant parties about the incident and the recovery progress.

Recovery Procedures: The plan should detail step-by-step procedures for restoring services, including the order of restoration and the resources required for each step. This should be easily accessible and understandable to the recovery team.
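The order of restoration can be derived from service dependencies rather than maintained by hand. Here is a minimal sketch using Python's standard `graphlib` module (Python 3.9+); the service names and dependency map are hypothetical. It groups services into waves, where everything in one wave depends only on earlier waves and could be restored in parallel.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Set


def restoration_waves(depends_on: Dict[str, Set[str]]) -> List[List[str]]:
    """Group services into waves so each service's dependencies come back in an earlier wave.

    Services within one wave do not depend on each other and could be restored in parallel.
    """
    sorter = TopologicalSorter(depends_on)
    try:
        sorter.prepare()
    except CycleError as exc:
        raise ValueError(f"circular dependency: {exc.args[1]}") from exc
    waves: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical application: the services each service needs before it can start.
app = {
    "web": {"api"},
    "api": {"database", "cache"},
    "worker": {"database", "queue"},
}
for number, wave in enumerate(restoration_waves(app), start=1):
    print(number, wave)
# 1 ['cache', 'database', 'queue']
# 2 ['api', 'worker']
# 3 ['web']
```

Keeping the dependency map under version control alongside the plan means the restoration order stays current as the architecture changes, and a circular dependency is caught before it blocks a real recovery.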

Redundancy and Failover Mechanisms in Cloud Environments

Redundancy and failover mechanisms are essential for ensuring high availability and business continuity in cloud environments. Redundancy involves creating multiple instances of critical components, such as servers, databases, and network connections. This ensures that if one component fails, others can take over seamlessly. Failover mechanisms automate the process of switching to redundant components when a failure occurs, minimizing downtime.
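The benefit of redundancy can be quantified. Assuming replicas fail independently, a service that stays up while any one of n replicas is up has availability `1 - (1 - a) ** n`, where `a` is a single replica's availability. With a = 0.99, one replica is down roughly 87.6 hours a year, while two replicas cut expected downtime to under an hour. The independence assumption is exactly why replicas should sit in different availability zones: replicas that share a failure domain tend to fail together.

```python
def parallel_availability(single: float, replicas: int) -> float:
    """Availability of a service that is up while at least one replica is up.

    Assumes replica failures are independent: 1 - (1 - a) ** n.
    """
    if not 0 <= single <= 1 or replicas < 1:
        raise ValueError("single must be in [0, 1] and replicas >= 1")
    return 1 - (1 - single) ** replicas


HOURS_PER_YEAR = 24 * 365
for n in (1, 2, 3):
    a = parallel_availability(0.99, n)
    print(f"{n} replica(s): {a:.6f} available, ~{(1 - a) * HOURS_PER_YEAR:.2f} h/year down")
```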

Redundant Servers and Databases: Deploying multiple instances of servers and databases across availability zones protects against the failure of a single data center, and deploying them across regions also protects against regional outages. This keeps services running even if one location experiences a disruption.

Load Balancers: Load balancers distribute incoming traffic across multiple servers, preventing overload on any single server. They run periodic health checks, and when a server fails them, the load balancer stops routing traffic to it and sends requests to the remaining healthy servers.

Geographic Redundancy: Storing data and applications in multiple geographic regions provides protection against regional disasters such as earthquakes or hurricanes, so the business can continue operating even if one region is affected by a major event. Amazon S3, for example, offers Cross-Region Replication (CRR) to copy objects automatically to a bucket in another AWS Region.
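The health-check behavior of a load balancer can be sketched in a few lines. This is a simplified, hypothetical round-robin balancer, not any provider's implementation: a backend is taken out of rotation after a configurable number of consecutive failed health checks and returns after a single passing check (managed load balancers typically require several consecutive successes before reinstating a target).

```python
from typing import Dict, List


class RoundRobinBalancer:
    """Rotate requests across backends, skipping those that are failing health checks."""

    def __init__(self, backends: List[str], unhealthy_threshold: int = 2) -> None:
        if not backends:
            raise ValueError("need at least one backend")
        self.backends = list(backends)
        self.unhealthy_threshold = unhealthy_threshold
        self.consecutive_failures: Dict[str, int] = {b: 0 for b in self.backends}
        self._next = 0

    def record_health_check(self, backend: str, ok: bool) -> None:
        # A passing check clears the count; only consecutive failures accumulate.
        self.consecutive_failures[backend] = 0 if ok else self.consecutive_failures[backend] + 1

    def is_healthy(self, backend: str) -> bool:
        return self.consecutive_failures[backend] < self.unhealthy_threshold

    def pick(self) -> str:
        # Try each backend at most once, starting where the last pick left off.
        for _ in range(len(self.backends)):
            backend = self.backends[self._next]
            self._next = (self._next + 1) % len(self.backends)
            if self.is_healthy(backend):
                return backend
        raise RuntimeError("no healthy backends available")


lb = RoundRobinBalancer(["vm-a", "vm-b", "vm-c"])
for _ in range(2):
    lb.record_health_check("vm-b", ok=False)  # vm-b fails two checks in a row
print([lb.pick() for _ in range(4)])  # ['vm-a', 'vm-c', 'vm-a', 'vm-c']
```

Requiring consecutive failures before removing a backend avoids pulling a healthy server out of rotation because of a single dropped probe.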

Cloud Server Migration Strategies

Migrating applications and data to a cloud-based server environment can significantly enhance scalability, flexibility, and cost-effectiveness. However, a well-defined strategy is crucial for a smooth and successful transition. This section outlines various migration approaches, compares their suitability, and details the process, including potential challenges.

Migration Approaches

Several strategies exist for migrating applications and data to the cloud. The optimal approach depends on factors such as application complexity, dependencies, and business requirements. Choosing the right method can significantly impact the project’s timeline, cost, and risk.

Lift and Shift

Lift and shift, also known as “rehosting,” involves moving applications and data to the cloud with minimal or no code changes. It is usually the quickest approach and often the least expensive up front. It’s ideal for applications that are not heavily reliant on specific on-premise infrastructure and where refactoring is not a priority. However, it might not fully leverage the cloud’s capabilities and can result in higher ongoing operational costs than other strategies. For example, a legacy application running on a physical server could be migrated directly to a virtual machine (VM) in the cloud with minimal configuration changes.

Replatforming

Replatforming, sometimes called “lift, tinker, and shift,” involves migrating applications to the cloud while making targeted modifications to improve performance or take advantage of cloud-specific services. For instance, an application might be moved to a managed cloud database service, or adopt other managed services to reduce operational overhead. This approach sits between the two extremes: it takes somewhat longer than a pure lift-and-shift but typically yields better long-term cost efficiency, without the effort of a full refactor.

Refactoring

Refactoring involves significant code changes to optimize an application for the cloud environment. This approach is more time-consuming and complex but can lead to substantial improvements in scalability, performance, and cost efficiency. Microservices architecture, for example, is often employed during refactoring, allowing for independent scaling and deployment of application components. This is particularly useful for complex applications requiring high availability and scalability, although it requires a greater upfront investment of time and resources.

A Plan for On-Premise Server Migration

Migrating an on-premise server to a cloud environment typically involves several key steps:

- Assessment: Analyze the application, its dependencies, and data requirements to determine the best migration strategy.

- Planning: Develop a detailed migration plan, including timelines, resource allocation, and risk mitigation strategies.

- Preparation: Prepare the cloud environment, including network configuration and security settings.

- Migration: Execute the migration plan, using the chosen approach (lift and shift, replatforming, or refactoring).

- Testing: Thoroughly test the migrated application to ensure functionality and performance.

- Cutover: Switch over from the on-premise server to the cloud environment.

- Post-Migration Optimization: Monitor and optimize the application’s performance and resource utilization in the cloud.
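The testing step before cutover should include verifying that migrated data is complete and unchanged. A minimal sketch, assuming both copies are reachable as local or mounted directories, compares SHA-256 digests of every file; for databases or object stores you would compare row counts or provider-reported checksums instead.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def digest_tree(root: Path) -> Dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 hex digest."""
    digests: Dict[str, str] = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            h = hashlib.sha256()
            with path.open("rb") as f:
                # Read in 1 MiB chunks so large files do not need to fit in memory.
                for chunk in iter(lambda: f.read(1024 * 1024), b""):
                    h.update(chunk)
            digests[path.relative_to(root).as_posix()] = h.hexdigest()
    return digests


def compare_trees(source: Path, target: Path) -> List[str]:
    """Return a list of problems; an empty list means the two copies match."""
    src, dst = digest_tree(source), digest_tree(target)
    problems = [f"missing in target: {p}" for p in sorted(src.keys() - dst.keys())]
    problems += [f"unexpected in target: {p}" for p in sorted(dst.keys() - src.keys())]
    problems += [f"content differs: {p}" for p in sorted(src.keys() & dst.keys()) if src[p] != dst[p]]
    return problems
```

A call such as `compare_trees(Path("/mnt/onprem/data"), Path("/mnt/cloud/data"))` (hypothetical mount points) returning an empty list is a reasonable gate before switching traffic; any reported problem should block the cutover.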

Challenges and Risks of Cloud Server Migration

Cloud server migration presents several challenges and risks:

- Downtime: Migration can cause downtime if not properly planned and executed.

- Data Loss: Data loss is a significant risk if proper backup and recovery procedures are not in place.

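- Security Risks: Migration temporarily expands the attack surface (data in transit, new network endpoints, newly configured access permissions), so robust security measures are required throughout.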

- Cost Overruns: Poor planning can lead to unexpected costs.

- Integration Issues: Connecting the migrated application to systems that remain on-premise and to other cloud services can be complex.

- Lack of Expertise: Insufficient cloud expertise can hinder the migration process.

Choosing the Right Cloud Server for Specific Needs

Selecting the optimal cloud server requires careful consideration of your application’s specific requirements and long-term goals. The right choice ensures performance, scalability, and cost-effectiveness. A poorly chosen server can lead to underperformance, unnecessary expenses, and operational difficulties. This section provides a framework for making informed decisions.

Application Requirements and Server Selection

Matching cloud server capabilities to application needs is paramount. Factors to consider include processing power (CPU), memory (RAM), storage capacity (disk space), and network bandwidth. For example, a database-intensive application typically needs considerably more RAM and storage than a simple web server, while applications that process data in real time need low latency and often high bandwidth. Profiling and benchmarking the application’s resource consumption is crucial for accurate estimation, because it reveals the minimum resource levels needed for smooth operation. Skipping this step can lead to performance bottlenecks and system instability.
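As an illustration of turning profiling data into a sizing decision, the sketch below takes the 95th percentile of observed CPU and memory usage, adds 30% headroom, and picks the smallest entry from a catalog. The percentile, the headroom, and the three-entry catalog are all assumptions for illustration; real instance families and sizes vary by provider.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical instance catalog: (name, vCPUs, RAM in GiB), smallest first.
CATALOG: List[Tuple[str, int, int]] = [
    ("small", 2, 4),
    ("medium", 4, 16),
    ("large", 8, 32),
]


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need at least one sample and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def pick_instance(cpu_used: Sequence[float], ram_used_gib: Sequence[float],
                  p: float = 95, headroom: float = 0.3) -> str:
    """Smallest catalog entry covering the p-th percentile of observed usage plus headroom."""
    need_cpu = percentile(cpu_used, p) * (1 + headroom)
    need_ram = percentile(ram_used_gib, p) * (1 + headroom)
    for name, vcpus, ram_gib in CATALOG:
        if vcpus >= need_cpu and ram_gib >= need_ram:
            return name
    raise ValueError("no catalog entry is large enough; consider scaling out instead")


# 20 samples: CPU mostly at 1 vCPU with one brief spike; RAM steady at 10 GiB.
cpu = [1.0] * 19 + [3.5]
ram = [10.0] * 20
print(pick_instance(cpu, ram))  # medium
```

Sizing on a high percentile rather than the maximum ignores rare spikes like the 3.5 vCPU sample, which is usually cheaper; whether that is acceptable depends on how the application behaves when it briefly runs short.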

Decision-Making Framework for Cloud Provider and Configuration

Choosing a cloud provider involves evaluating factors beyond just price. Reliability, security features, geographical location of data centers (for latency and compliance), and the provider’s support infrastructure are all critical. A robust decision-making framework should incorporate these aspects. A structured approach might include creating a weighted scoring system, assigning points to each provider based on predefined criteria. For instance, a company prioritizing security might heavily weight the security features offered by different providers, while a company focused on cost might prioritize pricing models and discounts. Once a provider is selected, configuring the server involves choosing the appropriate instance type (e.g., virtual machine size, container configuration) and operating system.
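A weighted scoring system like the one described takes only a few lines of code. In this sketch, the providers, criteria, weights, and 1-5 ratings are all hypothetical; swapping the weights shows how a security-focused organization and a cost-focused organization can rank the same providers differently.

```python
from typing import Dict

Ratings = Dict[str, Dict[str, float]]


def weighted_scores(weights: Dict[str, float], ratings: Ratings) -> Dict[str, float]:
    """Weighted average rating per provider; the weights need not sum to 1."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    scores: Dict[str, float] = {}
    for provider, rating in ratings.items():
        missing = weights.keys() - rating.keys()
        if missing:
            raise ValueError(f"{provider} has no rating for {sorted(missing)}")
        scores[provider] = sum(w * rating[c] for c, w in weights.items()) / total
    return scores


# Hypothetical 1-5 ratings gathered during evaluation.
ratings = {
    "Provider A": {"security": 5, "cost": 3, "latency": 4, "support": 4},
    "Provider B": {"security": 3, "cost": 5, "latency": 4, "support": 3},
}
security_first = {"security": 0.4, "cost": 0.2, "latency": 0.2, "support": 0.2}
cost_first = {"security": 0.2, "cost": 0.4, "latency": 0.2, "support": 0.2}
for label, weights in (("security-first", security_first), ("cost-first", cost_first)):
    scores = weighted_scores(weights, ratings)
    print(label, max(scores, key=scores.get), scores)
```

With these numbers, Provider A wins under the security-first weights and Provider B under the cost-first weights, even though the underlying ratings are identical.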

Comparison of Cloud Server Types: Virtual Machines and Containers

Virtual machines (VMs) and containers represent two distinct approaches to cloud computing. VMs provide a complete virtualized environment, including an operating system, while containers virtualize only the application and its dependencies, sharing the underlying host OS. VMs offer greater isolation and security but consume more resources. Containers are more lightweight and efficient, leading to better resource utilization and faster deployment. The choice depends on the application’s needs. For applications requiring strict isolation and diverse operating systems, VMs are preferable. For microservices architectures and applications requiring rapid deployment and scalability, containers are generally more suitable.

Selecting Server Size and Resources for Specific Workloads

Determining the appropriate server size involves aligning resource allocation with expected workload demands. Cloud providers typically offer a range of instance types, each with varying CPU, RAM, and storage capacities. Estimating peak and average resource utilization is crucial. For instance, a web application expecting a surge in traffic during peak hours should be provisioned with sufficient resources to handle the increased load. Over-provisioning can be costly, while under-provisioning leads to performance degradation. Right-sizing involves continuously monitoring resource usage and adjusting server resources as needed to optimize cost and performance. Tools and techniques such as auto-scaling can dynamically adjust resources based on real-time demand.
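Auto-scaling policies commonly use target tracking: they keep a per-instance metric, such as average CPU utilization, near a target by scaling the instance count proportionally. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler, `ceil(current * metric / target)`, including its tolerance band (0.1 by default in Kubernetes) that avoids flapping. The replica bounds are illustrative, and other providers' auto-scalers differ in detail.

```python
import math


def desired_replicas(current: int, metric: float, target: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Target-tracking scale decision: replicas needed to bring the metric back to target.

    Uses ceil(current * metric / target), skips changes while the ratio is within
    `tolerance` of 1 to avoid flapping, and clamps to [min_replicas, max_replicas].
    """
    if current < 1 or target <= 0:
        raise ValueError("current must be >= 1 and target must be positive")
    ratio = metric / target
    if abs(ratio - 1.0) <= tolerance:
        return current
    return max(min_replicas, min(max_replicas, math.ceil(current * ratio)))


print(desired_replicas(4, metric=90, target=60))  # 6 (average CPU 90% vs a 60% target)
print(desired_replicas(4, metric=20, target=60))  # 2
print(desired_replicas(4, metric=63, target=60))  # 4 (within tolerance)
```

The upper bound doubles as a cost guard: a traffic surge or a runaway metric cannot scale the fleet beyond `max_replicas`.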

Key Questions Answered

What are the different types of cloud server instances?

Cloud providers offer various instance types optimized for specific workloads. These range from general-purpose instances suitable for a variety of tasks to specialized instances designed for high-performance computing, databases, or machine learning.

How do I choose the right cloud server provider?

Selecting a provider depends on factors like budget, required services (compute, storage, networking), geographic location, compliance needs, and technical expertise. Consider evaluating providers based on their service level agreements (SLAs), security features, and customer support.

What is serverless computing?

Serverless computing is a cloud execution model where the cloud provider dynamically manages the allocation of computing resources. Developers focus on writing code without worrying about server management. Functions are triggered by events, and the provider automatically scales resources based on demand.
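For a concrete picture of the model, here is a minimal AWS Lambda-style handler in Python. The `lambda_handler(event, context)` signature follows Lambda's Python convention; the event is a hand-written, trimmed-down S3 upload notification, and the processing step is a placeholder. The function holds no server state: the platform invokes it for each event and runs more copies concurrently as demand grows.

```python
import json
from typing import Any, Dict
from urllib.parse import unquote_plus


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Invoked by the platform for each event; there is no server process to manage."""
    uploaded = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications (e.g. spaces as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        uploaded.append(f"{bucket}/{key}")
    # Real work, such as generating thumbnails, would go here.
    print(json.dumps({"processed": uploaded}))  # stdout ends up in the function's logs
    return {"processed": uploaded}


# Local test with a trimmed-down, hand-written S3 event.
sample_event = {"Records": [{"s3": {"bucket": {"name": "uploads"},
                                    "object": {"key": "photos/cat+1.jpg"}}}]}
lambda_handler(sample_event, None)
```

Because the handler is a plain function, it can be unit-tested locally with sample events before being deployed.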

How secure is data on a cloud-based server?

Cloud providers employ robust security measures, but responsibility for data security is shared: the provider secures the underlying infrastructure, while the customer remains responsible for its data, identities, and configuration. Implement strong passwords, encryption, access controls, and regular security audits to protect your data. Choose providers with strong security certifications and compliance standards.